Eulag

All-Scales Geophysical Research Model

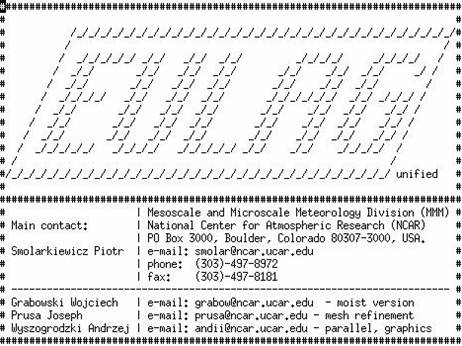

The script file that contains the EULAG model is written in Unix C-shell and has the following structure:

1) Header containing information about the current model version and the main contact information:

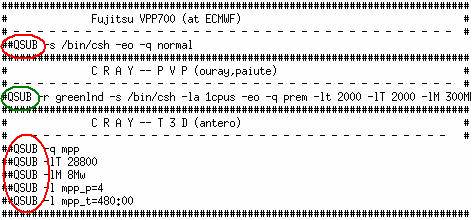

2) Default queuing options required on some known systems. Every line in this section starts with a hash character ("#"). You may submit the whole script to the queue system with one of the options activated. An active option (circled green) has a single hash before QSUB, while a double hash (circled red) comments it out. Most current systems require compilation on the front-end nodes before the job is sent to the queue, so these options are obsolete.
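The single/double hash convention can be illustrated with the fragment below. It is a sketch only: the `-q` queue names are placeholders, and the options actually present in the script may differ.

```csh
#!/bin/csh -f
# Single hash before QSUB: the directive is active and read by the queue system.
#QSUB -q regular
# Double hash before QSUB: the directive is commented out and ignored.
##QSUB -q premium
```

To the C-shell itself both lines are ordinary comments, so toggling a directive never changes how the script runs interactively.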

3) Shell parameters required for proper setup on different computer architectures. Some known systems at NCAR locations are detected automatically, and the proper setup for them is predefined. On other systems, each user must set the appropriate options before running the script. The section begins with the following label, and the following options must be set properly:

a) MACHINE - defines default options for a particular computer architecture (see the script for examples of known architectures). For an architecture not on the list, the user must define a new option, e.g. for the Linux-Cluster:

b) Options for selecting the working directory:

WORKD - working directory below the user level

JOBNAME - job name used to define the sub-working directory

OUTPUTDIR - output directory where the output and graphics are copied

DIR - final working directory

The script may detect the user name, so the same script can be used to set unique working locations for different users, e.g. on the sdf1.rap.ucar.edu LINUX system:

setenv WORKD /raid

The final working directory is created under the name held in the DIR variable. By default the output directory is the directory in which the script is located (${cwd}); on some systems, however, the working directory DIR and the output directory OUTPUTDIR are set to the same location by default because of system restrictions. The script checks whether the DIR location exists (creating it otherwise) and changes directory to DIR:

if(! -d $DIR ) then
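The directory handling described above might look roughly like the following. This is a minimal sketch, not the script's actual code: the `/tmp/eulag_demo` root, the job name, and the way DIR is composed from WORKD, the user name and JOBNAME are all assumptions made for illustration.

```csh
#!/bin/csh -f
# Sketch only: paths and the composition of DIR are assumptions.
set me = `whoami`                      # detect the user name
setenv WORKD /tmp/eulag_demo           # hypothetical working root
setenv JOBNAME test_run                # hypothetical job name
setenv OUTPUTDIR ${cwd}                # default: directory the script was started from
setenv DIR ${WORKD}/${me}/${JOBNAME}   # assumed layout of the final working directory

# Create the working directory if it does not exist yet, then move there.
if ( ! -d $DIR ) then
    mkdir -p $DIR
endif
cd $DIR
echo "working in $DIR, output goes to $OUTPUTDIR"
```

Including the user name in the path is what lets several users run the same unmodified script without overwriting each other's files.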

After changing to the working directory, the user may copy all required input files, e.g. topography, surface parameters, and data sets containing the environmental profiles used for model initialization and boundary conditions.

c) Parameters required by the compiler to work in different environments (serial or parallel) or with different compiler options and libraries:

MESSG - sets the SERIAL/PARALLEL job mode; see the script for further requirements and system availability. Note that the SHMEM option is no longer developed because of the lack of supported computer architectures. Choose MPI for parallel work, or ONE for serial mode:

NPE N - number of processors for MPP mode (if MESSG is ONE, then NPE = 1)

NCPUS N - number of processors for multitasking (requires changes in the compiler options; most current systems do not support this mode, so keep the default setting of 1 processor)

WORD - length of the default floating-point (real) word; you may select:
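As an illustration, a consistent set of these parameters might be written as follows. This is a sketch under assumptions: the use of `setenv` and the value NPE = 4 are illustrative, and the WORD values 4 and 8 are taken from the tests that appear in the compile section of the script (where 8 adds the `-r8` compiler flag).

```csh
#!/bin/csh -f
# Illustrative settings; NPE = 4 is an arbitrary example value.
setenv MESSG MPI      # MPI = parallel mode, ONE = serial mode
setenv NPE 4          # number of processors for MPP mode
setenv NCPUS 1        # multitasking: keep the default of 1
setenv WORD 4         # 4 or 8, as tested in the compile section

# A serial run implies a single processor.
if ( $MESSG == ONE ) setenv NPE 1
echo "MESSG=$MESSG NPE=$NPE NCPUS=$NCPUS WORD=$WORD"
```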

SIGNAL_TRAP - trap error signals (rarely used)

PERFM - test model performance (rarely used)

d) The following parameters are required by the queue systems on some architectures.

BATCH - selects whether the job is sent to the queue or run in interactive mode. On most NCAR systems the user is required to work in batch mode. These parameters are then used in the scripts sent to the queue systems (run_paral for parallel jobs, run_serial for serial jobs):

NTIME - time limit for your batch job, formats: HH:MM:SS or HH:MM

PROJECT - project number for charges

QUEUE - queue name (see NCAR queue names above)

NNODE - number of nodes (see the queue system manual for details)

DNODE - number of processors per node (only on the bluesky system)

e) Shell options for graphics - see the script for additional requirements and restrictions.

ANALIZ - selects either a production run or a postprocessing analysis

NCARG - plot with NCAR Graphics output (0 - no plot)

COLOR - color plot with NCAR Graphics output (0 - black/white plot)

TURBL - turbulence statistics with NCAR Graphics (0 - no plot)

SPCTR - spectral plot with NCAR Graphics (0 - no plot)

VORTX - vortex plot with NCAR Graphics (0 - no plot)

VIS5D - Vis5D output (working in parallel), see "param.v5d"

f) The following parameters define the default binary I/O mode used for model restart and postprocessing, and the precompiler directives for use with additional output formats.

NETCDF 2 - single real precision tape

NETCDF 1 - double real precision tape

4) The following section creates the parameter files used to control the model's physical and numerical implementation. See the source code for a detailed description of each file.

param.nml - grid size parameters and main model options

param.ior - parameters used in the semi-Lagrangian option

param.icw - controls lateral open boundaries (keep the default)

vrtstr.fnc - default vertical stretching function

msg.inc - settings used in parallel mode (defined partially in section 6)

param.v5d - parameters used by Vis5D output

blockdata.anelas - main set of parameters used in physical parameterizations

blockdata.gks - control options used with NCAR Graphics
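Files of this kind are typically written from within a C-shell script using a here-document, the same mechanism the script uses for src.F. The sketch below shows the idea only: the file name `demo.nml` and its single line of content are placeholders and do not reproduce the real param.nml.

```csh
#!/bin/csh -f
# Sketch: write a small parameter file with a here-document.
# demo.nml and its content are placeholders, not EULAG's actual param.nml.
rm -f demo.nml
cat > demo.nml << '\eof'
      parameter (n=64, m=64, l=32)
'\eof'

# Show what was written.
cat demo.nml
```

Because the delimiter is quoted, the shell performs no variable or command substitution on the lines inside the here-document, so the Fortran text is copied to the file unchanged.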

5) The following section creates the main Fortran source file, named "src.F", which is ready to use with Fortran 77/90 compilers.

First, the environment variables are automatically rewritten into precompiler directives:

goto SET_PRECOMPILATOR

RETURN_SET_PRECOMPILATOR:

.....

SET_PRECOMPILATOR:

.....

rm -f src.F

touch src.F

##########################

if ($MESSG == SCH) then

cat >> src.F << '\eof'

#define PARALLEL 1

#define PVM_IO 0

'\eof'

endif

if ($MESSG == MPI) then

cat >> src.F << '\eof'

#define PARALLEL 2

#define PVM_IO 0

'\eof'

endif

if ($MESSG == ONE) then

cat >> src.F << '\eof'

#define PARALLEL 0

#define PVM_IO 0

'\eof'

endif

....

goto RETURN_SET_PRECOMPILATOR

After the automatic setup, the following set of precompiler directives should be adjusted manually:

RETURN_SET_PRECOMPILATOR:

cat >> src.F << '\eof'

C############################################################################

#define SEMILAG 0 /* 0=EULERIAN, 1=SEMI-LAGRANGIAN */

#define MOISTMOD 0 /* 0=DRY, 1=WARM MOIST, 2=ICE A+B (NOT READY YET) */

#define J3DIM 1 /* 0=2D MODEL, 1=3D MODEL */

#define SGS 0 /* 0=NO DIFFUSION, 1=OLD DISSIP, 2=DISSIP AMR */

#define ISEND 2 /* 1=SENDRECV, 2=IRECV+SEND, 3=ISEND+IRECV */

#define PRECF 0 /* 0=PRECON_DF or PRECON_BCZ, 1=PRECON_F */

#define IORFLAG 1 /* 0=tape in serial mode, 1=tape in parallel mode */

#define IOWFLAG 1 /* 0=tape in serial mode, 1=tape in parallel mode */

#define IORTAPE 3 /* 1=default, 2=single, 3=double precision */

#define IOWTAPE 3 /* 1=default, 2=single, 3=double precision */

#define NETCDFD 1 /* 2=single precision, 1=double precision */

#define TIMEPLT 1 /* 1=time counts

6) This section creates additional external files required by the model and updates the previous parameter files with definitions that typically do not require user input or additional changes.

tempr.def/tempw.def - contain definitions for temporary arrays in I/O routines

msg.lnk - location of MPI header files and arrays used in parallel mode

msg.lnp - section used with PVM MPP (obsolete)

param.tfr - array definitions for external data used with EULAG forcings

msg.inc - array definitions in parallel mode

conrec.gks - NCAR Graphics module for contour plots

velvct.gks - NCAR Graphics module for vector plots

colorpl.gks - NCAR Graphics module for color plots

cfftpack.f - fast Fourier analysis

v5d43.c - Vis5D I/O libraries

v5d43.h - Vis5D I/O library header

7) The following section checks known common restrictions on combinations of options. It may help to correct mistakes in the selected options.
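A consistency check of this kind could be sketched as below. The two rules shown are inferred from statements elsewhere in this description (serial mode implies NPE = 1, and NCAR Graphics is not implemented for double precision); whether the script tests exactly these combinations, and the wording of the messages, are assumptions.

```csh
#!/bin/csh -f
# Sketch of option checks; example values and messages are assumptions.
set MESSG  = ONE
set NPE    = 1
set NCARG  = 0
set WORD   = 4
set errors = 0

# Serial mode implies exactly one processor.
if ( $MESSG == ONE && $NPE != 1 ) then
    echo "MESSG = ONE requires NPE = 1"
    @ errors++
endif

# NCAR Graphics is only built for single precision in the compile section.
if ( $NCARG == 1 && $WORD == 8 ) then
    echo "NCARG = 1 is not implemented for WORD = 8"
    @ errors++
endif

if ( $errors > 0 ) exit 1
echo "options look consistent"
```

Collecting all violations before exiting lets the user fix several options in one pass instead of rerunning the script after each message.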

8) The following section prints most of the selected options to the user's window.

9) Section for compiling the Vis5D libraries if the VIS5D option is set to 1.

10) This section creates the batch scripts used to compile the model binaries. The user-selected options are written into an external script file, and the script is executed on the current node, assuming the compiler is available there.

set NETCDF_INCLUDE = '/opt/netcdf/include'

set NETCDF_LIB = '/opt/netcdf/lib'

cp ${NETCDF_INCLUDE}/netcdf.inc .

cp ${NETCDF_INCLUDE}/typesizes.mod .

cp ${NETCDF_INCLUDE}/netcdf.mod .

if ($NPE == 1) then

set PGF90 = '/opt/pgi/bin/pgf90'

set PGF90 = '/opt/pgi/bin/pgf90 -fpic -tp core2-64'

if ( $NCARG == 1 ) then # COMPILE WITH NCAR GRAPHICS

if ( $WORD == 4 ) then

echo "${PGF90} -c -O2 -Mcray=pointer -Mnoframe -Kieee src.F"

${PGF90} -c -O2 -Mcray=pointer -Mnoframe -Kieee src.F

echo "${PGF90} src.o $OBJECTS -O2 -L/opt/pgi/linux86/lib -lnetcdff -lnetcdf"

${PGF90} src.o $OBJECTS -O2 -L/opt/pgi/linux86/lib -L${NETCDF_LIB} -lnetcdff -lnetcdf \

-L/usr/local/ncarg/lib -L/usr/X11R6/lib -L/usr/dt/lib \

-lncarg -lncarg_gks -lncarg_c -lX11 -lm -lf2c

else

echo 'NCAR_GRAPHICS with double prec. not implemented'

echo 'If you need a double precision version have your '

echo 'system administrator contact ncargfx@ncar.ucar.edu'

endif

else # COMPILE WITHOUT NCAR GRAPHICS

if ( $WORD == 4 ) then

echo "${PGF90} -c -O2 -Mcray=pointer -Mnoframe -Kieee src.F"

${PGF90} -c -O2 -Mcray=pointer -Mnoframe -Kieee src.F

echo "${PGF90} src.o $OBJECTS -O2 -L/opt/pgi/linux86/lib -lnetcdff -lnetcdf"

${PGF90} src.o $OBJECTS -O2 -L/opt/pgi/linux86/lib -L${NETCDF_LIB} -lnetcdff -lnetcdf

endif

if ( $WORD == 8 ) then

echo "CURRENTLY NOT AVAILABLE FOR DOUBLE PRECISION IMPLEMETATION ON LINUXCLUSTERS"

echo "${PGF90} -c -O2 -r8 -Mcray=pointer -Mnoframe -Kieee src.F"

${PGF90} -c -O2 -r8 -Mcray=pointer -Mnoframe -Kieee src.F

echo "${PGF90} src.o $OBJECTS -O2 -r8 -L/opt/pgi/linux86/lib -lnetcdff -lnetcdf"

${PGF90} src.o $OBJECTS -O2 -r8 -L/opt/pgi/linux86/lib -L${NETCDF_LIB} -lnetcdff -lnetcdf

endif

endif

else

set PGF90 = '/opt/mpich/bin/mpif90'

if ( $WORD == 4 ) then

echo "${PGF90} -c -O2 -Mcray=pointer -Mnoframe -Kieee src.F"

${PGF90} -c -O2 -Mcray=pointer -Mnoframe -Kieee src.F

echo "${PGF90} src.o $OBJECTS -O2 -L/opt/pgi/linux86/lib -L/opt/mpich/lib -L${NETCDF_LIB} -lfmpich -lmpich -lnetcdff -lnetcdf"

${PGF90} src.o $OBJECTS -O2 -L/opt/pgi/linux86/lib -L/opt/mpich/lib -L${NETCDF_LIB} -lfmpich -lmpich -lnetcdff -lnetcdf

endif

if ( $WORD == 8 ) then

echo "CURRENTLY NOT AVAILABLE FOR DOUBLE PRECISION IMPLEMETATION ON LINUXCLUSTERS"

echo "${PGF90} -c -O2 -r8 -Mcray=pointer -Mnoframe -Kieee src.F"

${PGF90} -c -O2 -r8 -Mcray=pointer -Mnoframe -Kieee src.F

echo "${PGF90} src.o $OBJECTS -O2 -r8 -L/opt/pgi/linux86/lib -L/opt/mpich/lib -L${NETCDF_LIB} -lfmpich -lmpich -lnetcdff -lnetcdf"

${PGF90} src.o $OBJECTS -O2 -r8 -L/opt/pgi/linux86/lib -L/opt/mpich/lib -L${NETCDF_LIB} -lfmpich -lmpich -lnetcdff -lnetcdf

endif

endif

endif
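The listing above repeats the same compile flags in every branch; the only differences are the compiler wrapper (chosen by NPE) and the `-r8` flag (chosen by WORD). The following dry-run sketch makes that selection logic explicit. It only prints the compile command rather than running it, the variable name FFLAGS is introduced here for illustration, and the compiler paths are simply the ones quoted in the listing.

```csh
#!/bin/csh -f
# Dry-run sketch: prints the compile command instead of executing it.
set NPE  = 1
set WORD = 8

# Serial builds use pgf90 directly; parallel builds use the MPI wrapper.
set PGF90 = '/opt/pgi/bin/pgf90'
if ( $NPE != 1 ) set PGF90 = '/opt/mpich/bin/mpif90'

# Double precision adds -r8 to the otherwise identical flags.
set FFLAGS = "-c -O2 -Mcray=pointer -Mnoframe -Kieee"
if ( $WORD == 8 ) set FFLAGS = "-c -O2 -r8 -Mcray=pointer -Mnoframe -Kieee"

echo "${PGF90} ${FFLAGS} src.F"
```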

11) Section responsible for model execution. It is controlled by the MACHINE and BATCH options set at the beginning. In batch mode, two batch files are created on NCAR computers, containing the options for the serial (run_serial) and parallel (run_paral) job modes. See those files for detailed information.
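The choice between the two batch files can be pictured with the sketch below. Only the file names run_serial and run_paral come from the description; the variable RUNFILE is hypothetical, and the `echo` lines stand in for the site-specific submit or run commands, which are not reproduced here.

```csh
#!/bin/csh -f
# Sketch: select the batch file by job mode; echo replaces the real commands.
set BATCH = 1
set MESSG = MPI

if ( $MESSG == ONE ) then
    set RUNFILE = run_serial
else
    set RUNFILE = run_paral
endif

if ( $BATCH == 1 ) then
    echo "would submit $RUNFILE to the queue"
else
    echo "would run the model interactively"
endif
```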

12) The last section may be used for postprocessing, but only if interactive mode is used (BATCH = 0) or the whole script is submitted to the queue with the options described in paragraph 2). If batch mode is used (BATCH = 1), the postprocessing should be done either within the run_paral/run_serial files or offline.
