This web page is intended to serve as a guide through the practice exercises of this

tutorial. Exercises are split into seven main sections, each of which focuses on

a particular aspect of using the MPAS-Atmosphere model.

While going through this practice guide, you may find it helpful to have a copy

of the MPAS-Atmosphere Users' Guide available in another window.

Click here

to open the Users' Guide in a new window.

In case you would like to refer to any of the lecture slides from previous days,

you can open the Tutorial Agenda in another window.

You can proceed through the sections of this practical guide at your own pace. We highly recommend

going through the exercises in order, since later exercises may require the output of earlier ones.

The practical exercises in this tutorial have been tailored to work on

the Cheyenne system.

Cheyenne is an HPC cluster that provides most of the libraries needed by MPAS and its pre- and post-processing

tools through modules. In general, before compiling and running MPAS-Atmosphere on your own system,

you will need an MPI-2 implementation as well as the NetCDF-4, PnetCDF, and PIO libraries.

Beginning with MPAS v8.0, a built-in alternative to the PIO library is available: the Simple MPAS

I/O Layer (SMIOL). SMIOL requires just the PnetCDF library, and there is no need for the NetCDF-4

and PIO libraries when compiling MPAS itself. In this tutorial, we will be using MPAS with the SMIOL

library.

Before going through any of the exercises in subsequent sections, we will need to run

several commands to prepare our environment. Firstly, we will purge all loaded modules to begin

from a known starting point (with no modules loaded); then, we will load several modules to give

access to the GNU compilers as well as an MPI implementation:

$ module purge

$ module load ncarenv/1.3

$ module load gnu/10.1.0

$ module load ncarcompilers/0.5.0

$ module load mpt/2.25

Next, we will load modules for additional libraries needed by the MPAS-Atmosphere model.

Besides the Parallel-NetCDF module (pnetcdf/1.12.2) needed

by MPAS itself, the netcdf-mpi/4.8.1 module is being loaded

to support compilation of pre- and post-processing tools and so that we have access to

the standard ncdump program for showing the contents

of NetCDF files.

$ module load pnetcdf/1.12.2

$ module load netcdf-mpi/4.8.1

At this point, running module list should print the following:

$ module list

Currently Loaded Modules:

1) ncarenv/1.3 3) ncarcompilers/0.5.0 5) pnetcdf/1.12.2

2) gnu/10.1.0 4) mpt/2.25 6) netcdf-mpi/4.8.1

Post-processing exercises will need Python along with the NumPy, PyNGL, NetCDF4,

and miscellaneous other Python packages. On Cheyenne, these are available in

a Conda environment named 'npl'. The npl environment also provides the NCAR Command

Language (NCL). We can load the conda module and activate the npl environment with

the following commands:

$ module load conda

$ conda activate npl

Some Python plotting scripts that will be used in this tutorial rely on

additional Python code that is located in a non-standard location.

In order for these plotting scripts to work, we'll need to set our

PYTHONPATH environment variable using the following command:

$ export PYTHONPATH=/glade/p/mmm/wmr/mpas_tutorial/python_scripts

Additionally, we can load a module to provide access to the 'ncview' tool:

$ module load ncview

When running limited-area simulations, we will need to use Metis to create

our own graph partition files. We can add the gpmetis command

to our PATH with the following:

$ export PATH=/glade/p/mmm/wmr/mpas_tutorial/metis-5.1.0-gcc9.1.0/bin:${PATH}

IMPORTANT NOTE: The 'npl' Conda environment on Cheyenne modifies

our PATH such that the wrong MPI compiler wrappers appear

first. To remedy this, we can simply reload the 'gnu' module as a final step:

$ module reload gnu

If you've logged out of Cheyenne and would like a quick list of commands to set up your

environment again after logging back in, you can copy and paste the following commands

into your terminal:

module purge

module load ncarenv/1.3

module load gnu/10.1.0

module load ncarcompilers/0.5.0

module load mpt/2.25

module load pnetcdf/1.12.2

module load netcdf-mpi/4.8.1

module load conda

conda activate npl

export PYTHONPATH=/glade/p/mmm/wmr/mpas_tutorial/python_scripts

module load ncview

export PATH=/glade/p/mmm/wmr/mpas_tutorial/metis-5.1.0-gcc9.1.0/bin:${PATH}

module reload gnu

We will run all of the practical exercises in this tutorial in our scratch directories,

i.e., /glade/scratch/$USER. To keep the MPAS tutorial exercises separate

from other work, we'll create a sub-directory named "mpas_tutorial":

$ mkdir /glade/scratch/$USER/mpas_tutorial

Running jobs on Cheyenne requires the submission of a job script to a batch queueing system,

which will allocate requested computing resources to your job when they become available.

In general, it's best to avoid running any compute-intensive jobs on the login nodes, and

the practical instructions to follow will guide you in the process of submitting jobs when

necessary.

As a first introduction to running jobs on Cheyenne, there are several key commands worth

noting:

- qcmd -A UMMM0004 - This command runs the specified command on a batch node under

the UMMM0004 project for this tutorial

- qsub - This command submits a job script, which describes a job

to be run on one or more batch nodes; it offers more

control than running a simple command with qcmd

- qstat -u $USER - This command tells you the status of your pending and running jobs.

Note that you may need to wait about 30 seconds for a recently submitted job to show up.

- qdel - This command deletes a queued or running job

At various points in the practical exercises, we'll need to submit jobs to Cheyenne's queueing system

using the qsub command, and after doing so, we may often check on the status

of the job with the qstat command, monitoring the log files produced by the job

once we see that the job has begun to run.

With your shell environment set up as described in this section, you're ready to begin with the

practical exercises of this tutorial!

In this section, our goal is to obtain a copy of the MPAS source code directly from the MPAS

GitHub repository. We'll then compile both the init_atmosphere_model

and atmosphere_model programs before using the former to begin

the processing of time-invariant, terrestrial fields for use in a real-data simulation. While

this processing is taking place, in another terminal window

(don't forget to set up your environment!)

we can practice preparing idealized initial conditions.

Since our default login shell is bash, all shell commands

throughout this tutorial will be written for bash. However, if you prefer a different

shell and don't mind mentally translating, e.g., export commands to

setenv commands, then feel free to switch shells.

As described in the lectures, the MPAS code is distributed directly from the GitHub

repository where it is developed. While it's possible to navigate to

https://github.com/MPAS-Dev/MPAS-Model

and obtain the code by clicking on the "Releases" tab, it's much faster to clone

the repository directly on the command-line. After changing to our /glade/scratch/${USER}/mpas_tutorial directory, we can

make a clone of the MPAS-Model repository:

$ cd /glade/scratch/${USER}/mpas_tutorial

$ git clone https://github.com/MPAS-Dev/MPAS-Model.git

Cloning the MPAS-Model repository may take a few seconds, and at the end of the process,

the output to the terminal should look something like the following:

Cloning into 'MPAS-Model'...

remote: Enumerating objects: 64819, done.

remote: Counting objects: 100% (29/29), done.

remote: Compressing objects: 100% (13/13), done.

remote: Total 64819 (delta 16), reused 25 (delta 16), pack-reused 64790

Receiving objects: 100% (64819/64819), 35.63 MiB | 20.78 MiB/s, done.

Resolving deltas: 100% (48230/48230), done.

Updating files: 100% (1540/1540), done.

We should now have an MPAS-Model directory:

$ ls -l MPAS-Model

total 36

-rw-r--r-- 1 duda ncar 3131 Apr 7 21:01 INSTALL

-rw-r--r-- 1 duda ncar 2311 Apr 7 21:01 LICENSE

-rw-r--r-- 1 duda ncar 27607 Apr 7 21:01 Makefile

-rw-r--r-- 1 duda ncar 2555 Apr 7 21:01 README.md

drwxr-xr-x 14 duda ncar 4096 Apr 7 21:01 src

drwxr-xr-x 4 duda ncar 4096 Apr 7 21:01 testing_and_setup

That's it! We now have a copy of the latest release of the MPAS source code.

As mentioned in the lectures, there are two "cores" that need to be compiled from

the same MPAS source code: the init_atmosphere core and

the atmosphere core.

Before beginning the compilation process, it's worth verifying that we have

MPI compiler wrappers in our path, and that the environment variable pointing to

the Parallel-NetCDF library is set:

$ which mpif90

/glade/u/apps/ch/opt/ncarcompilers/0.5.0/gnu/10.1.0/mpi/mpif90

$ which mpicc

/glade/u/apps/ch/opt/ncarcompilers/0.5.0/gnu/10.1.0/mpi/mpicc

$ echo $PNETCDF

/glade/u/apps/ch/opt/pnetcdf/1.12.2/mpt/2.25/gnu/10.1.0/

If all of the above commands were successful, our shell environment should be

sufficient to allow the MPAS cores to be compiled.
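The checks above can also be bundled into one small helper that reports anything missing. The following is only a sketch, not part of the MPAS distribution: the function name check_build_env is hypothetical, and a POSIX-compatible shell such as bash is assumed.

```shell
# Hypothetical helper (not provided by MPAS or Cheyenne): verify that the given
# tools are on the PATH and that the PNETCDF environment variable is set.
# Returns 0 when everything is found and 1 otherwise.
check_build_env() {
    cbe_missing=0
    for cbe_tool in "$@"; do
        if ! command -v "$cbe_tool" >/dev/null 2>&1; then
            echo "missing from PATH: $cbe_tool" >&2
            cbe_missing=1
        fi
    done
    if [ -z "${PNETCDF:-}" ]; then
        echo "PNETCDF environment variable is not set" >&2
        cbe_missing=1
    fi
    return "$cbe_missing"
}

# Example call on a system set up as described in this guide:
#   check_build_env mpif90 mpicc && echo "environment looks ready"
```

If the helper prints any "missing" message, revisiting the module commands from the environment setup is the likely remedy.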

We'll begin by compiling the init_atmosphere core, producing an executable

named init_atmosphere_model.

IMPORTANT NOTE: Before compiling on Cheyenne specifically, there's an important point that needs explanation!

In order to avoid saturating the Cheyenne login nodes with compilation processes and negatively impacting other

users, we will run our compilation command on a batch node with the special qcmd command.

The qcmd command launches the specified command on a batch node and returns when the command

has finished. So, although you would normally not use "qcmd" when compiling MPAS on other systems, for this tutorial

we will prefix the usual compilation command with qcmd -A UMMM0004 -- (where UMMM0004 is

the computing project we're working under).

After changing to the MPAS-Model directory

$ cd MPAS-Model

we can issue the following command to build the init_atmosphere core with

the gfortran compiler:

$ qcmd -A UMMM0004 -- make -j4 gfortran CORE=init_atmosphere PRECISION=single

Note the inclusion of PRECISION=single on the build command;

the default is to build MPAS cores as double-precision executables, but single-precision

executables require less memory, produce smaller output files, run faster, and (at least

for MPAS-Atmosphere) seem to produce results that are no worse than those of double-precision executables.

In the build command, we have also added the -j4 flag to tell make

to use four tasks to build the code; this should reduce the time to compile the init_atmosphere_model

executable significantly!

After issuing the make command above, compilation should take just a couple of

minutes, and if the compilation was successful, the end of the build process should have produced

messages like the following:

*******************************************************************************

MPAS was built with default single-precision reals.

Debugging is off.

Parallel version is on.

Papi libraries are off.

TAU Hooks are off.

MPAS was built without OpenMP support.

MPAS was built with .F files.

The native timer interface is being used

Using the SMIOL library.

*******************************************************************************

If compilation of the init_atmosphere core was successful, we should also have an executable

file named init_atmosphere_model:

$ ls -l

total 2006

drwxr-xr-x 2 duda ncar 4096 Apr 26 20:32 default_inputs

-rwxr-xr-x 1 duda ncar 2014592 Apr 26 20:32 init_atmosphere_model

-rw-r--r-- 1 duda ncar 3131 Apr 26 20:26 INSTALL

-rw-r--r-- 1 duda ncar 2311 Apr 26 20:26 LICENSE

-rw-r--r-- 1 duda ncar 27864 Apr 26 20:26 Makefile

-rw-r--r-- 1 duda ncar 1379 Apr 26 20:32 namelist.init_atmosphere

-rw-r--r-- 1 duda ncar 2555 Apr 26 20:26 README.md

drwxr-xr-x 14 duda ncar 4096 Apr 26 20:32 src

-rw-r--r-- 1 duda ncar 920 Apr 26 20:32 streams.init_atmosphere

drwxr-xr-x 4 duda ncar 4096 Apr 26 20:26 testing_and_setup

Note that default namelist and streams files for the init_atmosphere core

have also been generated as part of the compilation process: these are the files named

namelist.init_atmosphere and streams.init_atmosphere.

Now, we're ready to compile the atmosphere core. If we try this without first cleaning any

of the common infrastructure code, e.g.,

$ make gfortran CORE=atmosphere PRECISION=single

we would get an error like the following:

*******************************************************************************

The MPAS infrastructure is currently built for the init_atmosphere_model core.

Before building the atmosphere core, please do one of the following.

To remove the init_atmosphere_model_model executable and clean the MPAS infrastructure, run:

make clean CORE=init_atmosphere_model

To preserve all executables except atmosphere_model and clean the MPAS infrastructure, run:

make clean CORE=atmosphere

Alternatively, AUTOCLEAN=true can be appended to the make command to force a clean,

build a new atmosphere_model executable, and preserve all other executables.

*******************************************************************************

After compiling one MPAS core, we need to clean up the shared infrastructure before

compiling a different core. We can clean all parts of the infrastructure that are

needed by the atmosphere core by running the following command:

$ make clean CORE=atmosphere

IMPORTANT NOTE: Because we are compiling on the batch nodes of Cheyenne, and because the batch

nodes do not have internet access, we need to manually obtain lookup tables used by physics schemes when compiling

on Cheyenne with qcmd. In general, the next step is not needed when compiling on, e.g., login nodes that have

internet access.

Before launching the model compilation on a batch node with qcmd, we can manually obtain physics lookup tables

with the following commands from our top-level MPAS-Model directory:

$ cd src/core_atmosphere/physics

$ ./checkout_data_files.sh

$ cd ../../..

Having manually obtained the physics lookup tables, we can proceed to compile the atmosphere

core in single-precision with:

$ qcmd -A UMMM0004 -- make -j4 gfortran CORE=atmosphere PRECISION=single

The compilation of the atmosphere_model executable can take

several minutes or longer to complete (depending on which compiler is used).

Similar to the compilation of the init_atmosphere core, a successful compilation

of the atmosphere core should give the following message:

*******************************************************************************

MPAS was built with default single-precision reals.

Debugging is off.

Parallel version is on.

Papi libraries are off.

TAU Hooks are off.

MPAS was built without OpenMP support.

MPAS was built with .F files.

The native timer interface is being used

Using the SMIOL library.

*******************************************************************************

Now, our MPAS-Model directory should contain an executable file named atmosphere_model,

as well as default namelist.atmosphere and streams.atmosphere

files:

$ ls -lL

total 33018

-rwxr-xr-x 1 duda ncar 5644864 Apr 26 20:37 atmosphere_model

-rwxr-xr-x 1 duda ncar 209256 Apr 26 20:36 build_tables

-rw-r--r-- 1 duda ncar 20580056 Apr 26 20:34 CAM_ABS_DATA.DBL

-rw-r--r-- 1 duda ncar 18208 Apr 26 20:34 CAM_AEROPT_DATA.DBL

drwxr-xr-x 2 duda ncar 4096 Apr 26 20:37 default_inputs

-rw-r--r-- 1 duda ncar 261 Apr 26 20:34 GENPARM.TBL

-rwxr-xr-x 1 duda ncar 2014592 Apr 26 20:32 init_atmosphere_model

-rw-r--r-- 1 duda ncar 3131 Apr 26 20:26 INSTALL

-rw-r--r-- 1 duda ncar 29820 Apr 26 20:34 LANDUSE.TBL

-rw-r--r-- 1 duda ncar 2311 Apr 26 20:26 LICENSE

-rw-r--r-- 1 duda ncar 27864 Apr 26 20:26 Makefile

-rw-r--r-- 1 duda ncar 1774 Apr 26 20:37 namelist.atmosphere

-rw-r--r-- 1 duda ncar 1379 Apr 26 20:32 namelist.init_atmosphere

-rw-r--r-- 1 duda ncar 543744 Apr 26 20:34 OZONE_DAT.TBL

-rw-r--r-- 1 duda ncar 536 Apr 26 20:34 OZONE_LAT.TBL

-rw-r--r-- 1 duda ncar 708 Apr 26 20:34 OZONE_PLEV.TBL

-rw-r--r-- 1 duda ncar 2555 Apr 26 20:26 README.md

-rw-r--r-- 1 duda ncar 847552 Apr 26 20:34 RRTMG_LW_DATA

-rw-r--r-- 1 duda ncar 1694976 Apr 26 20:34 RRTMG_LW_DATA.DBL

-rw-r--r-- 1 duda ncar 680368 Apr 26 20:34 RRTMG_SW_DATA

-rw-r--r-- 1 duda ncar 1360572 Apr 26 20:34 RRTMG_SW_DATA.DBL

-rw-r--r-- 1 duda ncar 4399 Apr 26 20:34 SOILPARM.TBL

drwxr-xr-x 14 duda ncar 4096 Apr 26 20:37 src

-rw-r--r-- 1 duda ncar 1203 Apr 26 20:37 stream_list.atmosphere.diagnostics

-rw-r--r-- 1 duda ncar 927 Apr 26 20:37 stream_list.atmosphere.output

-rw-r--r-- 1 duda ncar 9 Apr 26 20:37 stream_list.atmosphere.surface

-rw-r--r-- 1 duda ncar 1571 Apr 26 20:37 streams.atmosphere

-rw-r--r-- 1 duda ncar 920 Apr 26 20:32 streams.init_atmosphere

drwxr-xr-x 4 duda ncar 4096 Apr 26 20:26 testing_and_setup

-rw-r--r-- 1 duda ncar 22986 Apr 26 20:34 VEGPARM.TBL

Note, also, the presence of files named stream_list.atmosphere.*. These are lists

of output fields that are referenced by the streams.atmosphere file.

The new files like CAM_ABS_DATA.DBL, LANDUSE.TBL, RRTMG_LW_DATA, etc. are look-up tables and other data files used by physics schemes in MPAS-Atmosphere. These files

should all be symbolic links to files in the src/core_atmosphere/physics/physics_wrf/files/ directory.
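To confirm that the physics tables really are symbolic links, a short loop can report each one along with its target. This is a sketch only: the helper name report_table_links is hypothetical, and the file patterns are simply taken from the directory listing above.

```shell
# Hypothetical helper: list the physics table files in a directory and report
# whether each is a symbolic link. Returns 1 if any table is a regular file.
report_table_links() {
    rtl_dir="${1:-.}"
    rtl_rc=0
    for rtl_file in "$rtl_dir"/*.TBL "$rtl_dir"/*.DBL "$rtl_dir"/RRTMG_*_DATA; do
        # Skip patterns that matched nothing (the shell leaves them unexpanded).
        [ -e "$rtl_file" ] || [ -L "$rtl_file" ] || continue
        if [ -L "$rtl_file" ]; then
            echo "link: $rtl_file -> $(readlink "$rtl_file")"
        else
            echo "NOT a link: $rtl_file"
            rtl_rc=1
        fi
    done
    return "$rtl_rc"
}

# Example call from the top-level MPAS-Model directory:
#   report_table_links .
```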

If we have init_atmosphere_model and atmosphere_model

executables, then compilation of both MPAS-Atmosphere cores was successful, and we're ready to proceed

to the next sub-section.

For our first MPAS simulation in this tutorial, we'll use a quasi-uniform 240-km mesh. Since

we'll later be working with a variable-resolution mesh, it will be helpful to maintain separate

sub-directories for these two simulations.

To begin processing of the static, terrestrial fields for the 240-km quasi-uniform mesh, let's

change to our /glade/scratch/${USER}/mpas_tutorial directory and make a new sub-directory named 240km_uniform:

$ cd /glade/scratch/${USER}/mpas_tutorial

$ mkdir 240km_uniform

$ cd 240km_uniform

We can find a copy of the 240-km quasi-uniform mesh at /glade/p/mmm/wmr/mpas_tutorial/meshes/x1.10242.grid.nc.

To save disk space, we'll symbolically link this file into our directory:

$ ln -s /glade/p/mmm/wmr/mpas_tutorial/meshes/x1.10242.grid.nc .

We will also need the init_atmosphere_model executable, as well as copies

of the namelist.init_atmosphere and streams.init_atmosphere

files. We'll make a symbolic link to the executable, and copies of the other files (since we will be changing them):

$ ln -s /glade/scratch/${USER}/mpas_tutorial/MPAS-Model/init_atmosphere_model .

$ cp /glade/scratch/${USER}/mpas_tutorial/MPAS-Model/namelist.init_atmosphere .

$ cp /glade/scratch/${USER}/mpas_tutorial/MPAS-Model/streams.init_atmosphere .
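Because the same link-and-copy pattern is used for each new run directory, it can be wrapped in a small function. The sketch below is illustrative only: the name prepare_run_dir is hypothetical, and the model directory should be given as an absolute path so that the symbolic link resolves correctly.

```shell
# Hypothetical helper: create a run directory, link the init_atmosphere_model
# executable into it, and copy the namelist and streams files (which will be
# edited, so they must be copies rather than links).
prepare_run_dir() {
    prd_model_dir="$1"   # absolute path to the compiled MPAS-Model directory
    prd_run_dir="$2"     # run directory to create or reuse
    mkdir -p "$prd_run_dir" || return 1
    ln -sf "$prd_model_dir/init_atmosphere_model" "$prd_run_dir/" || return 1
    cp "$prd_model_dir/namelist.init_atmosphere" \
       "$prd_model_dir/streams.init_atmosphere" \
       "$prd_run_dir/" || return 1
}

# Example call matching the paths used in this tutorial:
#   prepare_run_dir /glade/scratch/${USER}/mpas_tutorial/MPAS-Model \
#                   /glade/scratch/${USER}/mpas_tutorial/240km_uniform
```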

Before running the init_atmosphere_model program, we'll need to set

up the namelist.init_atmosphere file as described in the lectures.

With your preferred editor, edit the highlighted options in the default

namelist.init_atmosphere file so that they match the following:

&nhyd_model

config_init_case = 7

/

&data_sources

config_geog_data_path = '/glade/p/mmm/wmr/mpas_tutorial/mpas_static/'

config_landuse_data = 'MODIFIED_IGBP_MODIS_NOAH'

config_topo_data = 'GMTED2010'

config_vegfrac_data = 'MODIS'

config_albedo_data = 'MODIS'

config_maxsnowalbedo_data = 'MODIS'

config_supersample_factor = 3

/

&preproc_stages

config_static_interp = true

config_native_gwd_static = true

config_vertical_grid = false

config_met_interp = false

config_input_sst = false

config_frac_seaice = false

/

Note, in particular, the setting of the path to the geographical data sets, and the

settings to enable only the processing of static data and the gravity wave drag ("gwd")

static fields.
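If you would rather script simple namelist changes than make them in an editor, a sed-based helper along the following lines is one option. This is a minimal sketch under stated assumptions: the function name set_namelist_option is hypothetical, each option is assumed to sit on its own line in the form "key = value", and values containing the characters |, & or \ are not handled.

```shell
# Hypothetical helper: set "key = value" in a Fortran namelist file in place.
# Lines that do not define the key are left untouched; if the key is absent,
# the file is unchanged and no error is reported.
set_namelist_option() {
    nl_file="$1"
    nl_key="$2"
    nl_value="$3"
    nl_tmp="$(mktemp)" || return 1
    # Keep the leading whitespace, the key, and the "=", then replace the value.
    if sed "s|^\([[:space:]]*${nl_key}[[:space:]]*=\).*|\1 ${nl_value}|" "$nl_file" > "$nl_tmp"; then
        # Write back through cat so the original file's permissions are kept.
        cat "$nl_tmp" > "$nl_file"
        nl_rc=$?
    else
        nl_rc=1
    fi
    rm -f "$nl_tmp"
    return "$nl_rc"
}

# Example calls for some of the settings shown above:
#   set_namelist_option namelist.init_atmosphere config_init_case 7
#   set_namelist_option namelist.init_atmosphere config_static_interp true
#   set_namelist_option namelist.init_atmosphere config_topo_data "'GMTED2010'"
```

After any scripted edit, it is still worth opening the namelist file to confirm that it matches the settings listed above.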

After editing the namelist.init_atmosphere file, it will also be necessary to tell the

init_atmosphere_model program the name of our input grid file, as well as the name of the

"static" output file that we would like to create. We do this by editing the streams.init_atmosphere

file, where we first set the name of the grid file for the "input" stream:

<immutable_stream name="input"

type="input"

filename_template="x1.10242.grid.nc"

input_interval="initial_only"/>

and then set the name of the static file to be created for the "output" stream:

<immutable_stream name="output"

type="output"

filename_template="x1.10242.static.nc"

packages="initial_conds"

output_interval="initial_only" />

When interpolating geographical fields to a mesh, the "surface" and "lbc" streams can be ignored (but you should

not delete the "surface" or "lbc" streams, or the init_atmosphere_model program will complain).

After editing the streams.init_atmosphere file, we can begin the processing

of the static, time-invariant fields for the 240-km quasi-uniform mesh.

Rather than running the init_atmosphere_model program on a batch node with

qcmd, we will use a job script to run the program, returning a command prompt

to us where we can monitor the progress of the job. To begin, we'll copy a prepared job script to our

working directory:

$ cp /glade/p/mmm/wmr/mpas_tutorial/job_scripts/init_real.pbs .

If you're interested in doing so, you can take a look at the init_real.pbs job script.

Once you're ready, you can submit the script to the queueing system with the qsub command:

$ qsub init_real.pbs

Now, we need to wait for our job to start running; we can check on its status every minute or so with

the qstat command:

$ qstat -u $USER

Once we see that our job is running, as indicated by an "R" in the second-to-last column, e.g.,

$ qstat -u $USER

chadmin1.ib0.cheyenne.ucar.edu:

Req'd Req'd Elap

Job ID Username Queue Jobname SessID NDS TSK Memory Time S Time

--------------- -------- -------- ---------- ------ --- --- ------ ----- - -----

7497852.chadmin duda regular 240km_stat 25299 1 1 -- 02:00 R 00:00

we can check on the progress of the init_atmosphere_model program

by following the messages written to the log.init_atmosphere.0000.out

file:

$ tail -f log.init_atmosphere.0000.out

We should expect to see lines of output similar to the following being printed every few seconds:

/glade/p/mmm/wmr/mpas_tutorial/mpas_static/topo_gmted2010_30s/28801-30000.03601-04800

/glade/p/mmm/wmr/mpas_tutorial/mpas_static/topo_gmted2010_30s/30001-31200.03601-04800

/glade/p/mmm/wmr/mpas_tutorial/mpas_static/topo_gmted2010_30s/31201-32400.03601-04800

/glade/p/mmm/wmr/mpas_tutorial/mpas_static/topo_gmted2010_30s/32401-33600.03601-04800

/glade/p/mmm/wmr/mpas_tutorial/mpas_static/topo_gmted2010_30s/33601-34800.03601-04800

/glade/p/mmm/wmr/mpas_tutorial/mpas_static/topo_gmted2010_30s/34801-36000.03601-04800

/glade/p/mmm/wmr/mpas_tutorial/mpas_static/topo_gmted2010_30s/36001-37200.03601-04800

/glade/p/mmm/wmr/mpas_tutorial/mpas_static/topo_gmted2010_30s/37201-38400.03601-04800

/glade/p/mmm/wmr/mpas_tutorial/mpas_static/topo_gmted2010_30s/38401-39600.03601-04800

/glade/p/mmm/wmr/mpas_tutorial/mpas_static/topo_gmted2010_30s/39601-40800.03601-04800

/glade/p/mmm/wmr/mpas_tutorial/mpas_static/topo_gmted2010_30s/40801-42000.03601-04800

/glade/p/mmm/wmr/mpas_tutorial/mpas_static/topo_gmted2010_30s/42001-43200.03601-04800

/glade/p/mmm/wmr/mpas_tutorial/mpas_static/topo_gmted2010_30s/00001-01200.04801-06000

/glade/p/mmm/wmr/mpas_tutorial/mpas_static/topo_gmted2010_30s/01201-02400.04801-06000

/glade/p/mmm/wmr/mpas_tutorial/mpas_static/topo_gmted2010_30s/02401-03600.04801-06000

/glade/p/mmm/wmr/mpas_tutorial/mpas_static/topo_gmted2010_30s/03601-04800.04801-06000

The processing of the static, time-invariant fields will take some time — perhaps 30 – 45 minutes or so.

If lines similar to the above are being periodically written, we can kill the tail

process with CTRL-C before proceeding to the next sub-section, where we will create

idealized initial conditions while the static, terrestrial fields are interpolated.
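Rather than reading the job state from the qstat table by eye, the second-to-last column can be extracted with awk. The following is a sketch only: the function name job_states is hypothetical, and it assumes the qstat -u output format shown above, where job lines begin with a numeric job ID followed by a period.

```shell
# Hypothetical helper: read "qstat -u" output on standard input and print the
# job ID and state (the second-to-last column) for every job line.
job_states() {
    awk '$1 ~ /^[0-9]+\./ { print $1, $(NF-1) }'
}

# Only query the queueing system when qstat is actually available.
if command -v qstat >/dev/null 2>&1; then
    qstat -u "$USER" | job_states
fi
```

For the sample qstat output shown earlier, this would print the job ID 7497852.chadmin followed by the state R.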

IMPORTANT NOTE: If lines of output are not being periodically written to the terminal

when running the tail command as described above, look for a file

named log.init_atmosphere.0000.err and determine what went wrong before

going on to the next sub-section! Exercises in the second section of this tutorial will require

the successful processing of static, time-invariant fields!

While the init_atmosphere_model program is processing static,

time-invariant fields for a real-data simulation in the 240km_uniform

directory, we can try initializing and running an idealized simulation in a separate directory.

The init_atmosphere_model program supports the creation of idealized

initial conditions for the following cases:

- 3-d baroclinic wave on the sphere

- 3-d supercell thunderstorm on a doubly-periodic Cartesian plane

- 2-d mountain wave in the xz-plane

For each of these idealized cases, prepared input files are available on the MPAS-Atmosphere

download page. We'll go through the process of creating initial conditions for the idealized

supercell case; the general process is similar for the other two cases.

The prepared input files for MPAS-Atmosphere idealized cases may be found by going to

the MPAS Homepage and clicking

on the "MPAS-Atmosphere download" link at the left. Then, clicking on the "Configurations

for idealized test cases" link will take you to the download links for idealized cases.

Although the input files for these idealized cases can be downloaded through the web page,

we can also download, e.g., the supercell input files with wget

once we know the URL. Beginning from our /glade/scratch/${USER}/mpas_tutorial directory, we can download the supercell

input file archive and unpack it with:

$ cd /glade/scratch/${USER}/mpas_tutorial

$ wget http://www2.mmm.ucar.edu/projects/mpas/test_cases/v7.0/supercell.tar.gz

$ tar xzvf supercell.tar.gz

After changing to the resulting supercell directory,

the README file will give an overview of how to initialize and

run the test case. The key points are that we need to symbolically link both

the init_atmosphere_model and atmosphere_model

executables into the supercell directory:

$ ln -s /glade/scratch/${USER}/mpas_tutorial/MPAS-Model/init_atmosphere_model .

$ ln -s /glade/scratch/${USER}/mpas_tutorial/MPAS-Model/atmosphere_model .

Contrary to what is described in the README file, however, we will not run

the init_atmosphere_model and atmosphere_model

programs directly on the command-line.

We will run the init_atmosphere_model program through qcmd with:

$ qcmd -A UMMM0004 -- ./init_atmosphere_model

Once the initial conditions have been created as described in the README

file, it's helpful to verify that there were no errors; the end of

the log.init_atmosphere.0000.out

file should look something like the following:

-----------------------------------------

Total log messages printed:

Output messages = 304

Warning messages = 13

Error messages = 0

Critical error messages = 0

-----------------------------------------
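The same check of the log summary can be automated. The sketch below assumes the summary format shown above ("Error messages = 0" and "Critical error messages = 0", with any amount of surrounding whitespace); the function name log_is_clean is hypothetical.

```shell
# Hypothetical helper: succeed only if the MPAS log file reports zero error
# messages and zero critical error messages in its closing summary.
# Returns 2 if the log file cannot be read.
log_is_clean() {
    lic_log="$1"
    [ -r "$lic_log" ] || return 2
    grep -Eq '^[[:space:]]*Error messages[[:space:]]*=[[:space:]]*0[[:space:]]*$' "$lic_log" &&
    grep -Eq '^[[:space:]]*Critical error messages[[:space:]]*=[[:space:]]*0[[:space:]]*$' "$lic_log"
}

# Example call in a run directory:
#   log_is_clean log.init_atmosphere.0000.out && echo "no errors reported"
```

Warning messages are deliberately not treated as failures here, since the sample log above contains warnings for a successful run.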

Assuming there were no errors, we can run the simulation with 32 processors by first copying

a prepared job script to our working directory and submitting the script with qsub:

$ cp /glade/p/mmm/wmr/mpas_tutorial/job_scripts/supercell.pbs .

$ qsub supercell.pbs

As with the static field interpolation, we need to wait for our job to start running. We can check on its

status every minute or so with the qstat command:

$ qstat -u $USER

Once we see that our job is running, we can move on to the next section.

The simulation may take about 10 minutes to run with 32 MPI tasks. During one of the later

practical sections, if you would like to revisit the simulation to see the results, running

the supercell.ncl NCL script should produce plots

of the time evolution of several model fields:

$ ncl supercell.ncl

Having compiled both the init_atmosphere and atmosphere

cores, and also having interpolated time-invariant, terrestrial fields to create a "static" file for

real-data simulations, we'll interpolate atmospheric and land-surface fields to create complete initial

conditions for an MPAS simulation in this section. We'll also process 10 days' worth of SST and sea-ice

data to update these fields periodically as the model runs. Then, we'll start a five-day simulation,

which will take around an hour to complete. Once the model has started running, we can use any extra time

to compile the convert_mpas utility program.

If you've reached this point and the static field interpolation from Section 1.3 has not yet

finished, you can first go through Section 2.4, before returning to Sections 2.1 - 2.3 after

the static field interpolation has completed.

In an earlier section, we started the process of interpolating static geographical

fields to the 240-km, quasi-uniform mesh in the 240km_uniform

directory. If this interpolation was successful, this directory should contain two new

files: log.init_atmosphere.0000.out and

x1.10242.static.nc.

$ cd /glade/scratch/${USER}/mpas_tutorial/240km_uniform

$ ls -l log.init_atmosphere.0000.out x1.10242.static.nc

The end of the log.init_atmosphere.0000.out file should show

that there were no errors:

-----------------------------------------

Total log messages printed:

Output messages = 2915

Warning messages = 10

Error messages = 0

Critical error messages = 0

-----------------------------------------

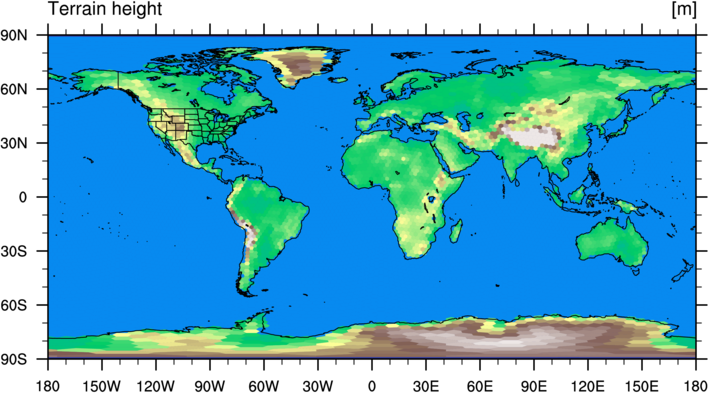

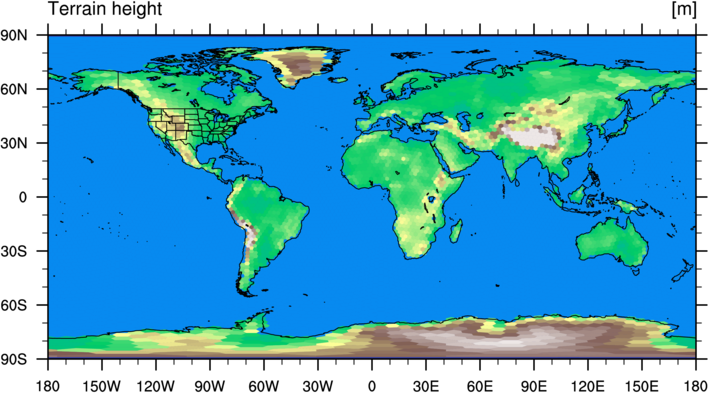

We can also make a plot of the terrain elevation field in the x1.10242.static.nc

file with a Python script named plot_terrain.py. We'll discuss the use of Python

and NCL scripts for visualization in a later section, so for now we can simply execute the following

commands to plot the terrain field:

$ cp /glade/p/mmm/wmr/mpas_tutorial/python_scripts/plot_terrain.py .

$ ./plot_terrain.py x1.10242.static.nc

$ display terrain.png

Running the script may take 10 or 15 seconds, but the result should be a figure that looks

like the following:

Now that we have convinced ourselves that the processing of static, geographical fields was successful,

we can proceed to interpolate atmosphere and land-surface initial conditions for our 240-km simulation.

Generally, it is necessary to use the ungrib component of the WRF Pre-Processing System to prepare

atmospheric datasets in the intermediate format used by MPAS. For the purposes of this tutorial,

we will simply assume that these data have already been processed with the ungrib program in

/glade/p/mmm/wmr/mpas_tutorial/met_data/.

To interpolate the ERA5 analysis valid at 0000 UTC on 10 September 2014 to our 240-km mesh, we will

symbolically link the ERA5:2014-09-10_00 file to our working directory:

$ ln -s /glade/p/mmm/wmr/mpas_tutorial/met_data/ERA5:2014-09-10_00 .

Then, we will need to edit the namelist.init_atmosphere as described in the lectures

to instruct the init_atmosphere_model program to interpolate the ERA5 data to our mesh.

The critical items to set in the namelist.init_atmosphere file are:

&nhyd_model

config_init_case = 7

config_start_time = '2014-09-10_00:00:00'

/

&dimensions

config_nvertlevels = 41

config_nsoillevels = 4

config_nfglevels = 38

config_nfgsoillevels = 4

/

&data_sources

config_met_prefix = 'ERA5'

config_use_spechumd = false

/

&vertical_grid

config_ztop = 30000.0

config_nsmterrain = 1

config_smooth_surfaces = true

config_dzmin = 0.3

config_nsm = 30

config_tc_vertical_grid = true

config_blend_bdy_terrain = false

/

&interpolation_control

config_extrap_airtemp = 'lapse-rate'

/

&preproc_stages

config_static_interp = false

config_native_gwd_static = false

config_vertical_grid = true

config_met_interp = true

config_input_sst = false

config_frac_seaice = true

/

You may find it easier to copy the default namelist.init_atmosphere file from

/glade/scratch/${USER}/mpas_tutorial/MPAS-Model/namelist.init_atmosphere before making the edits highlighted

above.

When editing the namelist.init_atmosphere file, the key changes are to

the starting time for our real-data simulation, the prefix of the intermediate file that

contains the ERA5 atmospheric and land-surface fields, and the pre-processing stages that will be run.

For this simulation, we'll also use just 41 vertical layers in the atmosphere, rather than the default

of 55, so that the simulation will run faster.
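If you would rather script these namelist edits than make them by hand, the following is a minimal Python sketch. The helper name set_namelist_option and the sample text are hypothetical and are not part of MPAS; the sketch assumes that each option sits on its own line in the form name = value.

```python
import re

def set_namelist_option(text, key, value):
    """Return namelist text with the value of `key` replaced by `value`.

    Assumes one 'key = value' pair per line; raises KeyError if `key` is absent.
    """
    pattern = re.compile(rf"^(\s*{re.escape(key)}\s*=\s*).*$", re.MULTILINE)
    new_text, count = pattern.subn(lambda m: m.group(1) + value, text)
    if count == 0:
        raise KeyError(key)
    return new_text

# Hypothetical excerpt; the starting values are placeholders, not MPAS defaults.
sample = """&nhyd_model
    config_init_case = 0
    config_start_time = 'YYYY-MM-DD_hh:mm:ss'
/
&dimensions
    config_nvertlevels = 0
/
"""

edited = set_namelist_option(sample, "config_init_case", "7")
edited = set_namelist_option(edited, "config_start_time", "'2014-09-10_00:00:00'")
edited = set_namelist_option(edited, "config_nvertlevels", "41")
print(edited)
```

To apply this to a real file, one would read namelist.init_atmosphere into a string, call the helper for each option, and write the result back; keeping a backup copy of the original file is a sensible precaution.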

After editing the namelist.init_atmosphere file, we will also need to set

the name of the input file in the streams.init_atmosphere file to

the name of the "static" file that we just produced:

<immutable_stream name="input"

type="input"

filename_template="x1.10242.static.nc"

input_interval="initial_only"/>

and we will also need to set the name of the output file, which will be the MPAS real-data initial

conditions file:

<immutable_stream name="output"

type="output"

filename_template="x1.10242.init.nc"

packages="initial_conds"

output_interval="initial_only" />
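As a quick sanity check on the stream settings, the file names attached to each stream can be listed with Python's standard XML parser. This is only a sketch: the embedded XML is a trimmed, hypothetical stand-in for a streams file, the function name stream_filenames is made up for illustration, and the sketch assumes the file is well-formed XML with a single enclosing streams element.

```python
import xml.etree.ElementTree as ET

# Hypothetical, trimmed-down streams file; a real file holds more streams and attributes.
STREAMS_XML = """<streams>
<immutable_stream name="input"
                  type="input"
                  filename_template="x1.10242.static.nc"
                  input_interval="initial_only"/>
<immutable_stream name="output"
                  type="output"
                  filename_template="x1.10242.init.nc"
                  packages="initial_conds"
                  output_interval="initial_only"/>
</streams>"""

def stream_filenames(xml_text):
    """Map each stream's name to its filename_template attribute."""
    root = ET.fromstring(xml_text)
    return {
        elem.get("name"): elem.get("filename_template")
        for elem in root.iter()
        if elem.get("name") is not None and elem.get("filename_template") is not None
    }

names = stream_filenames(STREAMS_XML)
print(names)
```

For a file on disk, the same function could be fed the text read from streams.init_atmosphere.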

Once we've made the above changes to the namelist.init_atmosphere and

streams.init_atmosphere files, we can run the init_atmosphere_model

program by submitting our init_real.pbs script again:

$ qsub init_real.pbs

As before, we can check on the status of our job periodically by running

$ qstat -u $USER

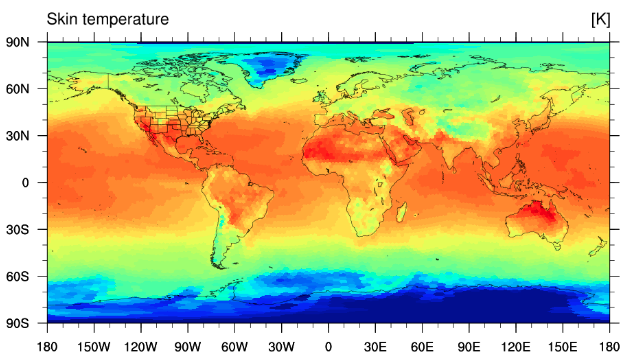

Once the job begins to run, the program should take just a minute or two to finish, and the result should be

an x1.10242.init.nc file and a new log.init_atmosphere.0000.out

file. Assuming no errors were reported at the end of the log.init_atmosphere.0000.out file,

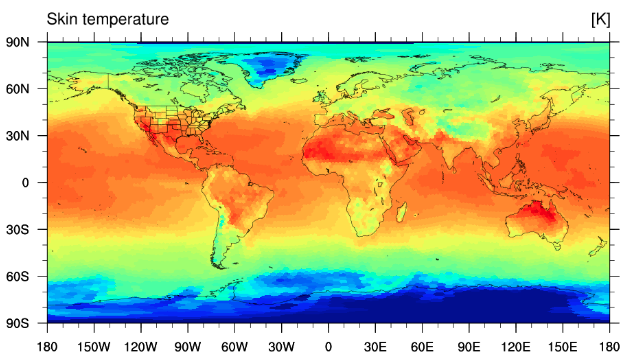

we can plot, e.g., the skin temperature field in the x1.10242.init.nc file using

the plot_tsk.py Python script:

$ cp /glade/p/mmm/wmr/mpas_tutorial/python_scripts/plot_tsk.py .

$ ./plot_tsk.py x1.10242.init.nc

$ display tsk.png

Again, we'll examine the use of Python and NCL scripts for visualization later, so for now we only need

to verify that a plot like the following is produced:

If a plot like the above is produced, then we have completed the generation of an initial conditions file for our

240-km real-data simulation!

Because MPAS-Atmosphere — at least, when run as a stand-alone model — does not

contain prognostic equations for the sea-surface temperature (SST) and sea-ice fraction, these fields would remain

constant if not updated from an external source; this is, of course, not realistic, and it

will generally impact the quality of longer model simulations. Consequently, for MPAS-Atmosphere

simulations longer than roughly a week, it is typically necessary

to periodically update the SST field in the model. For real-time

simulations, this is generally not an option, but it is feasible for retrospective

simulations, where we have observed SST analyses available to us.

In order to create an SST update file, we will make use of

a sequence of intermediate files containing SST and sea-ice analyses that are available

in /glade/p/mmm/wmr/mpas_tutorial/met_data. Before proceeding, we'll link all of these SST intermediate

files into our working directory:

$ ln -sf /glade/p/mmm/wmr/mpas_tutorial/met_data/SST* .

Following Section 8.1 of the MPAS-Atmosphere Users' Guide, we must edit the namelist.init_atmosphere

file to specify the range of dates for which we have SST data, as well as the frequency at which the SST

intermediate files are available. The key namelist options that must be set are shown below;

other options can be ignored.

&nhyd_model

config_init_case = 8

config_start_time = '2014-09-10_00:00:00'

config_stop_time = '2014-09-20_00:00:00'

/

&data_sources

config_sfc_prefix = 'SST'

config_fg_interval = 86400

/

&preproc_stages

config_static_interp = false

config_native_gwd_static = false

config_vertical_grid = false

config_met_interp = false

config_input_sst = true

config_frac_seaice = true

/

Note in particular that we have set the config_init_case variable to 8! This is

the initialization case used to create surface update files, instead of real-data initial

conditions files.

As before, we also need to edit the streams.init_atmosphere file, this time setting the

name of the SST update file to be created by the "surface" stream, as well as the frequency

at which this update file should contain records:

<immutable_stream name="surface"

type="output"

filename_template="x1.10242.sfc_update.nc"

filename_interval="none"

packages="sfc_update"

output_interval="86400"/>

Note that we have set both the filename_template and output_interval attributes

of the "surface" stream. The output_interval should match the interval specified in the namelist

for the config_fg_interval variable. The other streams ("input", "output", and "lbc") can remain

unchanged — the input file should still be set to the name of the static file.

After setting up the namelist.init_atmosphere and streams.init_atmosphere

files, we're ready to run the init_atmosphere_model program by submitting our init_real.pbs script again:

$ qsub init_real.pbs

As before, we can check on the status of our job periodically by running

$ qstat -u $USER

After the job completes, as before, the log.init_atmosphere.0000.out file should report

that there were no errors. Additionally, the x1.10242.sfc_update.nc file should have

been created.
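Checking the log by eye works well for one run; for repeated runs, the check can be scripted. The sketch below assumes that the log ends with summary lines of the form "Error messages = 0" and "Critical error messages = 0". That layout is an assumption, the sample text is hypothetical, and the pattern should be adjusted to match what your log.init_atmosphere.0000.out file actually shows.

```python
import re

def message_counts(log_text):
    """Collect '<label> messages = <n>' summary lines into a dict."""
    pattern = re.compile(r"^\s*(\w[\w ]*? messages)\s*=\s*(\d+)\s*$", re.MULTILINE)
    return {label: int(count) for label, count in pattern.findall(log_text)}

# Hypothetical log tail, used only to exercise the parser.
sample_tail = """ Total log messages printed:
    Output messages =                  200
    Warning messages =                   3
    Error messages =                     0
    Critical error messages =            0
"""

counts = message_counts(sample_tail)
clean = (counts.get("Error messages", 0) == 0
         and counts.get("Critical error messages", 0) == 0)
print(counts, "clean" if clean else "errors reported")
```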

We can confirm that the x1.10242.sfc_update.nc file contains the requested valid times for the

SST and sea-ice fields by printing the "xtime" variable from the file; in MPAS, the "xtime" variable

in a netCDF file is always used to store the times at which records in the file are valid.
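Before looking in the file, it can help to work out which valid times to expect from the namelist settings. The sketch below assumes one record per config_fg_interval from config_start_time through config_stop_time, inclusive; the function name expected_xtimes is made up for illustration.

```python
from datetime import datetime, timedelta

def expected_xtimes(start, stop, interval_seconds):
    """List valid times from start to stop (inclusive), one per interval."""
    fmt = "%Y-%m-%d_%H:%M:%S"
    t, end = datetime.strptime(start, fmt), datetime.strptime(stop, fmt)
    step = timedelta(seconds=interval_seconds)
    times = []
    while t <= end:
        times.append(t.strftime(fmt))
        t += step
    return times

times = expected_xtimes("2014-09-10_00:00:00", "2014-09-20_00:00:00", 86400)
print(len(times))
for t in times:
    print(t)
```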

$ ncdump -v xtime x1.10242.sfc_update.nc

This ncdump command should print the following times:

xtime =

"2014-09-10_00:00:00 ",

"2014-09-11_00:00:00 ",

"2014-09-12_00:00:00 ",

"2014-09-13_00:00:00 ",

"2014-09-14_00:00:00 ",

"2014-09-15_00:00:00 ",

"2014-09-16_00:00:00 ",

"2014-09-17_00:00:00 ",

"2014-09-18_00:00:00 ",

"2014-09-19_00:00:00 ",

"2014-09-20_00:00:00 " ;

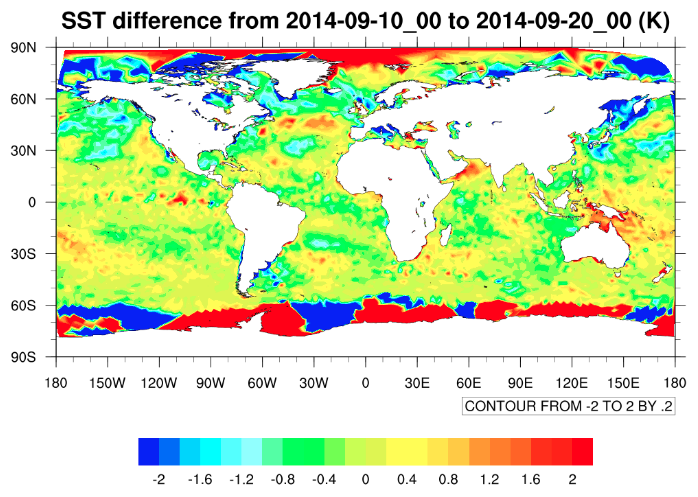

If the times shown above were printed, then we have successfully created an SST and sea-ice

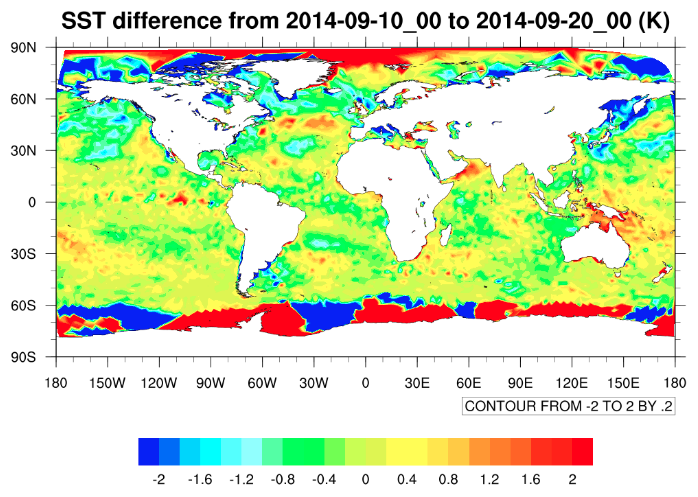

update file for MPAS-Atmosphere. As a final check, we can use

the Python script from /glade/p/mmm/wmr/mpas_tutorial/python_scripts/plot_delta_sst.py to plot the difference in

the SST field between the last time in the surface update file and the first time in the file.

Because the surface update file contains no information about the latitude and longitude of

grid cells, we provide two command-line arguments when running this script: one to give

the name of the grid file, which contains cell latitude and longitude fields, and the second to

specify the surface update file:

$ cp /glade/p/mmm/wmr/mpas_tutorial/python_scripts/plot_delta_sst.py .

$ ./plot_delta_sst.py x1.10242.static.nc x1.10242.sfc_update.nc

$ display delta_sst.png

This script may take a minute or so to run.

In this plot, we have masked out the SST differences over land, since the values of the field

over land are not representative of actual SST differences, but may represent differences in,

e.g., skin temperature or 2-m air temperature, depending on the source of the SST analyses.

Assuming that an initial condition file and a surface update file have been

created, we're ready to run the MPAS-Atmosphere model itself!

Working from our 240km_uniform directory, we'll begin by creating

a symbolic link to the atmosphere_model executable that we compiled

in /glade/scratch/${USER}/mpas_tutorial/MPAS-Model. We'll also make copies of the namelist.atmosphere

and streams.atmosphere files. Recall from when we compiled the model that there are

stream_list.atmosphere.* files that accompany the streams.atmosphere

file; we'll copy these, too.

$ ln -s /glade/scratch/${USER}/mpas_tutorial/MPAS-Model/atmosphere_model .

$ cp /glade/scratch/${USER}/mpas_tutorial/MPAS-Model/namelist.atmosphere .

$ cp /glade/scratch/${USER}/mpas_tutorial/MPAS-Model/streams.atmosphere .

$ cp /glade/scratch/${USER}/mpas_tutorial/MPAS-Model/stream_list.atmosphere.* .

You'll also recall that there were many look-up tables and other data files that are needed by various

physics parameterizations in the model. Before we can run MPAS-Atmosphere, we'll need to create symbolic links

to these files as well in our working directory:

$ ln -s /glade/scratch/${USER}/mpas_tutorial/MPAS-Model/src/core_atmosphere/physics/physics_wrf/files/* .

Before running the model, we will need to edit the namelist.atmosphere file,

which is the namelist for the MPAS-Atmosphere model itself. The default

namelist.atmosphere is set up for a 120-km mesh; in order to run the model

on a different mesh, there is one key parameter that depends on the model resolution:

- config_dt — the model integration time step (delta-t), in seconds.

As will be described in the lectures, the model integration step is generally chosen based on

the finest horizontal resolution in a simulation domain.

To tell the model the date and time at which integration will begin (important, e.g., for computing

solar radiation parameters), and to specify the length of the integration to be run, there are

two other parameters that must be set for each simulation:

- config_start_time — the start time of the integration.

- config_run_duration — the length of the integration.

Lastly, when running the MPAS-Atmosphere model in parallel, we must tell the model the prefix

of the filenames that contain mesh partitioning information for different MPI task counts:

- config_block_decomp_file_prefix — the file prefix (to be suffixed with processor count)

of the file containing mesh partitioning information.

Accordingly, we must edit at least these parameters in the namelist.atmosphere file; other

parameters are described in Appendix B of the MPAS-Atmosphere Users' Guide, and do not necessarily

need to be changed:

&nhyd_model

config_dt = 1200.0

config_start_time = '2014-09-10_00:00:00'

config_run_duration = '5_00:00:00'

/

&decomposition

config_block_decomp_file_prefix = 'x1.10242.graph.info.part.'

/

For a relatively coarse mesh like the 240-km mesh, we can also call the radiation schemes less frequently

to allow the model to run a little more quickly. Let's set the radiation calling interval to 1 hour:

&physics

config_radtlw_interval = '01:00:00'

config_radtsw_interval = '01:00:00'

/
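To see how these settings relate to one another, a little arithmetic is enough. The sketch below uses only the values set above and assumes that each radiation scheme is called once per radiation interval.

```python
from datetime import timedelta

dt = timedelta(seconds=1200)             # config_dt
run_duration = timedelta(days=5)         # config_run_duration = '5_00:00:00'
radiation_interval = timedelta(hours=1)  # config_radtlw_interval, config_radtsw_interval

# Dividing one timedelta by another with // gives a whole number of steps.
total_steps = run_duration // dt
steps_per_radiation_call = radiation_interval // dt

# The radiation interval should be a whole multiple of the time step.
evenly_divisible = radiation_interval % dt == timedelta(0)

print(total_steps, steps_per_radiation_call, evenly_divisible)
```

With a 1200-second time step, the five-day run takes 360 steps, and a one-hour radiation interval corresponds to every third step.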

After changing the parameters shown above in the namelist.atmosphere file, we must also set

the name of the initial condition file, the name of the surface update file, and the interval

at which we would like to read the surface update file in the streams.atmosphere file:

<immutable_stream name="input"

type="input"

filename_template="x1.10242.init.nc"

input_interval="initial_only"/>

<stream name="surface"

type="input"

filename_template="x1.10242.sfc_update.nc"

filename_interval="none"

input_interval="86400">

<file name="stream_list.atmosphere.surface"/>

</stream>

Having set up the namelist.atmosphere and streams.atmosphere files,

we're almost ready to run the model in parallel. You'll recall from the lectures that we need to supply

a graph partition file to tell MPAS how the horizontal domain is partitioned among MPI tasks. Accordingly,

we'll create a symbolic link to the partition file for

36 MPI tasks for the 240-km mesh in our working directory:

$ ln -s /glade/p/mmm/wmr/mpas_tutorial/meshes/x1.10242.graph.info.part.36 .

As a general rule, a mesh with N

grid columns should be run on at most N/160 processors in MPAS-Atmosphere to make

efficient use of the processors. So, for the mesh in this exercise, which has 10242 grid

columns, we could in principle use up to about 64 MPI tasks while still making relatively

efficient use of the computing resources. For this exercise, however, we will use a single,

full Cheyenne node with 36 cores.
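The rule of thumb above is easy to check with a few lines of Python. The function name max_efficient_tasks is made up for illustration; the partition file name is built by appending the task count to the prefix set in config_block_decomp_file_prefix.

```python
def max_efficient_tasks(n_columns, columns_per_task=160):
    """Upper bound on MPI tasks from the 'at most N/160' rule of thumb."""
    return n_columns // columns_per_task

n_columns = 10242   # grid columns in the 240-km mesh
tasks = 36          # one full Cheyenne node, as used in this exercise
limit = max_efficient_tasks(n_columns)

# Partition file = prefix from the namelist + number of MPI tasks.
partition_file = f"x1.10242.graph.info.part.{tasks}"

print(limit, tasks <= limit, partition_file, n_columns / tasks)
```

With 36 tasks, each task handles roughly 285 grid columns, comfortably above the 160-column guideline.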

Now, we should be able to run MPAS using 36 MPI tasks. Before submitting a job with qsub,

we can copy a prepared job script to our working directory.

$ cp /glade/p/mmm/wmr/mpas_tutorial/job_scripts/run_model.pbs .

$ qsub run_model.pbs

We can check on the status of our job with qstat -u $USER. Once the model

begins to run, it will write information about its progress to

the log.atmosphere.0000.out file. We can use tail -f

to follow the updates to the log file as the model runs:

$ tail -f log.atmosphere.0000.out

If the model has started up successfully and begun to run, we should see messages

like this

Begin timestep 2014-09-10_06:00:00

--- time to run the LW radiation scheme L_RADLW =T

--- time to run the SW radiation scheme L_RADSW =T

--- time to run the convection scheme L_CONV =T

--- time to apply limit to accumulated rainc and rainnc L_ACRAIN =F

--- time to apply limit to accumulated radiation diags. L_ACRADT =F

--- time to calculate additional physics_diagnostics =F

split dynamics-transport integration 3

global min, max w -0.177687 0.512867

global min, max u -112.127 112.008

Timing for integration step: 5.78077 s

written for each timestep that the model takes. If not, a log.atmosphere.0000.err

file may have been created, and if so, it may have messages to indicate what might have gone wrong.

The model simulation should take less than about five minutes to complete.

As the model runs, you may notice

that several netCDF output files are being created:

- diag.2014-09-*.nc — These files contain mostly 2-d diagnostic fields that were listed in the stream_list.atmosphere.diagnostics file.

- history.2014-09-*.nc — These files contain mostly 3-d prognostic and diagnostic fields from the model.

- restart.2014-09-*.nc — These are model restart files that are essentially checkpoints of the model state; a simulation can be re-started from any of these checkpoint/restart files.

If the model has successfully begun running, we can move to the next sub-section to obtain and compile

the convert_mpas utility for interpolating model output files to a lat-lon

grid for quick visualization.

We saw in earlier sections that Python scripts may be used to visualize fields in MPAS

netCDF files. Although these scripts can produce publication-quality figures, many

times all that is needed is a way to quickly inspect the fields in a file.

The convert_mpas program is designed to interpolate

fields from the unstructured MPAS mesh to a regular lat-lon grid for easy checking,

e.g., with ncview.

As with the MPAS-Model code, the convert_mpas code may be

obtained most easily by cloning a GitHub repository. Working from our /glade/scratch/${USER}/mpas_tutorial directory,

we'll download the code in this way:

$ cd /glade/scratch/${USER}/mpas_tutorial

$ git clone https://github.com/mgduda/convert_mpas.git

$ cd convert_mpas

The convert_mpas utility is compiled via a simple Makefile.

In general, one can edit the Makefile in the main convert_mpas

directory.

We can build the convert_mpas utility by simply running make

with no command-line arguments:

$ make

If compilation was successful, there should now be an executable file named convert_mpas.

So that we can run this program from any of our working directories, we'll add our current directory to our

shell ${PATH} environment variable:

$ export PATH=/glade/scratch/${USER}/mpas_tutorial/convert_mpas:${PATH}

You may like to take a few minutes to read through the README.md file to familiarize

yourself with the usage of the convert_mpas program. We'll have a chance to exercise

the convert_mpas utility in the next section.

In the previous section, we were able to make a five-day, real-data simulation on

a 240-km quasi-uniform mesh. In this section, we'll practice preparing a variable-resolution,

240km – 48km mesh with the grid_rotate tool, after which we'll

proceed as we did for the quasi-uniform case to process static, terrestrial fields on this

variable-resolution mesh.

As we saw in the first section, processing the static fields can take some time, so while

this processing is taking place, we'll practice using the convert_mpas

tool to interpolate output from our 240-km quasi-uniform simulation to a lat-lon grid for

quick visualization.

Once the static field processing has completed for the 240km – 48km variable-resolution mesh,

we'll interpolate the initial conditions fields and start a simulation on this mesh. This

simulation will take about half an hour to complete, so we'll want to get this running so that it

will have completed by the next section.

A common theme with MPAS is the need to obtain source code from git

repositories hosted on GitHub. The grid_rotate utility

is no exception in this regard. One difference, however, is that the grid_rotate

code is contained in a repository that houses many different tools for MPAS cores,

including the MPAS-Albany Land Ice core, the MPAS-Ocean core, and the MPAS-Sea Ice core.

The grid_rotate utility is found in the MPAS-Tools repository,

which we can clone into our mpas_tutorial working directory

with the following commands:

$ cd /glade/scratch/${USER}/mpas_tutorial

$ git clone https://github.com/MPAS-Dev/MPAS-Tools.git

Within the MPAS-Tools repository, the grid_rotate code resides

in the mesh_tools/grid_rotate sub-directory. We can change to

this directory and build the grid_rotate

utility with the make command.

$ cd MPAS-Tools/mesh_tools/grid_rotate

$ make

So that we can more easily run the grid_rotate program from any working directory, we'll add

our current working directory to our shell ${PATH}.

$ export PATH=/glade/scratch/${USER}/mpas_tutorial/MPAS-Tools/mesh_tools/grid_rotate:${PATH}

Now that we have a means of rotating the location of refinement in a variable-resolution MPAS

mesh, we can begin the process of preparing such a mesh. For this tutorial, we'll be using

a mesh that refines from 240-km grid spacing down to about 48-km grid spacing over an elliptic

region.

When creating initial conditions and running the 240-km quasi-uniform simulation, we worked

in a sub-directory named 240km_uniform. For our variable-resolution

simulation, we'll work in a sub-directory named 240-48km_variable

to keep our work separated. Let's create the 240-48km_variable directory

and link in the variable-resolution mesh from /glade/p/mmm/wmr/mpas_tutorial/meshes into that directory:

$ cd /glade/scratch/${USER}/mpas_tutorial

$ mkdir 240-48km_variable

$ cd 240-48km_variable

$ ln -s /glade/p/mmm/wmr/mpas_tutorial/meshes/x5.30210.grid.nc .

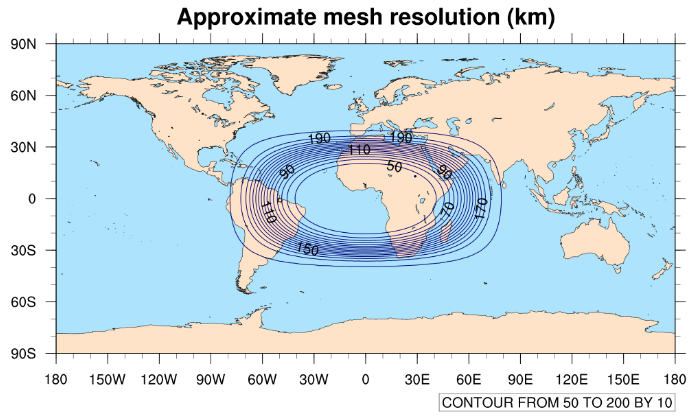

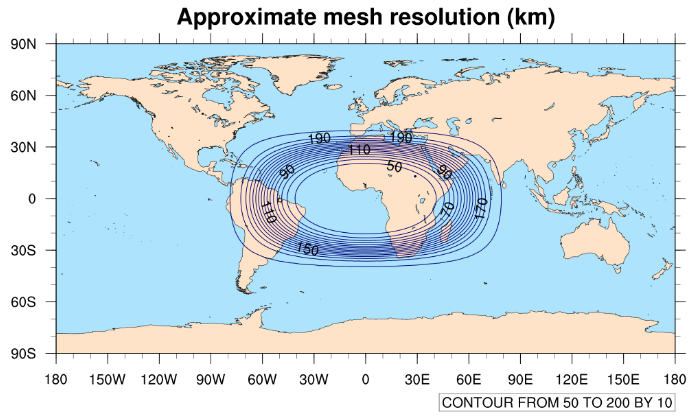

The x5.30210.grid.nc mesh contains 30210 grid cells and refines by

a factor of five (from 240-km grid spacing to 48-km grid spacing). The refined region is

centered over (0.0 lat, 0.0 lon), and we can get a quick idea of the size of the refinement

by plotting contours of the approximate mesh resolution with

the mesh_resolution.ncl NCL script:

$ cp /glade/p/mmm/wmr/mpas_tutorial/ncl_scripts/mesh_resolution.ncl .

$ export FNAME=x5.30210.grid.nc

$ ncl mesh_resolution.ncl

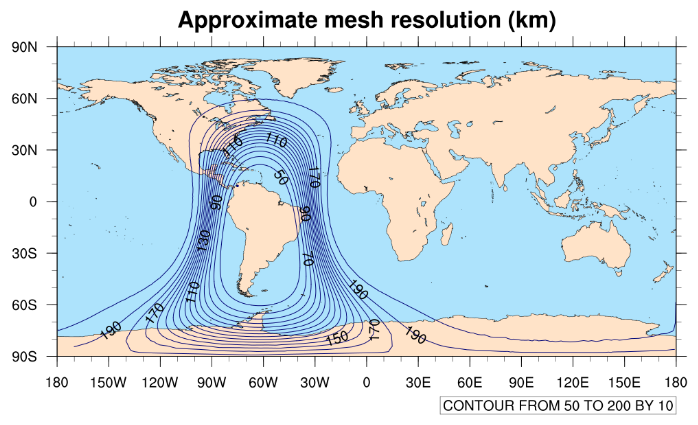

When running the mesh_resolution.ncl script, a plot like

the one below should be produced in a file named 'mesh_resolution.png', which

you can view with the display command:

$ display mesh_resolution.png

Using the grid_rotate tool, we can change the location and orientation

of this refinement to an area of our choosing. Before running the grid_rotate

program, we need a copy of the input namelist that is read by this program:

$ cp /glade/scratch/${USER}/mpas_tutorial/MPAS-Tools/mesh_tools/grid_rotate/namelist.input .

As described in the lectures, we'll set the parameters in the namelist.input

file to relocate the refined region in the mesh. For example, to move the refinement over South America,

we might use:

&input

config_original_latitude_degrees = 0

config_original_longitude_degrees = 0

config_new_latitude_degrees = -19.5

config_new_longitude_degrees = -62

config_birdseye_rotation_counter_clockwise_degrees = 90

/

Feel free to place the refinement wherever you would like!

After making changes to the namelist.input file, we can rotate

the x5.30210.grid.nc file to create a new "grid" file with any name

we choose. For example:

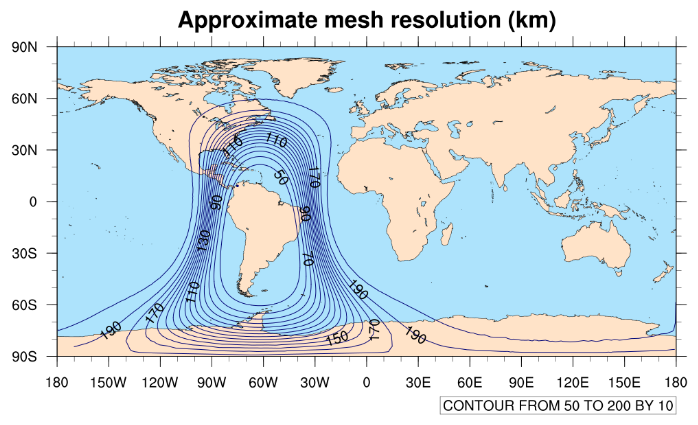

$ grid_rotate x5.30210.grid.nc SouthAmerica.grid.nc

As with the original x5.30210.grid.nc file, we can plot the approximate

resolution of our rotated grid file with commands like the following:

$ export FNAME=SouthAmerica.grid.nc

$ ncl mesh_resolution.ncl

Of course, the name SouthAmerica.grid.nc should be replaced with the name that

you have chosen for your rotated grid when setting the FNAME environment

variable. In our example, the resulting plot looks like the one below.

It may take some iteration to get the refinement just where you would like it. You can go back and

edit the namelist.input file, then re-run the grid_rotate

program, and finally, re-run the ncl mesh_resolution.ncl command until

you are satisfied!

Interpolating static, geographical fields to a variable-resolution MPAS mesh works

in essentially the same way as it does for a quasi-uniform mesh. So, the steps taken

in this section should seem familiar — they mirror what we did in Section 1.3!

To begin the process, we'll symbolically link the init_atmosphere_model

executable into our 240-48km_variable directory, and we'll copy

the default namelist.init_atmosphere and streams.init_atmosphere

files as well:

$ ln -s /glade/scratch/${USER}/mpas_tutorial/MPAS-Model/init_atmosphere_model .

$ cp /glade/scratch/${USER}/mpas_tutorial/MPAS-Model/namelist.init_atmosphere .

$ cp /glade/scratch/${USER}/mpas_tutorial/MPAS-Model/streams.init_atmosphere .

As before, we'll set the following variables in the namelist.init_atmosphere file:

&nhyd_model

config_init_case = 7

/

&data_sources

config_geog_data_path = '/glade/p/mmm/wmr/mpas_tutorial/mpas_static/'

config_landuse_data = 'MODIFIED_IGBP_MODIS_NOAH'

config_topo_data = 'GMTED2010'

config_vegfrac_data = 'MODIS'

config_albedo_data = 'MODIS'

config_maxsnowalbedo_data = 'MODIS'

config_supersample_factor = 3

/

&preproc_stages

config_static_interp = true

config_native_gwd_static = true

config_vertical_grid = false

config_met_interp = false

config_input_sst = false

config_frac_seaice = false

/

In editing the streams.init_atmosphere file, we'll choose

the filename for the "input" stream to match whatever name we chose for our rotated

grid file:

<immutable_stream name="input"

type="input"

filename_template="SouthAmerica.grid.nc"

input_interval="initial_only"/>

and we'll set the filename for the "output" stream to follow the same

naming convention, but using "static" instead of "grid":

<immutable_stream name="output"

type="output"

filename_template="SouthAmerica.static.nc"

packages="initial_conds"

output_interval="initial_only" />

With these changes, we're ready to run the init_atmosphere_model program.

In order to submit the job to the queuing system, we can copy the same prepared job script as in

Section 1.3 and submit it with qsub:

$ cp /glade/p/mmm/wmr/mpas_tutorial/job_scripts/init_real.pbs .

$ qsub init_real.pbs

As before, processing of the static, geographical fields may take roughly 30 – 45 minutes to complete.

While the static, geographical fields are being processed for our 240km – 48km variable-resolution

mesh, we can experiment with the use of the convert_mpas utility to interpolate

output from our 240-km, quasi-uniform simulation.

If you didn't have a chance to download and compile the convert_mpas program

back in Section 2.4, you will need to go back to that section before proceeding with the rest of this

section.

We'll change directories to the 240km_uniform directory, and we

may also need to add the directory containing the convert_mpas utility to our

shell ${PATH} variable again:

$ cd /glade/scratch/${USER}/mpas_tutorial/240km_uniform

$ export PATH=/glade/scratch/${USER}/mpas_tutorial/convert_mpas:${PATH}

In the 240km_uniform directory, we should see output files from MPAS that

are named history*nc and diag*nc:

$ ls -l history*nc diag*nc

-rw-r--r-- 1 duda ncar 4525528 Apr 8 23:56 diag.2014-09-10_00.00.00.nc

-rw-r--r-- 1 duda ncar 4525528 Apr 8 23:56 diag.2014-09-10_03.00.00.nc

...

-rw-r--r-- 1 duda ncar 4525528 Apr 8 23:59 diag.2014-09-14_21.00.00.nc

-rw-r--r-- 1 duda ncar 4525528 Apr 8 23:59 diag.2014-09-15_00.00.00.nc

-rw-r--r-- 1 duda ncar 94449804 Apr 8 23:56 history.2014-09-10_00.00.00.nc

-rw-r--r-- 1 duda ncar 94449804 Apr 8 23:56 history.2014-09-10_06.00.00.nc

...

-rw-r--r-- 1 duda ncar 94449804 Apr 8 23:59 history.2014-09-14_18.00.00.nc

-rw-r--r-- 1 duda ncar 94449804 Apr 8 23:59 history.2014-09-15_00.00.00.nc
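One way to confirm that the simulation wrote everything it should have is to generate the file names we expect and compare them with the directory listing. The sketch below assumes 3-hourly "diag" files and 6-hourly "history" files, which is inferred from the listing above rather than from the stream definitions; the function name expected_files is made up for illustration.

```python
from datetime import datetime, timedelta

def expected_files(prefix, start, stop, interval_hours):
    """Names like '<prefix>.YYYY-MM-DD_hh.mm.ss.nc' from start to stop, inclusive."""
    names = []
    t = start
    while t <= stop:
        names.append(f"{prefix}.{t:%Y-%m-%d_%H.%M.%S}.nc")
        t += timedelta(hours=interval_hours)
    return names

start = datetime(2014, 9, 10)
stop = datetime(2014, 9, 15)
diag_files = expected_files("diag", start, stop, 3)
history_files = expected_files("history", start, stop, 6)

print(len(diag_files), diag_files[0], diag_files[-1])
print(len(history_files), history_files[0], history_files[-1])
```

In the working directory, any expected name for which os.path.exists returns False would point to a missing output file.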

As a first try, we can interpolate the fields from the final model "history" file,

history.2014-09-15_00.00.00.nc, to a 0.5-degree lat-lon grid

with the command:

$ convert_mpas history.2014-09-15_00.00.00.nc

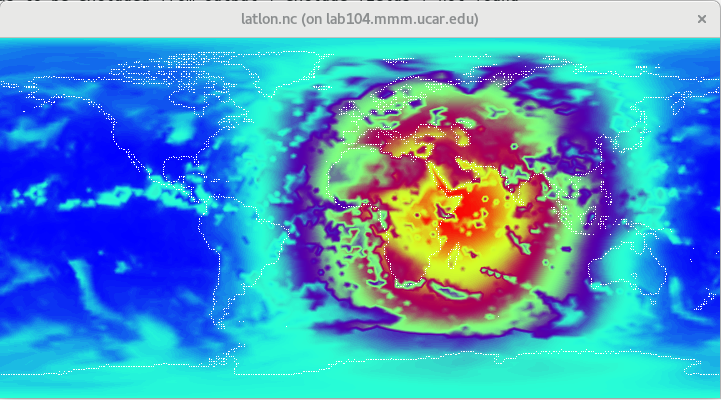

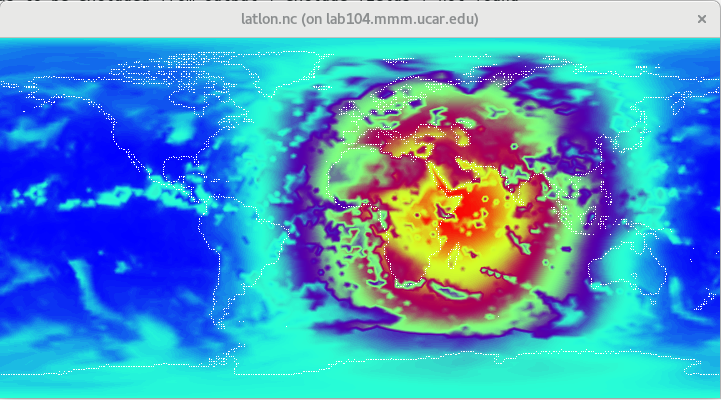

The interpolation should take less than a minute, and the result should be a file named latlon.nc.

Let's take a look at this file with the ncview utility:

$ ncview latlon.nc

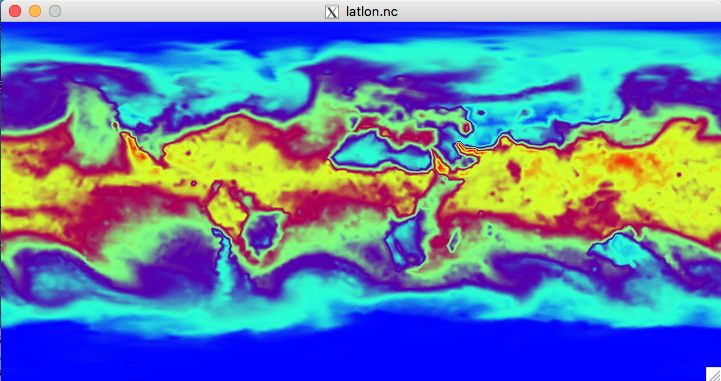

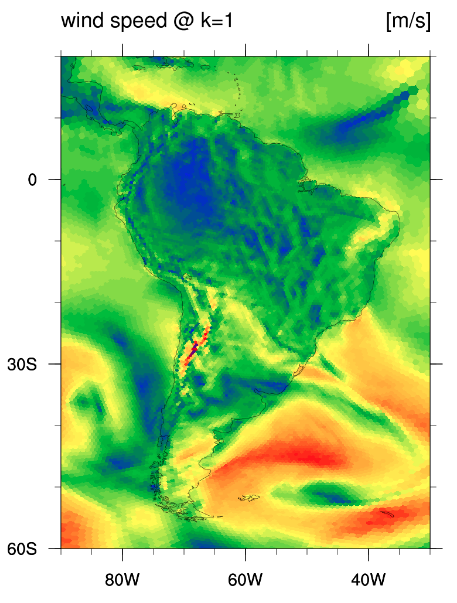

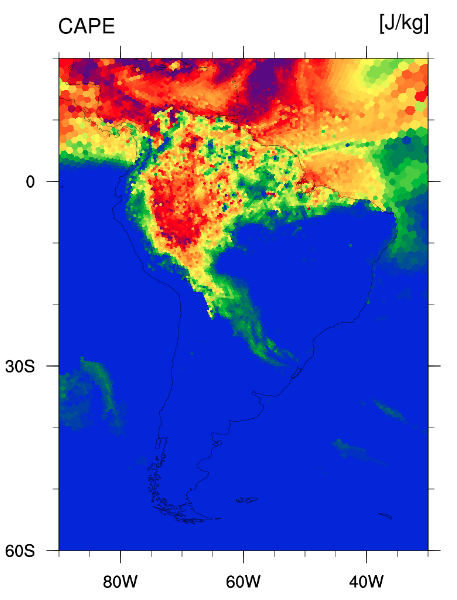

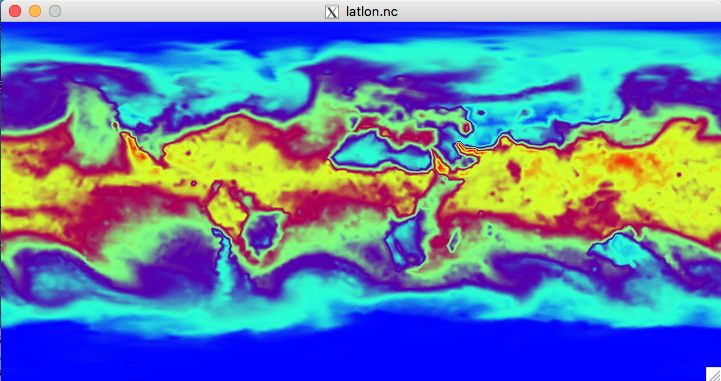

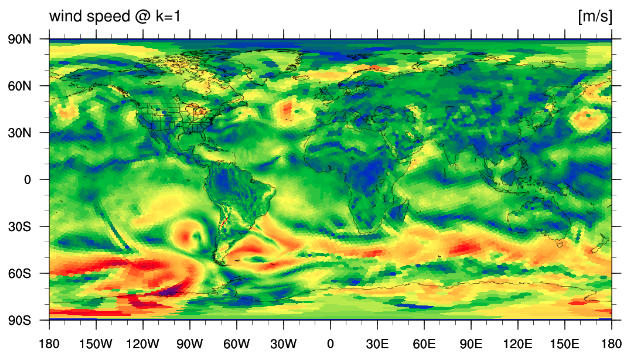

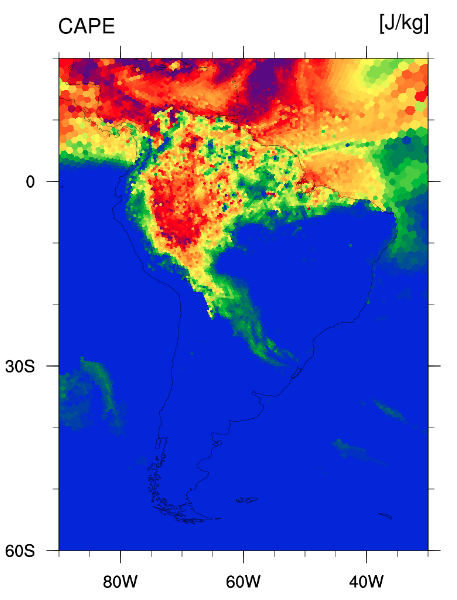

After launching ncview we can, e.g., view the qv field, which may look something

like the plot below at the lowest model level.

Feel free to browse some of the other fields in the file by selecting them from the "2d vars" and "3d vars" buttons.

All of the fields are described in Appendix D of the MPAS-Atmosphere Users' Guide.

When we're done viewing the latlon.nc file, we'll delete it. The convert_mpas

utility attempts to append to existing latlon.nc files when converting a new MPAS netCDF file,

and this often doesn't work as expected, so to avoid confusion, it's best to remove the

latlon.nc file we just created with

$ rm latlon.nc

before converting another MPAS output file.

As we just saw, it can take up to a minute to interpolate a single MPAS "history" file. If we are only interested

in a small subset of the fields in an MPAS file, we can restrict the interpolation to just those fields by providing

a file named include_fields in our working directory.

Using an editor of your choice, create a new text file named include_fields with the following

contents:

u10

v10

precipw

After saving the file, we can re-run the convert_mpas program as before to produce

a latlon.nc file with just the 10-meter surface winds and precipitable water:

$ convert_mpas history.2014-09-15_00.00.00.nc

$ ncview latlon.nc

The convert_mpas program should have taken just a few seconds to run; if not, make

sure the latlon.nc file was deleted before re-running convert_mpas.

The only fields in the new latlon.nc file should be "precipw", "u10", and "v10".

Rather than converting just a single file at a time, we can convert multiple MPAS output files and write the results

to the same latlon.nc file. The key to doing this is to use the first command-line argument

to convert_mpas to specify a file that contains horizontal (SCVT) mesh information, and to

let subsequent command-line arguments specify MPAS netCDF files that contain fields to be remapped.

To interpolate the "precipw", "u10", and "v10" fields for our entire 5-day simulation, we can use the following

commands:

$ rm latlon.nc

$ convert_mpas x1.10242.init.nc history.*.nc

$ ncview latlon.nc

Note, again, that when multiple command-line arguments are given, convert_mpas uses the first

argument only to obtain SCVT mesh information; all remaining command-line arguments specify

netCDF files with fields to be interpolated!
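The argument order matters enough that it is worth spelling out in code. The sketch below builds the argument list in Python, with the mesh file first and the field files after it in sorted order. The function name convert_mpas_command is made up for illustration, and the demonstration uses empty stand-in files in a temporary directory rather than real model output.

```python
import glob
import os
import tempfile

def convert_mpas_command(mesh_file, field_pattern):
    """Build the argument list: mesh file first, then field files in sorted order."""
    field_files = sorted(glob.glob(field_pattern))
    return ["convert_mpas", mesh_file, *field_files]

# Demonstrate with empty stand-in files in a scratch directory.
with tempfile.TemporaryDirectory() as tmp:
    for stamp in ("2014-09-10_06.00.00", "2014-09-10_00.00.00"):
        open(os.path.join(tmp, f"history.{stamp}.nc"), "w").close()
    command = convert_mpas_command("x1.10242.init.nc",
                                   os.path.join(tmp, "history.*.nc"))
    demo_args = [os.path.basename(arg) for arg in command]

print(demo_args)
```

In a real working directory, the list could be passed to subprocess.run(command, check=True) after removing any existing latlon.nc file.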

When viewing a netCDF file with multiple time records, you can step through the time periods in

ncview with the "forward" and "backward" buttons to the right and left

of the "pause" button:

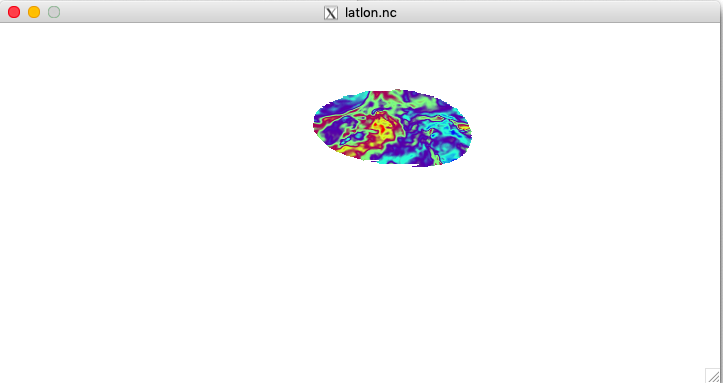

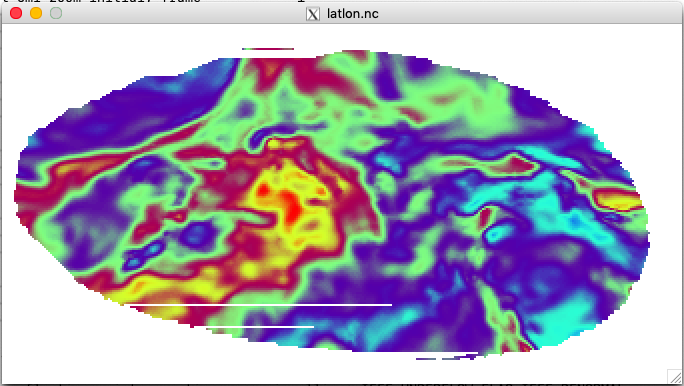

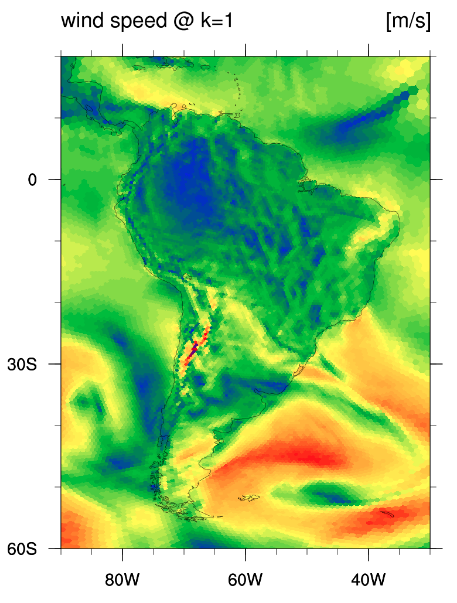

As a final exercise in viewing MPAS output via the convert_mpas and ncview

programs, we'll zoom in on a part of the globe, looking at the 250 hPa vertical vorticity field over East Asia on a 1/10-degree

lat-lon grid.

To do this, we will first need to edit the include_fields file so that it contains

just the following line:

vorticity_250hPa

Then, we'll create a new file named target_domain with the editor of our choice. This file

should contain the following lines:

startlat=30

endlat=60

startlon=90

endlon=150

nlat=300

nlon=600
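These values can be checked against the 1/10-degree spacing we are aiming for. The sketch below assumes that the grid spacing is simply the extent of the domain divided by the number of points in each direction; the function name parse_target_domain is made up for illustration.

```python
# Values as given for the target_domain file above.
target_domain = """startlat=30
endlat=60
startlon=90
endlon=150
nlat=300
nlon=600
"""

def parse_target_domain(text):
    """Parse 'key=value' lines into a dict of floats."""
    pairs = (line.split("=", 1) for line in text.splitlines() if "=" in line)
    return {key.strip(): float(value) for key, value in pairs}

d = parse_target_domain(target_domain)
dlat = (d["endlat"] - d["startlat"]) / d["nlat"]
dlon = (d["endlon"] - d["startlon"]) / d["nlon"]
print(dlat, dlon)
```

Both spacings work out to 0.1 degree, so the target grid has the same resolution in latitude and longitude.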

After editing the include_fields file and creating the target_domain

file, we can remove the old latlon.nc file and use the convert_mpas

program to interpolate the 250 hPa vorticity field from our diag.*.nc files:

$ rm latlon.nc

$ convert_mpas x1.10242.init.nc diag*nc

$ ncview latlon.nc

If the processing of the static, geographical fields for the 240km – 48km variable-resolution mesh has not

yet finished, feel free to experiment more with the convert_mpas program!

Once the processing of the static, geographical fields has completed in the 240-48km_variable

directory, we should have a new "static" file as well as a log.init_atmosphere.0000.out

file. In our example, the static file is named SouthAmerica.static.nc, but your

file should have whatever name was specified in the definition of the "output" stream in

the streams.init_atmosphere file.

Before proceeding, it's a good idea to verify that there were no errors reported in

the log.init_atmosphere.0000.out file. We can also make a plot of the terrain height

field as we did for the 240-km quasi-uniform simulation:

$ cp /glade/p/mmm/wmr/mpas_tutorial/python_scripts/plot_terrain.py .

$ ./plot_terrain.py SouthAmerica.static.nc

Once we have convinced ourselves that the static, geographical processing was successful, we can proceed as

we did for the 240-km quasi-uniform simulation. We'll create a symbolic link to the ERA5

intermediate file valid at 0000 UTC on 10 September 2014:

$ ln -s /glade/p/mmm/wmr/mpas_tutorial/met_data/ERA5:2014-09-10_00 .

As before, we'll set up the namelist.init_atmosphere file to instruct

the init_atmosphere_model program to interpolate meteorological and land-surface

initial conditions:

&nhyd_model

config_init_case = 7

config_start_time = '2014-09-10_00:00:00'

/

&dimensions

config_nvertlevels = 41

config_nsoillevels = 4

config_nfglevels = 38

config_nfgsoillevels = 4

/

&data_sources

config_met_prefix = 'ERA5'

config_use_spechumd = false

/

&vertical_grid

config_ztop = 30000.0

config_nsmterrain = 1

config_smooth_surfaces = true

config_dzmin = 0.3

config_nsm = 30

config_tc_vertical_grid = true

config_blend_bdy_terrain = false

/

&interpolation_control

config_extrap_airtemp = 'lapse-rate'

/

&preproc_stages

config_static_interp = false

config_native_gwd_static = false

config_vertical_grid = true

config_met_interp = true

config_input_sst = false

config_frac_seaice = true

/

Besides the namelist.init_atmosphere file, we must also edit the XML

I/O configuration file, streams.init_atmosphere, to specify the name

of the static file that will serve as input to the init_atmosphere_model program, as well as

the name of the MPAS-Atmosphere initial condition file to be created. Specifically, we must set

the filename_template for the "input" stream to the name of our static file:

<immutable_stream name="input"

type="input"

filename_template="SouthAmerica.static.nc"

input_interval="initial_only"/>

and we must set the name of the initial condition file to be created in the "output" stream:

<immutable_stream name="output"

type="output"

filename_template="SouthAmerica.init.nc"

packages="initial_conds"

output_interval="initial_only" />

After editing the namelist.init_atmosphere and streams.init_atmosphere

files, and linking the intermediate file to our run directory, we can run

the init_atmosphere_model

program with qcmd:

$ qcmd -A UMMM0004 -- ./init_atmosphere_model

The init_atmosphere_model program should take just a few minutes to run.

Once the init_atmosphere_model program finishes, as always, it is best to verify that there were

no error messages reported in the log.init_atmosphere.0000.out file. We should also have a file

whose name matches the filename we specified for the "output" stream in the streams.init_atmosphere

file. In our example, we should have a file named SouthAmerica.init.nc.
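The two checks described above can be combined into one small shell function. This is only a minimal sketch: the function name check_run is hypothetical (it is not part of MPAS), and it assumes that error lines in the log contain the upper-case string ERROR.

```shell
# Hypothetical helper (not part of MPAS): check a log file and an output file.
# Assumption: error lines in the log contain the upper-case string ERROR.
check_run() {
    # $1 = log file, e.g. log.init_atmosphere.0000.out
    # $2 = expected output file, e.g. SouthAmerica.init.nc
    if [ ! -f "$1" ]; then
        echo "missing log: $1"
        return 1
    fi
    if grep -q 'ERROR' "$1"; then
        echo "errors reported in $1"
        return 1
    fi
    # -s is true only if the file exists and is not empty
    if [ ! -s "$2" ]; then
        echo "missing or empty output: $2"
        return 1
    fi
    echo "ok"
}
```

After pasting the function into the shell, running check_run log.init_atmosphere.0000.out SouthAmerica.init.nc should print "ok" when the log is clean and the initial condition file is present.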

If all was successful, we are ready to run a variable-resolution MPAS simulation. For this simulation, we won't prepare

an SST and sea-ice surface update file for the model; however, if you would like to try doing so following what was done

in Section 2.2, feel free to do so!

Assuming that an initial condition file, and, optionally, a surface update file have been

created, we're ready to run a variable-resolution, 240km – 48km simulation with refinement

over a part of the globe that interests us.

As a first step, you may recall that we need to create symbolic links in our working directory

to the atmosphere_model executable, as well as to the physics look-up tables:

$ ln -s /glade/scratch/${USER}/mpas_tutorial/MPAS-Model/atmosphere_model .

$ ln -s /glade/scratch/${USER}/mpas_tutorial/MPAS-Model/src/core_atmosphere/physics/physics_wrf/files/* .

We will also need copies of the namelist.atmosphere,

streams.atmosphere, and stream_list.atmosphere.*

files:

$ cp /glade/scratch/${USER}/mpas_tutorial/MPAS-Model/namelist.atmosphere .

$ cp /glade/scratch/${USER}/mpas_tutorial/MPAS-Model/streams.atmosphere .

$ cp /glade/scratch/${USER}/mpas_tutorial/MPAS-Model/stream_list.atmosphere.* .

Next, we will need to edit the namelist.atmosphere file. Recall from

before that the default namelist.atmosphere is set up for a 120-km mesh;

in order to run the model on a different mesh, there is one key parameter that depends on

the model resolution:

- config_dt — the model integration time step (delta-t), in seconds.

The model timestep, config_dt, must be set to a value that is appropriate

for the smallest grid distance in the mesh. In our case, we need to choose a time step that is

suitable for a 48-km grid distance; a value of 240 seconds is reasonable. In the lecture on MPAS

dynamics we will say more about how to choose the model timestep.
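As a rough illustration of where a value like 240 seconds comes from, the sketch below applies a commonly quoted rule of thumb: a time step in seconds of about 5 to 6 times the smallest grid distance in kilometres. That rule is not stated in this section and is taken here as an assumption; the function name suggest_dt is hypothetical.

```shell
# Assumed rule of thumb (not stated in this section): a time step in seconds of
# roughly 5 to 6 times the smallest grid distance in km.
suggest_dt() {
    # $1 = smallest grid distance in whole km
    # $2 = multiplying factor (optional, defaults to 5)
    echo $(( $1 * ${2:-5} ))
}
```

With this definition, suggest_dt 48 prints 240, matching the value used in this exercise, and suggest_dt 48 6 prints 288 as the upper end of the assumed range.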

To tell the model the date and time at which integration will begin, and to specify the length

of the integration to be run, there are two other parameters that must be set for each simulation:

- config_start_time — the start time of the integration.

- config_run_duration — the length of the integration.

In order to make maximal use of the time between this practical session and the next, we'll try

to run a 5-day simulation starting on 2014-09-10_00.

Lastly, when running the MPAS-Atmosphere model in parallel, we must tell the model the prefix

of the filenames that contain mesh partitioning information for different MPI task counts:

- config_block_decomp_file_prefix — the file prefix (to be suffixed with processor count)

of the file containing mesh partitioning information.

For the 240km – 48km variable-resolution mesh, we can find a mesh partition file with 36 partitions

at /glade/p/mmm/wmr/mpas_tutorial/meshes/x5.30210.graph.info.part.36. Let's symbolically link that file

to our run directory now before specifying a value of 'x5.30210.graph.info.part.'

for the config_block_decomp_file_prefix option in our namelist.atmosphere file.

$ ln -s /glade/p/mmm/wmr/mpas_tutorial/meshes/x5.30210.graph.info.part.36 .
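Because the prefix is suffixed with the MPI task count, the model needs a file named prefix-plus-task-count to be present in the run directory. The sketch below checks for such a file; the function name have_partition_file is hypothetical, and the example assumes that the job will run with 36 MPI tasks.

```shell
# Hypothetical helper: check that a partition file exists for a given task count.
# The file name is the namelist prefix followed directly by the number of MPI tasks.
have_partition_file() {
    # $1 = value of config_block_decomp_file_prefix
    # $2 = number of MPI tasks
    if [ -e "$1$2" ]; then
        echo "found $1$2"
    else
        echo "no partition file $1$2"
        return 1
    fi
}
```

For example, have_partition_file x5.30210.graph.info.part. 36 should report that the file was found once the symbolic link above has been created.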

Before reading further, you may wish to try making the changes to the namelist.atmosphere

yourself. Once you've done so, you can verify that the options shown below match the changes you have made.

&nhyd_model

config_dt = 240.0

config_start_time = '2014-09-10_00:00:00'

config_run_duration = '5_00:00:00'

/

&decomposition

config_block_decomp_file_prefix = 'x5.30210.graph.info.part.'

/
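If you would rather script these namelist changes than make them in an editor, something like the following could be used. This is only a sketch: the function name set_nml is hypothetical, and it assumes that each option sits on its own line in the form "name = value" and that the new value contains no '|', '&', or backslash characters.

```shell
# Hypothetical helper: replace the value of one option in a namelist file.
# Assumes one option per line ("name = value") and a value without '|', '&' or '\'.
set_nml() {
    # $1 = namelist file; $2 = option name; $3 = new value
    # String values must include their own single quotes.
    sed "s|^\([[:space:]]*$2[[:space:]]*=\).*|\1 $3|" "$1" > "$1.tmp" \
        && mv "$1.tmp" "$1"
}
```

For example, set_nml namelist.atmosphere config_dt 240.0 sets the time step, and set_nml namelist.atmosphere config_run_duration "'5_00:00:00'" sets the run length. It is still worth opening the file afterwards to confirm that the values match those shown above.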

After changing the parameters shown above in the namelist.atmosphere file, we must also set

the name of the initial condition file in the streams.atmosphere file:

<immutable_stream name="input"

type="input"

filename_template="SouthAmerica.init.nc"

input_interval="initial_only"/>

Optionally, if you decided to create an SST update file for this variable-resolution simulation

in the previous section, you can also edit the "surface" stream in the streams.atmosphere

file to specify the name of that surface update file, as well as the interval at which the model

should read updates from it:

<stream name="surface"

type="input"

filename_template="SouthAmerica.sfc_update.nc"

filename_interval="none"

input_interval="86400">

<file name="stream_list.atmosphere.surface"/>

</stream>

Having set up the namelist.atmosphere and streams.atmosphere

files, we're ready to run the model in parallel.

We can copy the same job script as we used for the quasi-uniform

240-km simulation and submit it as usual with qsub.

$ cp /glade/p/mmm/wmr/mpas_tutorial/job_scripts/run_model.pbs .

$ qsub run_model.pbs

Once the model begins to run, you may like to "tail" the log.atmosphere.0000.out

file with the "-f" option to follow the progress of the MPAS simulation:

$ tail -f log.atmosphere.0000.out

If the model has started up successfully and begun to run, we should see messages

like this:

Begin timestep 2014-09-10_00:12:00

--- time to run the LW radiation scheme L_RADLW =F

--- time to run the SW radiation scheme L_RADSW =F

--- time to run the convection scheme L_CONV =T

--- time to apply limit to accumulated rainc and rainnc L_ACRAIN =F

--- time to apply limit to accumulated radiation diags. L_ACRADT =F

--- time to calculate additional physics_diagnostics =F

split dynamics-transport integration 3

global min, max w -1.06611 0.722390

global min, max u -117.781 118.251

Timing for integration step: 4.18681 s
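Since each model time step writes one "Begin timestep" line to the log, counting those lines gives a rough measure of progress. The sketch below compares that count with the total number of steps implied by the run length and time step; the function name progress is hypothetical.

```shell
# Hypothetical helper: report how many time steps have begun so far.
progress() {
    # $1 = log file, e.g. log.atmosphere.0000.out
    # $2 = run length in whole days
    # $3 = config_dt in whole seconds (240, not 240.0)
    total_steps=$(( $2 * 86400 / $3 ))
    begun_steps=$(grep -c 'Begin timestep' "$1")
    echo "$begun_steps of $total_steps timesteps"
}
```

For this 5-day simulation with a 240-second time step, the total is 5 × 86400 / 240 = 1800 steps, so progress log.atmosphere.0000.out 5 240 reports the number of steps begun out of 1800.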

This simulation will take about half an hour to run. Now may be a convenient time to ask any questions that you may have!

Having gained experience in running global simulations, we are now well positioned to

run a limited-area (i.e., a regional) simulation with MPAS-Atmosphere. We will begin

by defining the simulation domain using the MPAS-Limited-Area tool to cover any part

of the globe that interests us. Then, we will subset the "static" fields from an existing

60-km quasi-uniform static file.

Once we are satisfied with the geographic coverage of our regional static fields, we

will interpolate initial and lateral boundary conditions from the 0.5-degree CFSv2 dataset.

Given ICs and LBCs for our regional domain, we can begin running a 3-day regional

simulation from 2019-09-01 0000 UTC through 2019-09-04 0000 UTC.

After our simulation has run (or, even after the first few model output files are

available), we will practice using NCL and the convert_mpas tool to visualize

the regional output.

To begin the work of defining a regional simulation domain for MPAS-Atmosphere, we will

first obtain a copy of the MPAS-Limited-Area tool. As with the MPAS model code and MPAS-Tools

code, we will clone the MPAS-Limited-Area repository from GitHub. From within our /glade/scratch/${USER}/mpas_tutorial

directory, we can do this with the following commands:

$ cd /glade/scratch/${USER}/mpas_tutorial

$ git clone https://github.com/MPAS-Dev/MPAS-Limited-Area.git

After changing to the MPAS-Limited-Area directory that

should have been created by the git clone command, we

can prepare a file describing our desired regional domain. Depending on the type

of region that we intend to define (circular, elliptical, channel, or polygon),

we can use any of the example region definition files in the docs/points-examples/ sub-directory as a starting point.

For the purpose of illustration, we'll use the japan.ellipse.pts

file from docs/points-examples/ as a starting point to set up

an elliptical domain over the Mediterranean Sea; however, you may set up your

regional domain over any other part of the globe using any of the available region types.

After copying this file to a file named mediterranean.pts in our

current working directory with

$ cp docs/points-examples/japan.ellipse.pts mediterranean.pts

we can edit the file so that its contents look like the following:

Name: Mediterranean

Type: ellipse

Point: 37.9, 18.0

Semi-major-axis: 3200000

Semi-minor-axis: 1700000

Orientation-angle: 100

Again, you can set up any alternative regional domain as you prefer — the Mediterranean

domain is only used here as an example!

To aid in locating your regional domain, it may be helpful to use

OpenStreetMap, where you can right-click

anywhere on a map and choose "Show address" to find the latitude and longitude of a point.

Having prepared a region definition file, we can use the create_region

tool to create a subset of an existing global "static" file for our specified region. We can symbolically

link a global, quasi-uniform, 60-km static file to our working directory with:

$ ln -s /glade/p/mmm/wmr/mpas_tutorial/meshes/x1.163842.static.nc .

Then, we can create a regional static file with a command like:

$ ./create_region mediterranean.pts x1.163842.static.nc

The create_region tool should take just a few seconds to run, and the result

should be a new static file and a new graph.info file, named according to

the "Name" we specified in our region definition file, e.g.:

Mediterranean.static.nc

Mediterranean.graph.info
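If you chose a different "Name" for your region, the file names to look for can be derived from the region definition file. The sketch below reads the Name line and prints the two expected names; the function name expected_region_files is hypothetical, and it assumes the naming pattern shown in the example above.

```shell
# Hypothetical helper: print the file names expected from create_region,
# assuming they follow the <Name>.static.nc and <Name>.graph.info pattern.
expected_region_files() {
    # $1 = region definition file, e.g. mediterranean.pts
    region_name=$(sed -n 's/^Name:[[:space:]]*//p' "$1" | head -n 1)
    echo "$region_name.static.nc"
    echo "$region_name.graph.info"
}
```

Running ls -l $(expected_region_files mediterranean.pts) then lists both files if create_region completed successfully.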

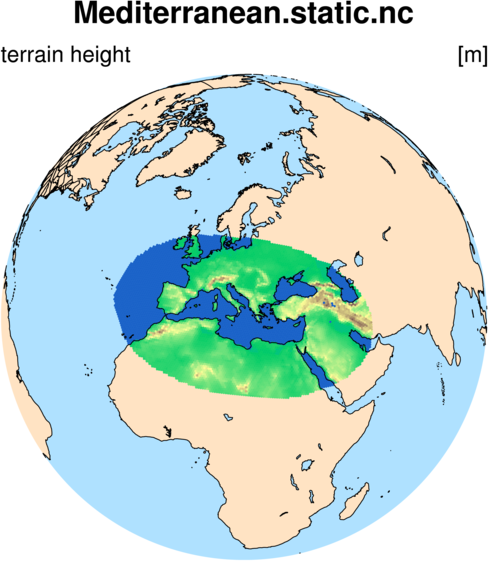

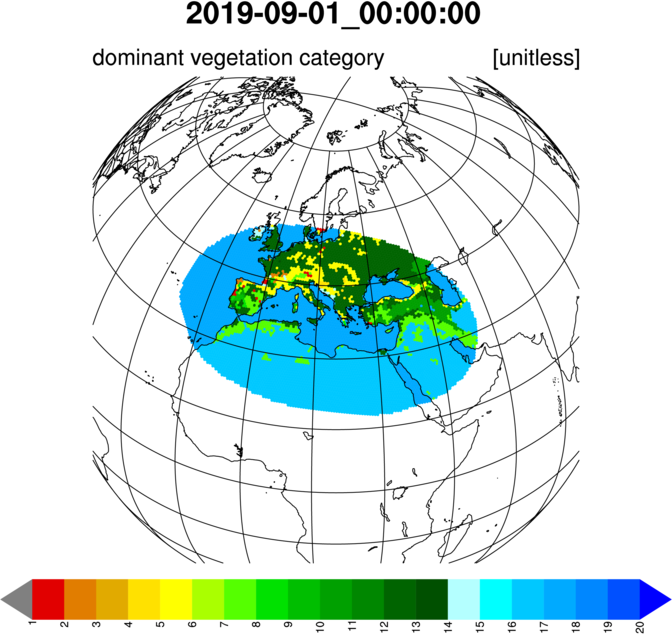

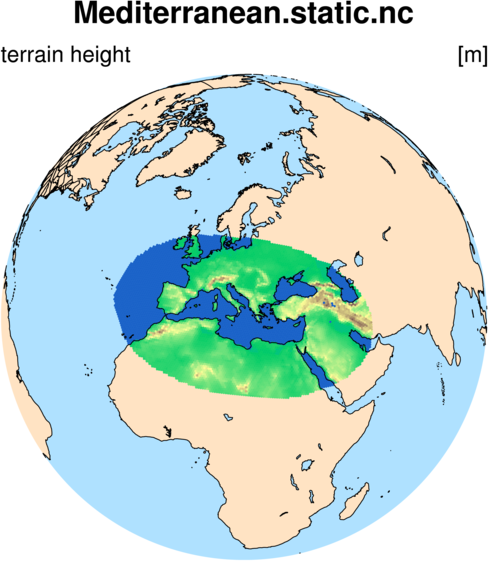

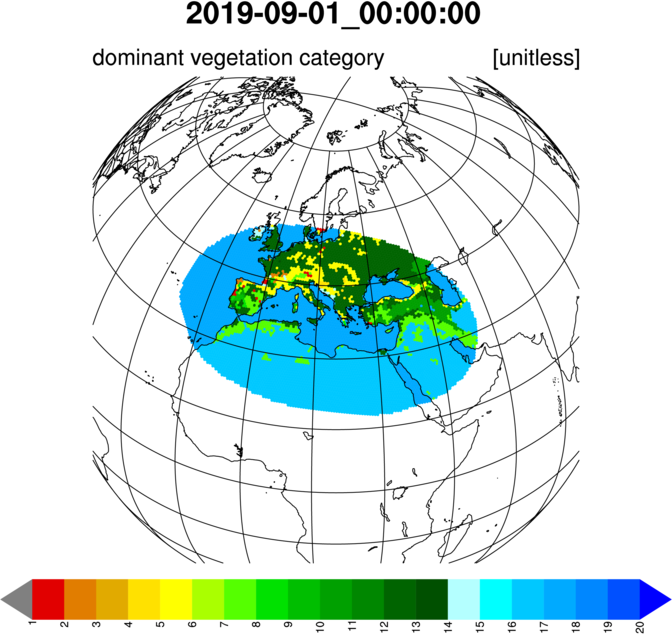

At this point, we may like to plot one of the time-invariant, terrestrial fields from our new regional

static file. We can plot the terrain height field using an NCL script, regional_terrain.ncl,

which we will first copy to our working directory: