Running WRF

By default, the WRF model is a fully compressible, nonhydrostatic model with a hybrid vertical coordinate based on hydrostatic pressure (HVC) and Arakawa C-grid staggering. It uses 2nd- and 3rd-order Runge-Kutta time-integration schemes and 2nd- to 6th-order advection schemes in both the horizontal and vertical directions, with a time-split small step for acoustic and gravity-wave modes. The dynamics conserve scalar variables.

WRF model code contains the following programs:

real.exe or ideal.exe

An initialization program for either real-data (real.exe) or idealized (ideal.exe) cases

wrf.exe

A numerical integration program

ndown.exe

A program allowing one-way nesting for domains run separately

tc.exe

A program for tropical storm bogussing

Version 4 of WRF supports the following capabilities:

Real-data and idealized simulations

Various lateral boundary condition options

Full physics options, with various filters

Positive-definite advection scheme

Hydrostatic runtime option

Terrain-following vertical coordinate option

One-way, two-way, and moving nest options

Three-dimensional analysis nudging

Observation nudging

Regional and global applications

Digital filter initialization

Vertical refinement for a nested domain

Idealized Cases

WRF Idealized Simulation

A WRF model simulation of an “idealized,” simplified, and controlled environment.

To run an ideal simulation, WRF must be compiled for one of the available ideal test cases. The following two executables are built in the WRF/main directory, and are linked to WRF/test/em_[case], where [case] refers to the specific ideal simulation.

- ideal.exe

The Idealized Case Initialization program for the test case

- wrf.exe

The primary numerical integration program

Note

Backward Compatibility

By default, all ideal cases use moist potential temperature (use_theta_m=1), and the WRF model uses a hybrid vertical coordinate (hybrid_opt=2). To use wrfinput files generated with WRF code prior to v4.0 as input to wrf.exe, set the following in the specified records of the namelist.input file found in WRF/test/em_[case]:

Turn off the moist theta option in the &dynamics record (use_theta_m=0).

Turn off the hybrid vertical coordinate in the &dynamics record (hybrid_opt=0).

Set force_use_old_data=.true. in the &time_control record.
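The three settings above belong to two different records. As a minimal sketch, the following Python prints them in Fortran namelist syntax so they can be merged by hand into the existing &time_control and &dynamics records. The helpers `format_value` and `render_namelist` are hypothetical (not part of WRF), and the output is a fragment, not a complete namelist.input.

```python
# Minimal sketch: print namelist settings in Fortran namelist syntax.
# format_value and render_namelist are hypothetical helpers, not WRF tools.


def format_value(value):
    """Format one Python value as a Fortran namelist value."""
    if isinstance(value, bool):  # check bool first: bool is a subclass of int
        return ".true." if value else ".false."
    if isinstance(value, str):
        return f"'{value}'"
    return str(value)


def render_namelist(records):
    """Render {record_name: {variable: value}} as namelist text."""
    lines = []
    for record, variables in records.items():
        lines.append(f"&{record}")
        for name, value in variables.items():
            lines.append(f" {name} = {format_value(value)},")
        lines.append("/")
    return "\n".join(lines)


# Settings for reading wrfinput files written by WRF code prior to v4.0.
legacy_input = {
    "time_control": {"force_use_old_data": True},
    "dynamics": {"use_theta_m": 0, "hybrid_opt": 0},
}

if __name__ == "__main__":
    print(render_namelist(legacy_input))
```

Each record opens with `&name` and closes with `/`; merge the variable lines into the matching records rather than appending duplicate records.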

The following instructions apply to the “3-D Baroclinic Wave Case,” but can be adapted for other ideal cases. Commands are issued in a terminal window.

Move to the test case directory.

cd WRF/test/em_b_wave

Edit the namelist.input file to set integration length, output frequency, domain size, timestep, physics options, and other parameters (see README.namelist in the WRF/run directory, or Namelist Variables), then save the file.

Run the ideal initialization program.

For a “serial” code build:

./ideal.exe >& ideal.log

For code built for parallel computing (e.g., dmpar):

mpirun -np 1 ./ideal.exe

Note

ideal.exe must only be run with a single processor (denoted by “-np 1”), even if built for parallel computing. Later, wrf.exe may be run with multiple processors.

ideal.exe typically reads an input sounding available in the case directory and generates an initial condition file, wrfinput_d01. Ideal cases do not require a lateral boundary file because boundary conditions are handled by namelist options. Successful completion is indicated by SUCCESS COMPLETE IDEAL INIT at the end of ideal.log (or rsl.out.0000 for parallel execution).

Run the WRF model.

For a “serial” code build:

./wrf.exe >& wrf.log

For code built for parallel computing (this example requests 8 processors):

mpirun -np 8 ./wrf.exe

Upon successful completion, SUCCESS COMPLETE WRF appears at the end of wrf.log (or rsl.out.0000 for parallel execution). The following files are generated based on user settings:

- rsl.out.* and rsl.error.*

(MPI runs only) pairs of WRF standard output and error files, one pair per processor

- wrfout_d0x_YYYY-MM-DD_hh:mm:ss

WRF model output, where x denotes the domain ID, and the remaining variables represent the date and time for which the file is valid

- wrfrst_d0x_YYYY-MM-DD_hh:mm:ss

Files used to restart a simulation. These are created if restart_interval in namelist.input is shorter than the total simulation time. Here, x denotes the domain ID, and the remaining variables represent the date and time for which the file is valid.
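Because the domain ID and valid time are encoded in the file name, they can be recovered without opening the file. The following is a minimal Python sketch; `parse_wrf_filename` is a hypothetical helper, and it assumes the default name pattern described above (two-digit domain ID, colons in the time stamp).

```python
import re
from datetime import datetime

# Matches names such as wrfout_d01_2007-08-20_12:00:00 (assumed default pattern).
WRF_FILE_RE = re.compile(
    r"^(?P<kind>wrfout|wrfrst)_d(?P<domain>\d{2})_"
    r"(?P<stamp>\d{4}-\d{2}-\d{2}_\d{2}:\d{2}:\d{2})$"
)


def parse_wrf_filename(name):
    """Split a wrfout/wrfrst file name into (kind, domain ID, valid time)."""
    match = WRF_FILE_RE.match(name)
    if match is None:
        raise ValueError(f"not a wrfout/wrfrst file name: {name!r}")
    valid_time = datetime.strptime(match["stamp"], "%Y-%m-%d_%H:%M:%S")
    return match["kind"], int(match["domain"]), valid_time


if __name__ == "__main__":
    print(parse_wrf_filename("wrfout_d02_2007-08-20_12:00:00"))
```

Raising `ValueError` for other names (for example wrfinput_d01) keeps the helper from silently misreading input files as output.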

See Idealized Case Initialization for additional information on idealized cases.
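The success messages quoted above can be checked from a script, which is convenient when runs are submitted through a batch system. This minimal Python sketch uses hypothetical helper names; it only searches the last lines of a log (ideal.log or wrf.log for serial runs, rsl.out.0000 for MPI runs) for the marker text and does not otherwise validate the output files.

```python
from pathlib import Path

# Completion messages quoted in this section.
IDEAL_MARKER = "SUCCESS COMPLETE IDEAL INIT"
WRF_MARKER = "SUCCESS COMPLETE WRF"


def log_reports_success(log_text, marker, tail_lines=20):
    """True if `marker` appears in the last `tail_lines` lines of `log_text`."""
    lines = log_text.splitlines()
    return any(marker in line for line in lines[-tail_lines:])


def file_reports_success(log_path, marker, tail_lines=20):
    """Same check on a log file; a missing file counts as failure."""
    path = Path(log_path)
    if not path.is_file():
        return False
    text = path.read_text(errors="replace")
    return log_reports_success(text, marker, tail_lines)


if __name__ == "__main__":
    # Serial builds write ideal.log; MPI builds write rsl.out.0000.
    for name in ("ideal.log", "rsl.out.0000"):
        print(name, file_reports_success(name, IDEAL_MARKER))
```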

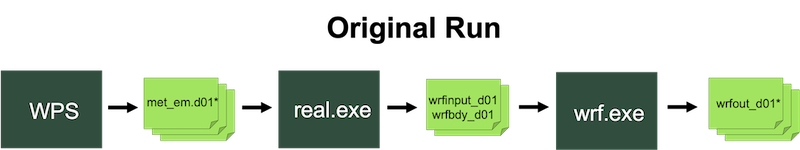

Real-data Case

WRF Real-data Simulation

A 3-D model simulation that uses real static geographical data (e.g., landuse) from reputable surveying projects, along with output from a previously run external atmospheric analysis or forecast model (e.g., GFS) to provide initial and boundary conditions for the WRF simulation

To run WRF for a real-data case:

Move to the working directory.

cd WRF/test/em_real

Note

Real data cases can also be run in the WRF/run directory.

Before running a real-data case, successfully run the WRF Preprocessing System (WPS) to produce met_em.* files for input to the real.exe program. Link these files to the WRF running directory.

ln -sf ../../../WPS/met_em* .

Edit the namelist.input file for case specifics. Set dates and domain dimensions to match those set in WPS. For a single domain, only the first column is read, so it is not necessary to remove additional columns.

Run the real-data initialization program using commands based on the compile type.

For WRF built for serial computing, or OpenMP (smpar)

./real.exe >& real.log

For WRF built for parallel computing (dmpar), for example with four processors

mpiexec -np 4 ./real.exe

Upon successful completion, real_em: SUCCESS EM_REAL INIT is printed at the end of real.log (or rsl.out.0000 for a dmpar run), and wrfinput_d0* files (one per domain) and a wrfbdy_d01 file are generated. These files are used as input to the wrf.exe program.

Run the WRF model.

For WRF built for serial computing, or OpenMP (smpar)

./wrf.exe >& wrf.log

For WRF built for parallel computing (dmpar), for example with four processors

mpiexec -np 4 ./wrf.exe

Upon successful completion, SUCCESS COMPLETE WRF is printed to the end of the wrf.log file (or rsl.out.0000 for parallel execution). The following files are generated based on user settings:

- rsl.out.* and rsl.error.*

(MPI runs only) pairs of WRF standard output and error files, one pair per processor

- wrfout_d0x_YYYY-MM-DD_hh:mm:ss

WRF output, where x denotes the domain ID, and the remaining variables represent the date and time for which the file is valid; multiple wrfout files may be generated and may include multiple time periods, depending on namelist settings for history_interval and frames_per_outfile.

- wrfrst_d0x_YYYY-MM-DD_hh:mm:ss

Files used to restart a simulation. These are created if restart_interval in namelist.input is shorter than the total simulation time. Here, x denotes the domain ID, and the remaining variables represent the date and time for which the file is valid.

To check the times written to any netCDF output file, run ncdump with the actual name of a single file:

ncdump -v Times wrfout_d0x_YYYY-MM-DD_hh:mm:ss
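How history_interval and frames_per_outfile combine into multiple wrfout files can be illustrated with a short Python sketch. It assumes output is written at the start time and every history_interval minutes through the end of the run, and that each file is named after its first valid time; `wrfout_names` is a hypothetical helper, not a WRF utility.

```python
from datetime import datetime, timedelta


def wrfout_names(start, run_hours, history_interval, frames_per_outfile, domain=1):
    """Return (file name, number of times) for each wrfout file of one domain.

    history_interval is in minutes. Assumes output at the start time and
    every history_interval through the end of the run, with each file named
    after its first valid time.
    """
    step = timedelta(minutes=history_interval)
    end = start + timedelta(hours=run_hours)
    times = []
    t = start
    while t <= end:
        times.append(t)
        t += step
    files = []
    for first in range(0, len(times), frames_per_outfile):
        chunk = times[first:first + frames_per_outfile]
        name = f"wrfout_d{domain:02d}_{chunk[0]:%Y-%m-%d_%H:%M:%S}"
        files.append((name, len(chunk)))
    return files


if __name__ == "__main__":
    for name, count in wrfout_names(datetime(2000, 1, 24, 12), 12, 180, 3):
        print(name, count)
```

With a 12-hour run and 3-hourly output there are five output times; frames_per_outfile=3 packs them into two files, while frames_per_outfile=1 gives one file per time.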

Restart Option

WRF Restart Simulation

An option that allows extending a simulation over multiple shorter runs, which is useful, for example, when the full simulation exceeds the wallclock time available on a queueing system. The final results are identical to a single, continuous run, with exceptions noted in WRF Known Problems & Solutions.

To initiate a restart:

Before the initial simulation, set restart_interval (in minutes) in namelist.input to a value less than the simulation length. This prompts wrf.exe to generate a restart file at that interval, named wrfrst_d<domain>_<date>, with the date/time representing the time when the restart file is valid.

- After the initial simulation, if a valid wrfrst_d<domain>_<date> file exists, modify the namelist by setting:

start_* (start_year, start_month, start_day, start_hour, etc.) = the restart time (<date> of the restart file)

restart = .true.

Run wrf.exe as usual.
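The restart bookkeeping above can be sketched in Python: which wrfrst valid times a run produces, and which start_* values to use when resuming from one of them. This is an illustration under stated assumptions: a restart file is written at every multiple of restart_interval up to and including the end of the run (none at the initial time), and all helper names are hypothetical.

```python
from datetime import datetime, timedelta


def restart_times(start, run_minutes, restart_interval):
    """Valid times of the wrfrst files one run writes.

    run_minutes and restart_interval are in minutes. Assumes a file at every
    multiple of restart_interval up to and including the end of the run.
    """
    times = []
    elapsed = restart_interval
    while elapsed <= run_minutes:
        times.append(start + timedelta(minutes=elapsed))
        elapsed += restart_interval
    return times


def restart_file_name(valid_time, domain=1):
    """wrfrst_d<domain>_<date> name for a restart valid at `valid_time`."""
    return f"wrfrst_d{domain:02d}_{valid_time:%Y-%m-%d_%H:%M:%S}"


def restart_settings(valid_time):
    """&time_control values to change before resuming from `valid_time`."""
    return {
        "start_year": valid_time.year,
        "start_month": valid_time.month,
        "start_day": valid_time.day,
        "start_hour": valid_time.hour,
        "start_minute": valid_time.minute,
        "start_second": valid_time.second,
        "restart": True,
    }


if __name__ == "__main__":
    for t in restart_times(datetime(2000, 1, 24, 12), 24 * 60, 720):
        print(restart_file_name(t), restart_settings(t))
```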

The following additional namelist options are available for restarts:

override_restart_timers=.true.

Use this (in &time_control) if the history and/or restart interval is modified before the restart and the new output times are not as expected.

write_hist_at_0h_rst=.true.

Set this (in &time_control) to write WRF history/output at the initial time of the restart simulation.

Note

Restart files, often much larger than WRF history/output files, may fail to write in netCDF format due to size. Try setting io_form_restart=102 (instead of 2) to write the restart file in multiple pieces, one per processor. Restart the model using the same number of processors.

WRF Nesting

Nested Simulation

A simulation in which a coarse-resolution domain (parent) contains at least one embedded finer-resolution domain (child)

Nested domains can be simulated simultaneously or separately. The nest receives lateral boundary data from its parent and, depending on the namelist setting for feedback, may also provide data back to the parent.

See the WPS section on Nested Domains to determine whether to use a nest.

The following nesting options are available:

Basic Nesting

WRF simulations with basic nesting use multiple domains at different grid resolutions. These run simultaneously, communicating via one-way or two-way nesting, determined by the feedback namelist setting. When building WRF, choose the “basic” nest option (option 1) during configuration.

- Two-way Nesting

feedback=1

The coarser (parent) domain provides boundary data for the higher-resolution nest (child), and the nest feeds its calculations back to the coarser domain. The model can handle multiple domains at the same nest level (no overlapping nests), and multiple nest levels (telescoping).

- One-way Nesting

feedback=2

Communication is only conducted from the parent domain to the child domain. There is no feedback to the parent.

Nesting options are declared in the namelist. Edit multi-column variables and do not add columns to single-column parameters to avoid errors. Start with the default namelist and modify key namelist variables:

Note

See Namelist Variables for additional descriptions of the following variables.

feedback

this determines whether the simulation is a two-way or one-way nested run.

start_*, end_*

start and end simulation times for each domain

input_from_file

set to .true. or .false., indicating whether a nest requires an input file (e.g., wrfinput_d02); typically for real-data cases that include nest topography and land information.

fine_input_stream

determines which fields (defined in Registry/Registry.EM_COMMON) from the nest input file are used in nest initialization; typically includes static fields (e.g., terrain and landuse) and masked surface fields (e.g., skin temperature, soil moisture and temperature); useful for a nest starting at a later time than the coarse domain.

max_dom

the total number of domains to process

grid_id

domain identifier used in the wrfout naming convention. Set the coarsest grid to 1.

parent_id

indicates each nest’s parent domain; set to the grid_id value of the parent (e.g., d02’s parent is d01, so parent_id for column two should be set to 1).

i_parent_start, j_parent_start

lower-left corner starting indices of the nest domain within its parent; set to the same values used in namelist.wps.

parent_grid_ratio

integer ratio of the parent grid spacing to the nest grid spacing (e.g., 3 for a 9-km nest inside a 27-km parent); odd ratios (3:1 and 5:1) are recommended for real-data applications

parent_time_step_ratio

integer time-step ratio for the nest, which can differ from parent_grid_ratio, though the two are typically set the same.

smooth_option

a smoothing option for the parent domain in the area of the nest if feedback=1. Available options: 0 = no smoothing; 1 = 1-2-1 smoothing; 2 = smoothing-desmoothing.

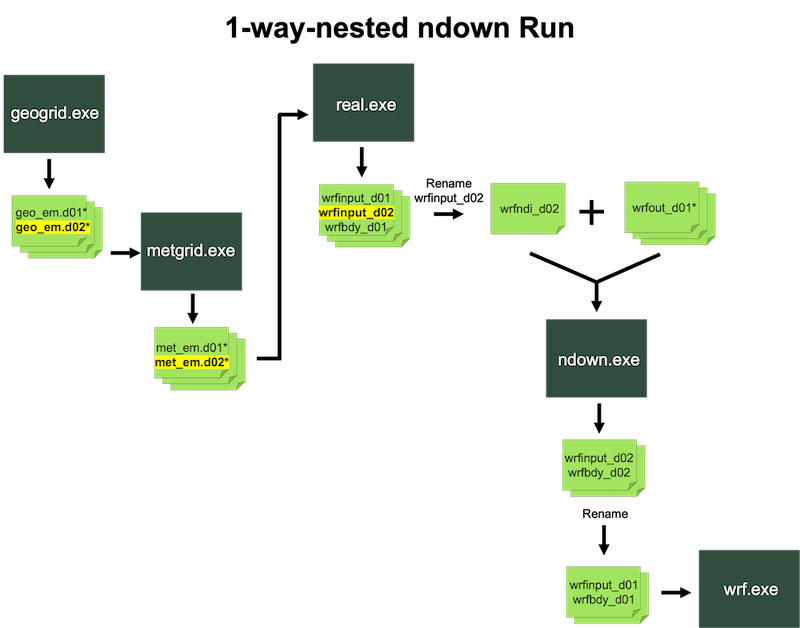
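Several of the nesting constraints above can be checked before running real.exe. The Python sketch below is a simplified, hypothetical checker (`Domain` and `check_nests` are not WRF code). It flags a parent_id that does not refer to an earlier domain, even parent_grid_ratio values (odd ratios are recommended above), nest dimensions where e_we-1 or e_sn-1 is not a multiple of parent_grid_ratio (a requirement WPS enforces for nests), and nests whose far edge, measured in parent grid points, reaches past the parent. WPS and WRF apply their own, stricter tests.

```python
from dataclasses import dataclass


@dataclass
class Domain:
    """One column of the &domains record (hypothetical container)."""
    grid_id: int
    parent_id: int
    e_we: int
    e_sn: int
    i_parent_start: int
    j_parent_start: int
    parent_grid_ratio: int


def check_nests(domains):
    """Return a list of problems in a nested &domains setup (simplified checks)."""
    problems = []
    by_id = {d.grid_id: d for d in domains}
    for d in domains[1:]:  # column 1 is the outermost domain
        tag = f"d{d.grid_id:02d}"
        parent = by_id.get(d.parent_id)
        if parent is None or d.parent_id >= d.grid_id:
            problems.append(f"{tag}: parent_id {d.parent_id} is not an earlier domain")
            continue
        r = d.parent_grid_ratio
        if r % 2 == 0:
            problems.append(f"{tag}: even parent_grid_ratio {r} (odd ratios recommended)")
        for name, size, start, parent_size in (
            ("e_we", d.e_we, d.i_parent_start, parent.e_we),
            ("e_sn", d.e_sn, d.j_parent_start, parent.e_sn),
        ):
            if (size - 1) % r != 0:
                problems.append(f"{tag}: {name}-1 = {size - 1} is not a multiple of {r}")
            elif start + (size - 1) // r >= parent_size:
                # The nest's far edge, in parent grid points, must stay inside the parent.
                problems.append(f"{tag}: nest extends past its parent in {name}")
    return problems


if __name__ == "__main__":
    d01 = Domain(1, 1, 74, 61, 1, 1, 1)
    d02 = Domain(2, 1, 112, 97, 31, 17, 3)
    print(check_nests([d01, d02]) or "no problems found")
```

In the example, the 3:1 nest spans (112 - 1) / 3 = 37 parent points in x starting at i_parent_start=31, so it ends at parent point 68, inside the 74-point parent.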

Ndown One-way Nesting

The ndown program facilitates one-way nesting by running a finer-resolution domain after a coarser domain is run. The ndown.exe executable (built during the WRF compile) generates initial and lateral boundary conditions for the fine-grid run, from the coarse-grid output, incorporating high-resolution terrestrial data (e.g., terrain, land use) and masked surface fields (e.g., soil temperature, moisture). Ndown can be useful for the following scenarios:

A long simulation is followed by a decision to embed a higher-resolution nest.

Multiple nests exist, and finer-resolution domains have significantly more grid points than parent domains, requiring separate runs due to processor limitations. See Choosing an Appropriate Number of Processors for details.

Running Ndown

Note

Using ndown requires the code to be compiled for nesting.

Obtain output from a single coarse grid WRF simulation.

Frequent output (e.g. hourly) from the coarse grid run is recommended for better boundary specifications.

Run geogrid.exe and metgrid.exe for two domains.

Run real.exe for 2 domains.

This step ingests high-resolution terrestrial and land-water masked soil fields.

Move to the wrf/test/em_real directory and link-in the met_em* files.

cd wrf/test/em_real
ln -sf <path-to-WPS-directory>/met_em* .

In namelist.input set max_dom=2 and set up columns 1 and 2 for a 2-domain run (e.g., start/end times and grid dimensions).

Run real.exe, which generates initial condition and lateral boundary files wrfinput_d01, wrfinput_d02, and wrfbdy_d01

Run ndown.exe to create fine-grid initial and boundary condition files.

Rename wrfinput_d02 to wrfndi_d02

mv wrfinput_d02 wrfndi_d02

Modify and check the following namelist parameters:

Add io_form_auxinput2=2 to the &time_control record.

interval_seconds should reflect the WRF history/output interval from the coarse domain model run.

Set max_dom=2.

Do not change physics options until after running the ndown program.

(Optional) To refine vertical resolution, set vert_refine_fact (see Namelist Variables for details). Alternatively, use the utility v_interp to refine vertical resolution (see WRF Post-processing, Utilities, & Tools for details).

Run ndown.exe, which uses input from the coarse grid wrfout* file(s), and the wrfndi_d02 file to produce wrfinput_d02 and wrfbdy_d02 files.

./ndown.exe >& ndown.out

or

mpiexec -np 4 ./ndown.exe

Run the fine-grid WRF simulation.

Rename wrfinput_d02 to wrfinput_d01 and wrfbdy_d02 to wrfbdy_d01

mv wrfinput_d02 wrfinput_d01
mv wrfbdy_d02 wrfbdy_d01

Rename (or move) the original wrfout_d01* files to prevent overwriting.

Modify and check the following namelist parameters.

Move the fine-grid domain settings for e_we, e_sn, dx, and dy from column 2 to column 1.

Set time_step to comply with the fine-grid domain (typically 6*DX, in seconds, with DX in km).

Set max_dom=1

(Optional) Physics options can be modified at this stage, except the land surface scheme (sf_surface_physics), because land surface schemes use different soil layer depths.

(Optional) At this stage, have_bcs_moist=.true. and have_bcs_scalar=.true. may be set to prompt the initial and lateral boundaries to use the moist and scalar arrays. These can only be used when microphysics options are not changed. This provides realistic lateral boundary tendencies for all microphysical variables, unlike simpler zero inflow/gradient outflow options.

Run WRF for this grid.

wrfout_d01* files are generated, and despite the d01 domain ID, they contain data for the fine-resolution domain. Renaming these files may prevent confusion.
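The renaming around ndown.exe is easy to get out of order. The following Python sketch mirrors the mv commands above using pathlib. The function names and the `archive_dir` location for the coarse wrfout_d01* files are hypothetical, `archive_dir` is assumed to be on the same file system as the run directory, and running ndown.exe and wrf.exe themselves is left out.

```python
from pathlib import Path


def prepare_ndown_input(run_dir):
    """Before ndown.exe: real.exe's wrfinput_d02 becomes wrfndi_d02."""
    run = Path(run_dir)
    (run / "wrfinput_d02").replace(run / "wrfndi_d02")


def prepare_fine_grid_run(run_dir, archive_dir):
    """After ndown.exe: move coarse wrfout_d01* aside, then promote d02 files to d01."""
    run, archive = Path(run_dir), Path(archive_dir)
    archive.mkdir(parents=True, exist_ok=True)
    # Keep the coarse-grid output from being overwritten by the fine-grid run.
    for old in sorted(run.glob("wrfout_d01*")):
        old.replace(archive / old.name)
    # Path.replace overwrites an existing target, like mv.
    (run / "wrfinput_d02").replace(run / "wrfinput_d01")
    (run / "wrfbdy_d02").replace(run / "wrfbdy_d01")
```

Call `prepare_ndown_input` before ndown.exe and `prepare_fine_grid_run` after it; ndown.exe still needs the coarse wrfout_d01* files in place when it runs.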

Running ndown for Three or more Domains

Although ndown supports multiple nests, its hard-coded file names (d01 and d02) require care when carrying out the following steps:

Note

This example demonstrates nesting down to a 3rd domain (3 domains total), and assumes wrfout_d01* files already exist.

Run geogrid.exe and metgrid.exe for 3 domains.

Run real.exe for 3 domains.

Move to wrf/test/em_real and link-in the met_em* files.

In namelist.input set max_dom=3 and set up columns 1, 2 and 3 for a 3-domain run (e.g., start/end time and grid dimensions).

Run real.exe, which generates initial and lateral boundary files wrfinput_d01, wrfinput_d02, wrfinput_d03, and wrfbdy_d01.

Run ndown.exe to create domain 02’s initial and boundary condition files (see details in the above section).

Run wrf.exe for domain 2 (see details in the above section). wrfout_d01* files are generated, and despite the d01 domain ID, they contain data for d02.

Run ndown.exe to generate domain 03’s initial and boundary condition files.

Rename wrfinput_d03 to wrfndi_d02 (the file name this program expects).

Modify and check the following namelist parameters:

Set io_form_auxinput2 = 2 in &time_control.

Set interval_seconds to reflect the WRF history/output interval from the (new) coarse domain model run, i.e., the domain 02 run.

Set max_dom=2.

Move the values for i_parent_start and j_parent_start from column 3 to column 2. Keep the value 1 in column 1.

Do not change physics options until after running the ndown program.

Run ndown.exe, which uses input from the (new) coarse grid wrfout file(s) and the wrfndi_d02 file to produce wrfinput_d02 and wrfbdy_d02 files (both of which actually correspond to domain 03).

Run the fine-grid (d03) WRF simulation.

Rename wrfinput_d02 to wrfinput_d01.

Rename wrfbdy_d02 to wrfbdy_d01.

Rename (or move) the wrfout_d01* files to prevent overwriting (recall these files correspond to d02).

Modify and check the following namelist parameters:

Move the fine-grid domain settings for e_we, e_sn, dx, and dy from column 3 (domain 03) to column 1 (domain 01).

Set time_step to comply with the fine-grid domain (typically 6*DX, in seconds, with DX in km).

Set max_dom=1.

wrf.exe generates wrfout_d01* files, and despite the d01 domain ID, they contain data for the fine-resolution domain (d03). Follow the same format for additional nests, utilizing the same naming convention (always use d01 and d02).
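The namelist changes for the final fine-grid run (moving e_we, e_sn, dx, and dy to column 1, setting max_dom=1, and choosing time_step) can be sketched in Python. This is a hypothetical helper operating on a plain dictionary, not a namelist parser; it assumes dx is given in meters and applies the 6*DX rule of thumb with DX in km and time_step in whole seconds (rounded down).

```python
FINE_GRID_KEYS = ("e_we", "e_sn", "dx", "dy")


def collapse_to_fine_grid(domains, column):
    """Return a copy of a &domains record set up for a single fine-grid run.

    `domains` maps variable names to values (lists for multi-column
    variables); `column` is 1-based, e.g. 3 for d03.
    """
    new = {key: list(value) if isinstance(value, list) else value
           for key, value in domains.items()}
    for key in FINE_GRID_KEYS:
        new[key][0] = domains[key][column - 1]
    new["max_dom"] = 1
    # Rule of thumb: time_step (s) of about 6 * DX (km), rounded down.
    new["time_step"] = int(6 * new["dx"][0] / 1000.0)
    return new


if __name__ == "__main__":
    record = {"max_dom": 3, "time_step": 162,
              "e_we": [74, 112, 94], "e_sn": [61, 97, 91],
              "dx": [27000, 9000, 3000], "dy": [27000, 9000, 3000]}
    print(collapse_to_fine_grid(record, 3))
```

The input record is left unchanged, so the same three-domain settings can be reused for the earlier d02 step.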

The following figure summarizes data flow for a one-way nested run using the ndown program.

Automatic Moving Nests (Vortex-following)

The automatic moving nest (or vortex-following) option tracks a tropical cyclone’s center of low pressure, allowing the nested domain to move within its parent domain. This saves computational cost by eliminating the need for a large, high-resolution nest while effectively tracking the cyclone’s movement.

This option requires WRF to be configured and compiled with vortex-following nesting (option 3) and distributed-memory parallelization option (dmpar) to utilize multiple processors.

Note

WRF compiled for vortex-following does not support the “specified move” or static nested (“basic”) options.

No nest input is needed, but note that this option performs best with a well-developed vortex.

To use non-default values, add and edit the following namelist variables in the &domains record:

vortex_interval

how often WRF calculates the vortex position, in minutes (default is 15 minutes)

max_vortex_speed

used with vortex_interval to compute the search radius for the new vortex center position (default is 40 m/s)

corral_dist

the minimum distance (in coarse grid cells) between a moving nest boundary and its parent domain boundary. This parameter is useful for systems with more than two domains, allowing nested domains (e.g., d03 within d02) to approach the parent’s boundary within corral_dist before the parent moves. Note that d01 (the coarsest domain) cannot move. This feature helps center telescoped nests, moving them in sync with a storm.

track_level

the pressure level (in Pa) at which the vortex is tracked

time_to_move

the time (in minutes) until the nest is moved. This option may help when the storm is still too weak to be tracked by the algorithm.
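As a rough illustration of how max_vortex_speed and vortex_interval interact, the farthest a vortex moving at max_vortex_speed can travel between two position checks is their product. This Python sketch computes that distance; it is not WRF's exact search-radius formula, and the function names are hypothetical.

```python
def max_vortex_displacement_km(max_vortex_speed=40.0, vortex_interval=15.0):
    """Distance (km) a vortex at max_vortex_speed (m/s) covers in vortex_interval (min)."""
    return max_vortex_speed * vortex_interval * 60.0 / 1000.0


def displacement_in_cells(distance_km, dx_km):
    """Express a distance as a number of grid cells of spacing dx_km."""
    return distance_km / dx_km


if __name__ == "__main__":
    d = max_vortex_displacement_km()
    print(d, displacement_in_cells(d, 3.0))
```

With the defaults (40 m/s, 15 minutes) the distance is 36 km, or 12 cells of a 3-km nest; halving vortex_interval halves it.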

When using this option, WRF writes storm information (vortex center location, minimum mean sea-level pressure, maximum 10-m winds) to the standard-out file (e.g. rsl.out.0000). The following command lists these data at 15-minute intervals:

grep ATCF rsl.out.0000

producing something similar to:

ATCF 2007-08-20_12:00:00 20.37 -81.80 929.7 133.9

ATCF 2007-08-20_12:15:00 20.29 -81.76 929.3 133.2
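The ATCF lines can also be collected into a storm track for plotting or comparison with observed tracks. The Python sketch below assumes the six whitespace-separated fields shown above (tag, valid time, latitude, longitude, minimum sea-level pressure, maximum 10-m wind) and leaves the wind in whatever units WRF prints; `AtcfFix` and `parse_atcf_lines` are hypothetical names.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass
class AtcfFix:
    """One ATCF line from rsl.out.0000 (hypothetical container)."""
    time: datetime
    lat: float
    lon: float
    min_slp: float   # minimum mean sea-level pressure (hPa in the example above)
    max_wind: float  # maximum 10-m wind, in the units WRF prints


def parse_atcf_lines(lines):
    """Collect storm fixes from lines of the form 'ATCF <time> <lat> <lon> <slp> <wind>'."""
    fixes = []
    for line in lines:
        parts = line.split()
        if len(parts) != 6 or parts[0] != "ATCF":
            continue  # not an ATCF line (or a format this sketch does not expect)
        fixes.append(AtcfFix(
            time=datetime.strptime(parts[1], "%Y-%m-%d_%H:%M:%S"),
            lat=float(parts[2]),
            lon=float(parts[3]),
            min_slp=float(parts[4]),
            max_wind=float(parts[5]),
        ))
    return fixes


if __name__ == "__main__":
    try:
        with open("rsl.out.0000") as log:
            track = parse_atcf_lines(log)
    except FileNotFoundError:
        track = []
    for fix in track:
        print(fix.time, fix.lat, fix.lon, fix.min_slp)
```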

Initial nest location is specified by namelist options i_parent_start and j_parent_start.

Using High-resolution Terrain and Landuse with Vortex-following

To incorporate high-resolution terrain and landuse input in a vortex-following simulation (see Chen et al., 2007):

Set the following prior to configuring and compiling WRF (this example is for a ‘bash’ shell environment).

export TERRAIN_AND_LANDUSE=1

By default, WPS uses MODIS landuse data, but this option requires landuse data prepared from USGS static fields. Before running geogrid.exe, set the following in namelist.wps:

geog_data_res = 'usgs_30s+default'

Obtain and unpack the following files to a single new directory:

wget https://www2.mmm.ucar.edu/wrf/src/wrf_files/glcc.tar.gz
wget https://www2.mmm.ucar.edu/wrf/src/wrf_files/topo.tar.gz

Add the following to the &time_control record in namelist.input before running real.exe and wrf.exe.

input_from_hires = .true., .true.,
rsmas_data_path = 'terrain_and_landuse_data_directory'

Note

input_from_hires overwrites the input_from_file setting for nest domains.

Set rsmas_data_path to the directory where glcc and topo data files are stored.

Specified Moving Nests

The specified moving nest option allows precise control of where the nest moves, but is complex to set up and has specific stipulations.

WRF must be configured and compiled with the preset moves nesting option (option 2), and configured for distributed-memory parallelization (dmpar) to make use of multiple processors.

Only coarse grid input files are required since nest initialization is defined from the coarse grid data.

In addition to standard nesting namelist settings, the following must be added to the &domains record:

Note

Code compiled with the “preset moves” option does not support static nested runs or the vortex-following option.

num_moves

the total number of moves during the model run. A move of any domain is counted toward this total. The maximum is coded to 50, but can be changed by modifying MAX_MOVES in frame/module_driver_constants.F (recompile WRF to reflect the change, but neither a clean -a nor a reconfigure is required).

move_id

a list of nest IDs (one per move) indicating which domain moves for any given move

move_interval

the number of minutes from the beginning of the run until the first move, which occurs on the next time step after the specified interval.

move_cd_x, move_cd_y

distance in number of grid points and direction of the nest move in x and y (positive numbers move the nest east and north; negative numbers move it west and south)
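The per-move lists above can be traced by hand to confirm where a nest ends up. The Python sketch below is bookkeeping only: it assumes moves are expressed in parent grid points and applied to i_parent_start/j_parent_start, performs none of WRF's validity checks, and `nest_track` is a hypothetical helper.

```python
def nest_track(i_parent_start, j_parent_start, moves, domain_id=2):
    """Follow one nest's lower-left corner through its specified moves.

    `moves` holds one (move_id, move_interval, move_cd_x, move_cd_y) tuple per
    move, as listed in the namelist; moves of other domains are skipped.
    Positive move_cd_x/move_cd_y shift the nest east/north.
    """
    track = [(0, i_parent_start, j_parent_start)]
    i, j = i_parent_start, j_parent_start
    for move_id, minutes, cd_x, cd_y in sorted(moves, key=lambda m: m[1]):
        if move_id != domain_id:
            continue
        i += cd_x
        j += cd_y
        track.append((minutes, i, j))
    return track


if __name__ == "__main__":
    # Hypothetical example: d02 moves twice and d03 once, so num_moves = 3.
    moves = [(2, 60, 1, 0), (3, 90, 1, 1), (2, 120, 0, -1)]
    print(nest_track(31, 17, moves))
```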

Run-time Options

WRF offers special run-time options (see namelist.input file options for a full list). The following options are discussed below:

SST Update

SST-update

An option to incorporate higher-resolution time-varying data for sea-surface temperature (SST), vegetation fraction, albedo, and sea ice into the WRF simulation.

Most input data include SST and sea-ice fields, which are often sufficient for shorter simulations since ocean temperatures do not change quickly. However, for WRF simulations of five or more days, it is recommended to use the sst_update option to incorporate time-varying data for sea-surface temperature (SST), vegetation fraction, albedo, and sea ice, as most WRF physics schemes do not predict these fields.

To use this option, obtain additional time-varying SST and sea ice fields for WPS processing (see Using Multiple Meteorological Data Sources). By default, twelve monthly values of vegetation fraction and albedo are processed during geogrid. After WPS, set the following options in the namelist.input &time_control record before running real.exe and wrf.exe:

io_form_auxinput4 = 2

auxinput4_inname = "wrflowinp_d<domain>"

auxinput4_interval = 360, 360, 360

and in the &physics record:

sst_update = 1

real.exe will generate a wrflowinp_d<domain> file, in addition to wrfinput_d0* and wrfbdy_d01.

Note

sst_update cannot be used with sf_ocean_physics or vortex-following options.

Adaptive Time-stepping

Adaptive Time-stepping

An option to maximize the WRF model time step while maintaining numerical stability.

A shorter time step is sometimes needed to maintain model stability, which can make WRF run much slower. The adaptive time-stepping option addresses this by adjusting the model time step based on the domain-wide horizontal and vertical stability criterion (the Courant-Friedrichs-Lewy (CFL) condition). The following set of values typically works well and should be set in the &domains namelist record:

use_adaptive_time_step = .true.

- step_to_output_time = .true.

If nest domain output times continue to be incorrect, try using adjust_output_times = .true.

target_cfl = 1.2, 1.2, 1.2 (max_dom)

- max_step_increase_pct = 5, 51, 51 (max_dom)

A large percentage value for the nest allows the nested time step more freedom to adjust

- starting_time_step = -1, -1, -1 (max_dom)

The default value “-1” means 4*DX at start time

- max_time_step = -1, -1, -1 (max_dom)

The default value “-1” means 8*DX at start time

- min_time_step = -1, -1, -1 (max_dom)

The default value “-1” means 3*DX at start time

- adaptation_domain=

An integer value indicating which domain drives the adaptive time step

See Namelist Variables for additional information on these options.
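The -1 defaults and the roles of target_cfl and max_step_increase_pct can be illustrated with a simplified Python sketch. It treats DX as the grid spacing in km and time steps in seconds, scales the step so the largest Courant number moves toward target_cfl, limits growth to max_step_increase_pct per adjustment, and clips to the minimum and maximum steps. This sketches the idea only, not WRF's adaptive time-step algorithm, and the helper names are hypothetical.

```python
def default_time_step_bounds(dx_m):
    """(starting, max, min) time steps in seconds for the -1 defaults above.

    Assumes DX is the grid spacing in km, as in the 6*DX rule of thumb.
    """
    dx_km = dx_m / 1000.0
    return 4 * dx_km, 8 * dx_km, 3 * dx_km


def next_time_step(dt, max_cfl, target_cfl=1.2, max_step_increase_pct=5,
                   min_dt=None, max_dt=None):
    """Propose the next time step (s) from the current step and largest Courant number.

    Simplified sketch: scale dt toward target_cfl, cap growth at
    max_step_increase_pct percent, apply decreases at once, then clip to
    [min_dt, max_dt] when those bounds are given.
    """
    proposed = dt * target_cfl / max_cfl
    proposed = min(proposed, dt * (1 + max_step_increase_pct / 100.0))
    if min_dt is not None:
        proposed = max(proposed, min_dt)
    if max_dt is not None:
        proposed = min(proposed, max_dt)
    return proposed


if __name__ == "__main__":
    start, upper, lower = default_time_step_bounds(12000.0)
    dt = next_time_step(start, max_cfl=0.6, min_dt=lower, max_dt=upper)
    print(start, upper, lower, dt)
```

For a 12-km grid the -1 defaults give 48 s to start, 96 s at most, and 36 s at least; with plenty of CFL headroom the 5% cap limits the next step to 50.4 s.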

Stochastic Parameterization Schemes

WRF Stochastic Parameterization

Parameterization schemes used to represent model uncertainty in ensemble simulations by applying a small perturbation to each member at every time step

The stochastic parameterization suite comprises a number of stochastic parameterization schemes, some widely used and some developed for very specific applications. Each scheme generates its own random perturbation field, characterized by spatial and temporal correlations and an overall perturbation amplitude, defined in the &stoch namelist.input record.

Random perturbations are generated on the parent domain at every time step and, by default, interpolated to the nested domain(s). The namelist settings determine on which domains these perturbations are applied. For example, setting sppt=0,1,1 applies perturbations only on the nested domains (d02 and d03).

Since the scheme uses Fast Fourier Transforms (FFTs; provided in the library FFTPACK), the recommended number of gridpoints in each direction is a product of small primes. Using a large prime in at least one direction may substantially increase computational cost.
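A quick way to check a candidate grid size is to factor it. The Python sketch below tests whether a count is built only from small primes and finds the next such count. Treating 2, 3, and 5 as the "small primes" is an assumption, as is applying the check directly to the grid-point count; for a nest, e_we-1 and e_sn-1 must also stay multiples of parent_grid_ratio.

```python
def is_small_prime_product(n, primes=(2, 3, 5)):
    """True if n > 0 factors completely into the given small primes."""
    if n < 1:
        return False
    for p in primes:
        while n % p == 0:
            n //= p
    return n == 1


def next_fft_friendly(n, primes=(2, 3, 5)):
    """Smallest m >= n that is a product of the given small primes."""
    m = n
    while not is_small_prime_product(m, primes):
        m += 1
    return m


if __name__ == "__main__":
    for count in (97, 100, 101):
        print(count, is_small_prime_product(count), next_fft_friendly(count))
```

For example, 97 is prime, and the nearest larger size built from 2, 3, and 5 is 100 = 2² × 5².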

Note

All options below are set in a &stoch record added to namelist.input.

max_dom indicates a value is needed for each domain

Random Perturbation Field

This option generates a 3-D Gaussian random perturbation field for user-implemented applications.

Activate this option by setting (max_dom):

rand_perturb=1,1

The perturbation field is saved in the WRF history/output files (wrfout*) as rand_pert.

Stochastically Perturbed Physics Tendencies (SPPT)

A random pattern perturbs accumulated physics tendencies (except those from microphysics) of:

potential temperature

wind, and

humidity

For details on the WRF implementation see Berner et al., 2015.

Activate this option by setting (max_dom):

sppt=1,1

The perturbation field is saved in the WRF history/output files (wrfout*) as rstoch.

Stochastic Kinetic-Energy Backscatter Scheme (SKEBS)

A random pattern perturbs:

potential temperature, and

the rotational wind component

Wind perturbations are proportional to the square root of the kinetic-energy backscatter rate, and temperature perturbations are proportional to the potential energy backscatter rate. For details on the WRF implementation see Berner et al., 2011 and WRF Implementation Details and Version history of a Stochastic Kinetic-Energy Backscatter Scheme (SKEBS). By default, parameters apply to synoptic-scale perturbations in the mid-latitudes. Tuning strategies are discussed in Romine et al. 2014 and Ha et al. 2015.

Activate this option by setting (max_dom):

skebs=1,1

The perturbation fields are saved in the WRF history/output files (wrfout*) as:

ru_tendf_stoch* (for u)

rv_tendf_stoch (for v)

rt_tendf_stoch (for θ)

Stochastically Perturbed Parameter Scheme (SPP)

A random pattern perturbs parameters in the following selected physics packages:

GF convection scheme

MYNN boundary layer scheme

RUC LSM

Activate this option by setting (max_dom):

spp=1,1

To perturb a single physics package’s parameterization, set spp_conv=1, spp_pbl=1, or spp_lsm=1. For implementation details, see Jankov et al.

The perturbation field is saved in the WRF history/output files (wrfout*) as:

pattern_spp_conv*

pattern_spp_pbl*

pattern_spp_lsm*

Stochastic Perturbations to the Boundary Conditions (perturb_bdy)

The following two options are available:

- perturb_bdy=1

The stochastic random field perturbs boundary tendencies for wind and potential temperature. This option runs independently of SKEBS (skebs=1) and may be used with or without setting skebs=1, which operates solely on the interior grid. Note that this option generates a domain-size random array, thus computation time may increase.

- perturb_bdy=2

Perturbations to boundary tendencies are introduced using a user-defined pattern. The arrays field_u_tend_perturb, field_v_tend_perturb, and field_t_tend_perturb are initialized and called for this purpose. These arrays need to be populated with the desired pattern in either the spec_bdytend_perturb section in wrf/share/module_bc.F or the spec_bdy_dry_perturb section in wrf/dyn_em/module_bc_em.F. After modifying these files, WRF must be recompiled; neither a clean -a nor a reconfigure is required.

Stochastic perturbations to the boundary tendencies in WRF-CHEM (perturb_chem_bdy)

A random pattern perturbs the chemistry boundary tendencies in WRF-Chem. For this application, WRF-Chem must be compiled at the time of the WRF compilation.

Activate this option by setting (max_dom):

rand_perturb=1,1

The perturb_chem_bdy option runs independently of rand_perturb and therefore may be run with or without the rand_perturb scheme, which operates solely on the interior grid. However, perturb_chem_bdy=1 requires generating a domain-sized random array to apply perturbations in the lateral boundary zone, so computation time may increase. When running WRF-Chem with have_bcs_chem=.true. in the &chem namelist.input record, chemical LBCs read from wrfbdy_d01 are perturbed with the random pattern created by rand_perturb=1.

WRF-Solar stochastic ensemble prediction system (WRF-Solar EPS)

WRF-Solar includes a stochastic ensemble prediction system (WRF-Solar EPS) tailored for solar energy applications (Yang et al., 2021; Kim et al., 2022). The stochastic perturbations can be introduced into variables of six parameterizations, controlling cloud and radiation processes. See details in WRF-Solar EPS website and see the &stoch section in Namelist Variables.

WRF Nudging

Three types of nudging are discussed below:

Analysis Nudging

A method to nudge the WRF model toward data analysis for coarse-resolution domains

Spectral Nudging

An upper-air nudging option that selectively nudges only the coarser scales, and is otherwise set up similarly to grid-nudging, but additionally nudges geopotential height.

Observational Nudging

A method to nudge the WRF model toward observations

Note

The DFI option cannot be used with nudging options.

Analysis/Grid Nudging (Upper-air and/or Surface)

The model incorporates additional nudging terms for horizontal winds, temperature, and water vapor. These terms perform a point-by-point nudging to a 3D analysis field that has been interpolated in both space and time.

Using Analysis Nudging

Run WPS as usual to prepare input data to WRF.

If nudging in the nest domains, process all time periods for all domains.

If using surface-analysis nudging, run OBSGRID after running metgrid. OBSGRID outputs the wrfsfdda_d01 file that WRF requires for this option.

Set the following in &fdda in namelist.input before running real.exe. (max_dom) indicates a value should be applied for each domain you wish to nudge.

grid_fdda=1

turns on analysis nudging (max_dom)

gfdda_inname='wrffdda_d<domain>'

the name of the file to be written out by the real program

gfdda_interval_m

time interval of input data in minutes (max_dom)

gfdda_end_h

end time of grid-nudging in hours (max_dom)

The following additional options can be set (see examples.namelist in the test/em_real directory of the WRF code for details):

io_form_gfdda=2

analysis data I/O format (2=netcdf)

fgdt

calculation frequency (mins) for grid-nudging; 0=every time step (max_dom)

if_no_pbl_nudging_uv

1=no nudging of u and v in the PBL, 0=nudging in the PBL (max_dom)

if_no_pbl_nudging_t

1=no nudging of temperature in the PBL, 0=nudging in the PBL (max_dom)

if_no_pbl_nudging_q

1=no nudging of qvapor in the PBL, 0=nudging in the PBL (max_dom)

guv=0.0003

nudging coefficient for u and v (sec-1) (max_dom)

gt=0.0003

nudging coefficient for temperature (sec-1) (max_dom)

gq=0.00001

nudging coefficient for qvapor (sec-1) (max_dom)

if_ramping

0=nudging ends as a step function; 1=nudging is ramped down at the end of the period

dtramp_min

time (mins) for the ramping function (used when if_ramping=1)

If using surface analysis nudging, set:

grid_sfdda=1

turns on surface analysis nudging (max_dom)

sgfdda_inname='wrfsfdda_d<domain>'

the defined name of the input file from OBSGRID.

sgfdda_interval_m

time interval of input data in minutes

sgfdda_end_h

end time of surface grid-nudging in hours

Alternatively, grid_sfdda=2 nudges surface air temperature and water vapor mixing ratio (as with grid_sfdda=1), but uses tendencies generated from the direct nudging approach to constrain surface sensible and latent heat fluxes, which ensures thermodynamic consistency between the atmosphere and the land surface. This option works with the YSU PBL and the Noah LSM (Alapaty et al., 2008).

Run real.exe, which creates a wrffdda_d0* file (in addition to wrfinput_d0* and wrfbdy_d01) to be used by wrf.exe.
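Most &fdda entries take one value per domain (max_dom), so generating the record programmatically can help avoid miscounted columns. The sketch below uses hypothetical helpers (fdda_record, namelist_line, and format_value are not part of WRF) and writes only the four required analysis-nudging entries listed above. The interval and end-time values in the usage line are illustrative, not recommendations.

```python
def format_value(v):
    """Render a Python value as a Fortran namelist literal."""
    if isinstance(v, bool):  # check bool first: bool is a subclass of int
        return ".true." if v else ".false."
    if isinstance(v, str):
        return f"'{v}'"
    return str(v)


def namelist_line(name, values):
    """One namelist entry with comma-separated values (one per domain)."""
    return f" {name} = " + ", ".join(format_value(v) for v in values) + ","


def fdda_record(max_dom, nudged_domains, interval_m, end_h):
    """Build a minimal &fdda record for analysis (grid) nudging.

    nudged_domains: 1-based domain ids to nudge; all others get grid_fdda=0.
    """
    domains = range(1, max_dom + 1)
    lines = [
        "&fdda",
        namelist_line("grid_fdda", [1 if d in nudged_domains else 0 for d in domains]),
        # A single file-name template; the <domain> placeholder is kept as-is
        namelist_line("gfdda_inname", ["wrffdda_d<domain>"]),
        namelist_line("gfdda_interval_m", [interval_m] * max_dom),
        namelist_line("gfdda_end_h", [end_h] * max_dom),
        "/",
    ]
    return "\n".join(lines)


# Hypothetical case: two domains, nudge only d01, 6-hourly analyses, 24 h of nudging
print(fdda_record(2, {1}, 360, 24))
```

The printed record can be merged into the &fdda section of namelist.input by hand; any of the optional settings listed above would be added the same way.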

Note

For additional guidance, see Steps to Run Analysis Nudging, along with the test/em_real/examples.namelist file provided with the WRF code.
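A nudging coefficient G (in s-1) acts as a Newtonian relaxation rate. If the nudging term acted alone, the model-minus-analysis difference would decay with an e-folding time of 1/G. This simplification ignores all other tendencies, so it is only a rough guide to how strongly each field is pulled toward the analysis. The arithmetic below uses the example coefficients from the option list above.

```python
def efolding_minutes(coef_per_s):
    """Relaxation (e-folding) time in minutes for a nudging coefficient in s-1."""
    return 1.0 / coef_per_s / 60.0


# Example coefficients from the analysis-nudging option list
for name, g in [("guv", 3.0e-4), ("gt", 3.0e-4), ("gq", 1.0e-5)]:
    print(f"{name}={g:g} s-1 -> e-folding time ~{efolding_minutes(g):.0f} min")
```

With these values, winds and temperature relax on a timescale of about 56 minutes, and water vapor on about 1667 minutes (roughly 28 hours). This is why gq is usually much smaller than guv and gt.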

Spectral Nudging¶

Set the following parameters in the &fdda record of namelist.input to use this option. (max_dom) indicates that a value should be applied for each domain:

grid_fdda=2

turns on spectral nudging (max_dom)

xwavenum = 3

defines the number of waves in the domain's x-direction; this is the maximum wave that is nudged

ywavenum = 3

defines the number of waves in the domain's y-direction; this is the maximum wave that is nudged
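Because xwavenum and ywavenum count waves across the whole domain, the shortest nudged wavelength depends on domain size. The sketch below assumes that wavenumbers 1 through xwavenum are retained, so the shortest nudged wave is the domain length divided by xwavenum. It also assumes the domain length is (e_we - 1) * dx. The helper name and the domain values are hypothetical.

```python
def shortest_nudged_wavelength_km(n_points, dx_km, wavenum):
    """Shortest wavelength (km) nudged along one axis.

    n_points: staggered dimension (e_we or e_sn); the domain spans
    (n_points - 1) * dx. Waves 1..wavenum are nudged, so the shortest
    nudged wave is domain_length / wavenum.
    """
    domain_length_km = (n_points - 1) * dx_km
    return domain_length_km / wavenum


# Hypothetical domain: e_we=301, dx=12 km, xwavenum=3
print(shortest_nudged_wavelength_km(301, 12.0, 3))  # 1200.0
```

In this example, only features longer than about 1200 km in the x-direction are nudged, and smaller scales evolve freely. Doubling the domain width with the same xwavenum would double that cutoff.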

Observational Nudging¶

As with Analysis/Grid Nudging (Upper-air and/or Surface), this option adds nudging terms for horizontal winds, temperature, and water vapor. In obs-nudging, however, points near observations are nudged based on the model error at the observation site. This option is suitable for fine-scale or asynoptic observations. For details, see the Observation Nudging Users Guide, Experimental Nudging Options, and README.obs_fdda in WRF/test/em_real/.

Using Observational Nudging

Standard WPS input, plus station observation files generated with OBSGRID, is required. OBSGRID outputs OBS_DOMAIN10* (for domain 1), OBS_DOMAIN20* (for domain 2), etc. These files must be concatenated into a single file per domain (e.g., OBS_DOMAIN101 for d01).

Observation nudging is then activated during the WRF run using the following &fdda namelist settings. (max_dom) indicates that a value should be applied for each domain you wish to nudge.

obs_nudge_opt=1

turns on observational nudging (max_dom)

fdda_start

obs nudging start time in minutes (max_dom)

fdda_end

obs nudging end time in minutes (max_dom)

And the following should be set in the &time_control record:

auxinput11_interval_s

interval in seconds for observation data; set to an interval small enough to include all observations

Below are additional namelist options (set in the &fdda record):

max_obs=150000

The maximum number of observations used on a domain during any given time window

obs_nudge_wind

set to 1 to nudge wind, 0=off (max_dom)

obs_coef_wind=6.E-4

nudging coefficient for wind (s-1) (max_dom)

obs_nudge_temp

set to 1 to nudge temperature, 0=off (max_dom)

obs_coef_temp=6.E-4

nudging coefficient for temperature (s-1) (max_dom)

obs_nudge_mois

set to 1 to nudge water vapor mixing ratio, 0=off (max_dom)

obs_coef_mois=6.E-4

nudging coefficient for water vapor mixing ratio (s-1) (max_dom)

obs_rinxy=240.

horizontal radius of influence in km (max_dom)

obs_rinsig=0.1

vertical radius of influence in eta

obs_twindo=0.6666667

half-period time window over which an observation will be used for nudging, in hours (max_dom)

obs_npfi=10

frequency in coarse grid timesteps for diagnostic prints

obs_ionf=2

frequency in coarse grid timesteps for observational input and error calculation (max_dom)

obs_idynin

set to 1 to turn on the option to use a "ramp-down" function for dynamic initialization, to gradually turn off FDDA before the pure forecast

obs_dtramp=40.

time period in minutes over which nudging is ramped down from one to zero, when obs_idynin=1 is set

obs_prt_freq=10

frequency in obs index for diagnostic printout (max_dom)

obs_prt_max=1000

maximum allowed obs entries in diagnostic printout

obs_ipf_errob=.true.

true=print obs error diagnostics; false=off

obs_ipf_nudob=.true.

true=print obs nudge diagnostics; false=off

obs_ipf_in4dob=.true.

true=print obs input diagnostics; false=off

Note

For additional information and namelist options related to nudging, see the examples.namelist file in the WRF code's test/em_real directory.
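The observation-file concatenation step described under Using Observational Nudging (one file per domain, e.g., OBS_DOMAIN101 for d01) is usually done with a shell cat. The sketch below is a Python equivalent using a hypothetical helper, concatenate_obs_files, which is not part of OBSGRID or WRF. It makes two assumptions: the OBS_DOMAIN<d>0* naming given above, and that sorting the file names puts them in time order. Adjust the pattern if your OBSGRID output is named differently. All inputs are read before anything is written, because the output name (OBS_DOMAIN101) also matches the input pattern.

```python
import glob
import os


def concatenate_obs_files(domain, directory="."):
    """Merge OBSGRID's OBS_DOMAIN<d>0* files into a single OBS_DOMAIN<d>01.

    Files are joined byte-for-byte in sorted name order (assumed to be
    time order). Inputs are read first, since the output overwrites one
    of them.
    """
    pattern = os.path.join(directory, f"OBS_DOMAIN{domain}0*")
    inputs = sorted(glob.glob(pattern))
    if not inputs:
        raise FileNotFoundError(f"no files match {pattern}")
    chunks = []
    for path in inputs:
        with open(path, "rb") as f:
            chunks.append(f.read())
    output = os.path.join(directory, f"OBS_DOMAIN{domain}01")
    with open(output, "wb") as f:
        f.write(b"".join(chunks))
    return output


# Usage (in the OBSGRID output directory): one merged file per domain
# concatenate_obs_files(1)
# concatenate_obs_files(2)
```

Run the helper only once per domain. A second run would treat the already-merged OBS_DOMAIN101 as an input and duplicate its contents.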

Digital Filter Initialization¶

Digital Filter Initialization (DFI)

A method to remove initial model imbalance, as measured, for example, by the surface pressure tendency.

If you are interested in the 0-6 hour simulation/forecast, the DFI option can be helpful. It runs a digital filter during a short model integration, backward and forward, before starting the forecast. The filtering step and the full model run are completed as a single simulation. DFI can be used for multiple domains with concurrent nesting, when feedback is disabled.

Using DFI

There are no special data preparation requirements; run WPS as usual.

Open the examples.namelist file in the wrf/test/em_real/ directory. Copy the &dfi_control section into the namelist.input file, and adapt the settings for your specific case. The following are typical options:

dfi_opt=3

the DFI option used; 0=no DFI; 1=digital filter launch; 2=diabatic DFI; 3=twice DFI (option 3 is recommended); set to 0 for restarts

dfi_nfilter=7

the digital filter type used; 0=uniform; 1=Lanczos; 2=Hamming; 3=Blackman; 4=Kaiser; 5=Potter; 6=Dolph window; 7=Dolph; 8=recursive high-order; option 7 is recommended

dfi_cutoff_seconds=3600

cutoff period (in seconds) for the filter (no longer than the filter window)

dfi_write_filtered_input=.true.

option to produce a filtered initial condition file (wrfinput_initialized_d01) when running wrf.exe

Typically, the stop times are set so that integration proceeds backward for 30 to 60 minutes and forward for half that duration. The namelist options are:

dfi_bckstop_year

dfi_bckstop_month

dfi_bckstop_day

dfi_bckstop_hour

dfi_bckstop_minute

dfi_bckstop_second

dfi_fwdstop_year

dfi_fwdstop_month

dfi_fwdstop_day

dfi_fwdstop_hour

dfi_fwdstop_minute

dfi_fwdstop_second

For a constant boundary condition, set constant_bc=.true. in the &bdy_control namelist record.

To use a DFI time step that differs from the standard time_step, set time_step_dfi.
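The dfi_bckstop_* and dfi_fwdstop_* entries hold the calendar times at which the backward and forward filter integrations stop. Working them out by hand is error-prone when the window crosses midnight or a month boundary. The sketch below uses hypothetical helpers (dfi_stop_times and dfi_namelist_lines are not part of WRF) with a 60-minute backward window, which is one choice from the 30 to 60 minute range given above, and a forward window of half that length.

```python
from datetime import datetime, timedelta


def dfi_stop_times(start, backward_min=60):
    """Return (bckstop, fwdstop): integrate backward for backward_min
    minutes, then forward for half that duration."""
    bckstop = start - timedelta(minutes=backward_min)
    fwdstop = start + timedelta(minutes=backward_min / 2)
    return bckstop, fwdstop


def dfi_namelist_lines(prefix, t):
    """Expand a datetime into the six dfi_<prefix>stop_* namelist entries."""
    fields = ["year", "month", "day", "hour", "minute", "second"]
    return [f" dfi_{prefix}stop_{name} = {getattr(t, name)}," for name in fields]


start = datetime(2024, 1, 15, 0, 0, 0)  # hypothetical model start time
bck, fwd = dfi_stop_times(start)
for line in dfi_namelist_lines("bck", bck) + dfi_namelist_lines("fwd", fwd):
    print(line)
```

With a 00 UTC start, the backward stop falls on the previous day (23:00 on 14 January). The datetime arithmetic handles that rollover automatically.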

Note

DFI cannot be used with nudging options.

DFI cannot be used when multi_bdy_files=.true. is set.

Bucket Options¶

WRF Bucket Options

Options to maintain accuracy in rainfall accumulation (RAINC, RAINNC) and/or radiation budget accumulation (ACSWUPT, ACLWDNBC) during months- to years-long simulations.

With 32-bit floating-point precision, adding a small number to a very large one loses accuracy. In long simulations (months to years), small increments may therefore be lost entirely. Bucket options mitigate this by storing part of the term as an integer count, which is incremented each time the accumulated value reaches the bucket value.
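The precision loss can be reproduced outside WRF by emulating 32-bit arithmetic. The sketch below rounds every intermediate result to single precision with Python's struct module. It compares a single accumulator with a bucketed one (an integer counter plus a small remainder, in the style of I_RAINNC and RAINNC). The 40000 mm starting total and the 0.001 mm per-step increment are hypothetical values chosen to show the effect. This is not WRF's actual accumulation code.

```python
import struct


def f32(x):
    """Round a Python float (64-bit) to the nearest 32-bit float."""
    return struct.unpack("f", struct.pack("f", x))[0]


def accumulate_plain(start, increment, steps):
    """Add increment to a single 32-bit accumulator, as without buckets."""
    total = f32(start)
    for _ in range(steps):
        total = f32(total + f32(increment))
    return total


def accumulate_bucketed(start, increment, steps, bucket):
    """Keep an integer bucket count plus a small 32-bit remainder."""
    count = int(start // bucket)
    remainder = f32(start - count * bucket)
    for _ in range(steps):
        remainder = f32(remainder + f32(increment))
        if remainder >= bucket:  # bucket full: bump the integer counter
            count += 1
            remainder = f32(remainder - bucket)
    return count, remainder


# 1000 steps of 0.001 mm added to 40000 mm already accumulated
print(accumulate_plain(40000.0, 0.001, 1000))  # 40000.0: every increment is lost
count, rem = accumulate_bucketed(40000.0, 0.001, 1000, 100.0)
print(count * 100.0 + rem)  # close to 40001.0
```

Near 40000, adjacent 32-bit floats are about 0.004 apart, so a 0.001 increment rounds away at every step. The bucketed remainder stays small, so the same increments are kept.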

Water Accumulation¶

In the &physics namelist record, set bucket_mm (water accumulation bucket reset value in mm). When wrf.exe runs, the following two terms are produced:

RAINNC

I_RAINNC

where RAINNC only contains the remainder. Use the following equation to retrieve the total from the output:

total = RAINNC + bucket_mm * I_RAINNC

A reasonable bucket value may be based on a monthly accumulation (e.g., 100 mm). Use the following equation to find total precipitation:

total precipitation = RAINC + RAINNC, where

Total RAINNC = RAINNC + bucket_mm * I_RAINNC

Total RAINC = RAINC + bucket_mm * I_RAINC
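The equations above can be applied directly in post-processing. The sketch below assumes the remainder and counter values (RAINC, I_RAINC, RAINNC, I_RAINNC) have already been read from a wrfout file, and uses made-up values for a single grid point. The helper names are hypothetical. Because the functions only add and multiply, they work the same way on NumPy arrays read from the output.

```python
def bucket_total(remainder, bucket_count, bucket_mm):
    """Reconstruct an accumulated field from its remainder and bucket count."""
    return remainder + bucket_mm * bucket_count


def total_precipitation(rainc, i_rainc, rainnc, i_rainnc, bucket_mm):
    """Total precipitation (mm) = total RAINC + total RAINNC."""
    return bucket_total(rainc, i_rainc, bucket_mm) + bucket_total(rainnc, i_rainnc, bucket_mm)


# Hypothetical values at one grid point with bucket_mm = 100.
print(total_precipitation(rainc=12.5, i_rainc=3, rainnc=40.0, i_rainnc=7, bucket_mm=100.0))  # 1052.5
```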

Radiation Accumulation¶

In the &physics namelist record, set bucket_J (energy accumulation bucket reset value in Joules). Radiation accumulation terms (e.g., ACSWUPT) are in J/m2. The mean flux over a period is the difference between the accumulated values at the end and start of the period, divided by the elapsed time in seconds, which yields W/m2. A typical bucket_J value for monthly accumulation is 1.e9 J. The total is calculated as follows (example for ACSWUPT; other radiative terms are similar):

total = ACSWUPT+bucket_J*I_ACSWUPT
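Combining the total formula with the mean-flux rule gives the average radiative flux between two output times. The bucket correction matters whenever the counter changes between the two times. The sketch below uses a hypothetical helper and made-up ACSWUPT values 24 hours apart, with bucket_J = 1.e9, where I_ACSWUPT increases from 2 to 3 during the period.

```python
def mean_flux_w_m2(acc_start, i_start, acc_end, i_end, bucket_j, seconds):
    """Mean flux (W/m2) between two output times from a bucketed J/m2 accumulation."""
    total_start = acc_start + bucket_j * i_start
    total_end = acc_end + bucket_j * i_end
    return (total_end - total_start) / seconds


# Hypothetical ACSWUPT values 24 h apart; the bucket resets once in between
flux = mean_flux_w_m2(acc_start=9.97e8, i_start=2, acc_end=5.64e6, i_end=3,
                      bucket_j=1.0e9, seconds=86400)
print(flux)  # 100.0
```

Differencing the raw ACSWUPT values alone would give a large negative "flux" here, because the remainder dropped when the bucket reset.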

Global Simulations¶

Note

For global simulations, users are strongly encouraged to use the NSF NCAR MPAS model instead of WRF.

Global WRF modeling is not commonly used or tested. Some physics and diffusion options may not work well with polar filters. Positive-definite and monotonic advection options do not work with polar filters in a global run, because polar filters can generate negative scalar values. As a result, WRF-Chem cannot be run with positive-definite and monotonic options in a global setup.

Using a Global Domain

Run WPS, starting with the namelist template namelist.wps.global.

Set map_proj='lat-lon' and the grid dimensions e_we and e_sn. Save the file and copy it to namelist.wps.

dx and dy do not need to be set. Geogrid calculates the grid distances, and their values can be found in the global attribute section of the geogrid output files (geo_em.d0*).

Run geogrid.exe.

Use the command

ncdump -h geo_em.d01.nc

to see the grid distances to set for dx and dy in WRF's namelist.input. Grid distances in the x and y directions may differ, but it is best to keep them similar. WRF and WPS assume the earth is a sphere with a radius of 6370 km. There are no restrictions on grid dimensions, but for effective use of WRF's polar filter, set the east-west dimension to 2^P * 3^Q * 5^R + 1 (where P, Q, and R are any non-negative integers).

Run the remaining WPS programs for only a single time period.

Run real.exe for a single time period. Because the domain is global, lateral boundary conditions (wrfbdy_d01) are not needed.

Copy namelist.input.global to namelist.input, adapting it to your configuration.

Run wrf.exe.
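The polar-filter guideline from the steps above (east-west dimension = 2^P * 3^Q * 5^R + 1) can be checked before running geogrid. The sketch below treats that dimension as e_we. It tests whether e_we - 1 has no prime factors other than 2, 3, and 5, and searches upward for the nearest value that satisfies the rule. The function names are hypothetical and not part of WPS or WRF.

```python
def is_5_smooth(n):
    """True if n > 0 has no prime factors other than 2, 3, and 5."""
    if n < 1:
        return False
    for p in (2, 3, 5):
        while n % p == 0:
            n //= p
    return n == 1


def is_good_global_e_we(e_we):
    """Check e_we = 2**P * 3**Q * 5**R + 1 with P, Q, R >= 0."""
    return is_5_smooth(e_we - 1)


def next_good_global_e_we(e_we):
    """Smallest value >= e_we that satisfies the rule."""
    while not is_good_global_e_we(e_we):
        e_we += 1
    return e_we


print(is_good_global_e_we(361))    # True: 360 = 2**3 * 3**2 * 5
print(next_good_global_e_we(363))  # 376: 375 = 3 * 5**3
```

For example, a 1-degree global grid with e_we=361 already satisfies the rule. A value such as 363 does not, and the nearest larger value that does is 376.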

I/O Quilting¶

The I/O Quilting option reserves a few processors to handle output only. This can improve performance when the domain is very large and/or when the time taken to write each output is significant compared to the time taken to integrate the model between output times.

Note

This option should be used with care, and only by users highly experienced with parallel computing.

To use quilting, set the following in the namelist’s &namelist_quilt record:

nio_tasks_per_group : Number of processors to use per IO group for IO quilting (1 or 2 are typically sufficient)

nio_groups : The number of IO groups (default is 1)
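When planning a job with quilting, it helps to know how many MPI tasks remain for the model integration. The sketch below assumes that the I/O tasks (nio_groups * nio_tasks_per_group) are taken out of the total number of MPI tasks requested at launch, with the rest used for computation. Check this against your own run's rsl output. The helper name is hypothetical.

```python
def quilting_task_split(total_mpi_tasks, nio_groups=1, nio_tasks_per_group=1):
    """Return (compute_tasks, io_tasks), assuming the I/O tasks are
    taken from the total MPI task count requested at launch."""
    io_tasks = nio_groups * nio_tasks_per_group
    compute_tasks = total_mpi_tasks - io_tasks
    if compute_tasks < 1:
        raise ValueError("not enough MPI tasks left for computation")
    return compute_tasks, io_tasks


# Hypothetical job: 130 MPI tasks, one I/O group with 2 tasks
print(quilting_task_split(130, nio_groups=1, nio_tasks_per_group=2))  # (128, 2)
```

Under this assumption, requesting a few extra tasks (here 130 rather than 128) keeps the compute decomposition unchanged when quilting is switched on.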

Note

This option is only available for use with wrf.exe. It does not work with real.exe or ndown.exe.