Climate Simulation

Climate simulations span months or years and require continuous input data, such as reanalysis or future climate model output. Sea Surface Temperature (SST) is crucial for climate runs. Global atmospheric data often include SST, but the resolution may be too coarse. In that case, supplement with a higher-resolution SST dataset, and make sure SST is updated continuously throughout the run.

Because this is only a test exercise, the case runs for just 48 hours and simulates a past event.

Important

Save the WPS files from your single-domain/nested exercises. In the wps directory, create a new folder to store the geo_em*, FILE*, met_em*, and namelist.wps files.

Geogrid

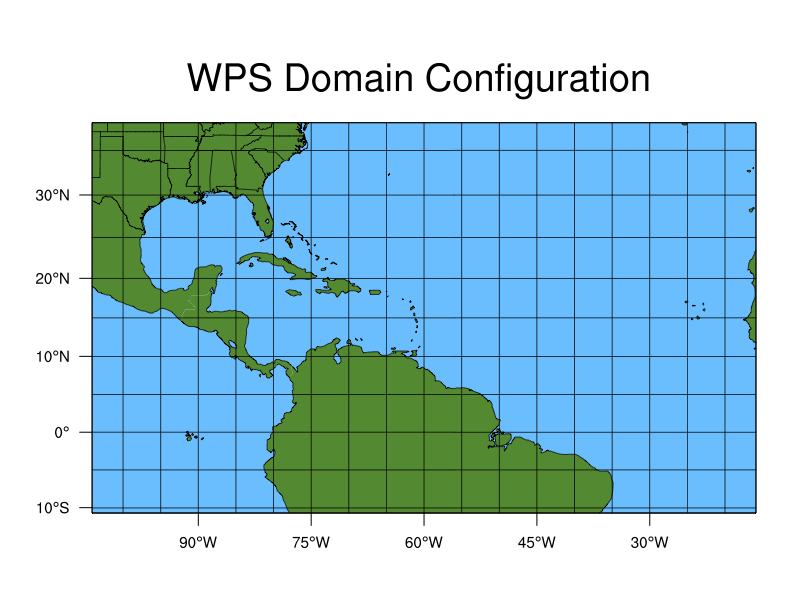

Edit namelist.wps to configure the following domain:

A single domain over North America and the North Atlantic

Domain size: 110 x 65 grid points

Projection: mercator (because the domain is closer to the equator)

Grid resolution: 90 km

In addition to the above modification, use the following namelist.wps settings to place the domain in the right location on the Earth:

    &geogrid
     max_dom = 1,
     ref_lat = 15.00,
     ref_lon = -60.00,
     truelat1 = 0.0,
     truelat2 = 0.0,
     stand_lon = -60.00,

Note

Resolutions finer than 90 km are typically used for climate simulations, but this case uses a coarser setup to save time and computational cost.
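To get a feel for how large this domain is, the arithmetic can be sketched in Python. This is only an illustration: the function names are hypothetical, the extent is measured in projected (map) space, and the ground-spacing estimate assumes the standard Mercator relationship in which the nominal grid spacing is true at truelat1 and shrinks with the cosine of latitude.

```python
import math

def projected_extent_km(e_we, e_sn, dx_km):
    """Width and height of the domain in projected (map) space, in km."""
    # e_we and e_sn count grid points, so there is one fewer cell per direction.
    return (e_we - 1) * dx_km, (e_sn - 1) * dx_km

def mercator_ground_spacing_km(dx_km, lat_deg, truelat1_deg=0.0):
    """Approximate ground distance covered by one grid cell at lat_deg."""
    # Assumption: Mercator scaling, cos(lat) / cos(truelat1).
    return dx_km * math.cos(math.radians(lat_deg)) / math.cos(math.radians(truelat1_deg))

width, height = projected_extent_km(110, 65, 90.0)
print(width, height)                                     # 9810.0 5760.0
print(round(mercator_ground_spacing_km(90.0, 15.0), 1))  # 86.9
```

With truelat1 = 0.0, the 90 km spacing is exact at the equator, and a grid cell near ref_lat = 15.00 covers slightly less ground.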

Your domain should look like this:

Run geogrid.exe.

Ungrib

Ungrib and reformat meteorological data from reanalysis or climate models for the following event:

- Event: Hurricane Matthew, which made landfall over Haiti as a Category 4 hurricane and then weakened before reaching the U.S. coast in South Carolina.

- Duration: 48 hours

- Dates: 2016-10-06_00:00:00 to 2016-10-08_00:00:00

Because you will be using GFS meteorological input and additional SST data, you will run ungrib twice to convert the data types separately.

Ungrib GFS Data

The following describes the GFS Data:

| Field | Value |
|---|---|
| Type | Global NCEP GFS |
| Interval | Every 3 hours |
| Initial Time | 2016-10-06_00:00:00 |
| End Time | 2016-10-08_00:00:00 |
| Data Directory | /glade/campaign/mmm/wmr/wrf_tutorial/input_data/climate/ |
| File Root | gfs.0p25.2016100600.f0 |
| Vtable | Vtable.GFS |

Link in the GFS data.

./link_grib.csh /glade/campaign/mmm/wmr/wrf_tutorial/input_data/climate/gfs.0p25.2016100600.f0

Link in the correct Vtable (for GFS input, use Vtable.GFS).

Modify start/end dates/times in namelist.wps.

Because these data are available every 3 hours, set interval_seconds = 10800.

Run ungrib to create intermediate files (resulting files should have the prefix FILE).
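As a quick check on what ungrib should produce, the expected intermediate file names can be listed from the start time, end time, and interval. The following is a minimal Python sketch, not part of WPS; the helper name `intermediate_names` is hypothetical, and it assumes the usual `PREFIX:YYYY-MM-DD_HH` naming of intermediate files.

```python
from datetime import datetime, timedelta

def intermediate_names(prefix, start, end, interval_seconds):
    """List the intermediate file names expected between start and end (inclusive)."""
    fmt = "%Y-%m-%d_%H:%M:%S"
    t = datetime.strptime(start, fmt)
    stop = datetime.strptime(end, fmt)
    names = []
    while t <= stop:
        # Assumed naming convention: prefix, colon, date, underscore, hour.
        names.append(f"{prefix}:{t:%Y-%m-%d_%H}")
        t += timedelta(seconds=interval_seconds)
    return names

names = intermediate_names("FILE", "2016-10-06_00:00:00", "2016-10-08_00:00:00", 10800)
print(len(names))           # 17
print(names[0], names[-1])  # FILE:2016-10-06_00 FILE:2016-10-08_00
```

A 48-hour window at 3-hour spacing gives 16 intervals, so 17 files are expected, counting both end points.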

Ungrib SST Data

The following describes the SST Data:

| Field | Value |
|---|---|
| Type | Archived global SST GRIB1 data |
| Interval | Daily (every 24 hours) |
| Initial Time | 2016-10-06_00:00:00 |
| End Time | 2016-10-08_00:00:00 |
| Data Directory | /glade/campaign/mmm/wmr/wrf_tutorial/input_data/sst/ |
| File Root | rtg_sst_grb_hr_0.083.2016100 |
| Vtable | Vtable.SST |

Link in the SST data.

./link_grib.csh /glade/campaign/mmm/wmr/wrf_tutorial/input_data/sst/rtg_sst_grb_hr_0.083.2016100

Link in the correct Vtable (for SST input, use Vtable.SST).

Make the following changes to the &ungrib namelist record.

    &ungrib
     prefix = 'SST',

Note

These SST data are available every 24 hours. Keeping interval_seconds=10800 allows ungrib to interpolate SST data to the temporal resolution of the GFS interval.

If, for example, the SST data were available only weekly, the namelist dates would be set to span the two available files immediately before and after the case dates, while keeping interval_seconds=10800 for interpolation.

Changing the prefix prevents the GFS output from being overwritten.

Run ungrib to create SST intermediate files (the prefix should be SST).
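The idea behind the note on interval_seconds, filling 3-hourly times from daily SST data, can be illustrated with a small Python sketch. This is a conceptual example only, not ungrib's code: linear interpolation is an assumption made here for illustration, the function name is hypothetical, and the SST values are invented.

```python
def interpolate_in_time(value_before, value_after, hours_between, step_hours):
    """Linearly interpolate between two values at fixed time steps (end points included)."""
    steps = hours_between // step_hours
    return [
        value_before + (value_after - value_before) * i / steps
        for i in range(steps + 1)
    ]

# Hypothetical SST values (K) at one grid point on two consecutive days.
series = interpolate_in_time(300.0, 301.6, hours_between=24, step_hours=3)
print(len(series))  # 9 values: one per 3-hour time, including both daily end points
```

Each daily pair of SST fields therefore yields values at every GFS time in between, which is why both datasets can share the same interval_seconds.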

Metgrid

Modify the &metgrid namelist record to ensure metgrid uses both FILE and SST intermediate files:

&metgrid

fg_name = 'FILE', 'SST'

Now run metgrid, as usual.
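After metgrid finishes, it can be useful to confirm that a met_em file exists for every time period before moving on to real.exe. The sketch below is one hedged way to do that in Python; `missing_met_em` is a hypothetical helper, and it assumes the default `met_em.d<NN>.<date>.nc` output naming and that the files are in the directory you pass in.

```python
from datetime import datetime, timedelta
from pathlib import Path

def missing_met_em(directory, start, end, interval_seconds, domain=1):
    """Return the names of expected met_em files that are not in directory."""
    fmt = "%Y-%m-%d_%H:%M:%S"
    t = datetime.strptime(start, fmt)
    stop = datetime.strptime(end, fmt)
    missing = []
    while t <= stop:
        # Assumed default metgrid output name for this domain and time.
        name = f"met_em.d{domain:02d}.{t.strftime(fmt)}.nc"
        if not (Path(directory) / name).exists():
            missing.append(name)
        t += timedelta(seconds=interval_seconds)
    return missing

if __name__ == "__main__":
    gaps = missing_met_em(".", "2016-10-06_00:00:00", "2016-10-08_00:00:00", 10800)
    print(f"{len(gaps)} met_em files missing")
```

Run from the directory containing the metgrid output, an empty result means all 17 time periods are present.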

Real & WRF

Edit namelist.input to match WPS settings, using the following considerations:

These GFS data have 32 levels. Set num_metgrid_levels = 32.

Set frames_per_outfile=1 to create a wrfout* file for each history output time. This is recommended for longer runs because it limits file size, making the files more manageable.

Long runs typically require frequent stops and restarts. Set restart_interval so that restart files (wrfrst*) are written at least once daily, giving you a starting point for each restart run. Because this particular run is not a restart run, restart should be set to .false. here.

Modify time_step based on the 90 km grid spacing. time_step (in seconds) should not be larger than 6 × DX (with DX in km), which is 540 for this grid. To ensure stability, set it to 360.

This is a single domain simulation.

Using too few vertical levels for long simulations can cause systematic biases. 45 levels should be sufficient for this case.

Set e_we, e_sn, and the dates/times to match WPS settings.
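The time-step consideration above comes down to simple arithmetic, sketched here in Python. The function names are hypothetical, and the rule encoded is only the 6 × DX guideline stated in this exercise (DX in km, time step in seconds).

```python
def max_time_step_seconds(dx_km):
    """Upper bound on time_step from the 6 x DX guideline (DX in km)."""
    return 6 * dx_km

def check_time_step(time_step, dx_km):
    """True if time_step (seconds) does not exceed the guideline."""
    return time_step <= max_time_step_seconds(dx_km)

print(max_time_step_seconds(90))  # 540
print(check_time_step(360, 90))   # True
```

The chosen value of 360 sits well below the 540 upper bound, which leaves a margin for stability.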

Add the following to namelist.input in the &time_control and &physics records.

    &time_control
     auxinput4_inname = "wrflowinp_d<domain>",
     auxinput4_interval = 180,
     io_form_auxinput4 = 2,

    &physics
     sst_update = 1,

Note

These additions set SST to update every 3 hours.

SST uses auxiliary stream 4, which requires a file name specification for the real.exe output file containing the SST data, an interval, and the output format (2 = netCDF).

Do not change the syntax for the auxinput4_inname parameter (i.e., do not replace <domain> with the actual domain number). WRF understands this syntax and will translate it to the correct file name.
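The relationship between these settings and the WPS interval can be sketched in Python. This is only an illustration with hypothetical helper names: it converts interval_seconds to the minutes used by auxinput4_interval, and it mimics, rather than reproduces, how the <domain> placeholder resolves to a two-digit domain number.

```python
def auxinput4_interval_minutes(interval_seconds):
    """Convert the WPS interval (seconds) to the minutes used by auxinput4_interval."""
    if interval_seconds % 60:
        raise ValueError("interval_seconds must be a whole number of minutes")
    return interval_seconds // 60

def expand_domain_placeholder(template, domain):
    """Illustrate the file name that the <domain> placeholder stands for."""
    return template.replace("<domain>", f"{domain:02d}")

print(auxinput4_interval_minutes(10800))                    # 180
print(expand_domain_placeholder("wrflowinp_d<domain>", 1))  # wrflowinp_d01
```

In the namelist itself, leave the placeholder unexpanded; the expansion here only shows which file to expect from real.exe for domain 1.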

Now you are ready to run real and wrf. When running wrf.exe, use the “runwrf_climate.sh” batch script.

Return to the Practice Exercise home page to run another exercise.