Run the Model for a Two-way Nested Domain

When running WRF with two-way nesting, choose one of the following two options before running the WRF model.

Use all input meteorological and static data from the nested domains

All static and meteorological data for the nest(s) are obtained from the nest’s input file. This is the method used for this exercise.

Note

This is the recommended way to use two-way nesting.

Use only the input static data for nested domains

Only static data for the nest(s) are obtained from the nest’s input file. Meteorological data are interpolated from the coarse grid.

Note

This option should only be used if the nest will be started at a later time.

This exercise uses the same case as the Single Domain exercise, a severe storm outbreak over the Midwest and Southeast United States, but this time a higher-resolution nest is included.

Note

See WPS Namelist Variables from the WRF Users’ Guide for details about each parameter.

See namelist.wps Best Practices for additional information and suggestions.

Run the Geogrid Program for Two Domains

Recall the geogrid program defines the map projection, and the geographic location, size, and resolution of the domain. It also interpolates static fields (e.g., topography height, landuse category, etc.) to the domain.

Follow the steps below to configure the domains and create static files for two domains.

If the static geographic files have not already been downloaded, do this first. Unpack the file in a unique directory (e.g., in a directory called WPS_GEOG). Make sure to download the file for the “Highest Resolution of Each Mandatory Field.”

Move to the WPS directory.

Edit namelist.wps in the top-level WPS directory. Make changes to incorporate the following settings:

    &share
     max_dom = 2,

    &geogrid
     parent_id         = 1, 1
     parent_grid_ratio = 1, 3
     i_parent_start    = 1, 36
     j_parent_start    = 1, 32
     e_we              = 100, 118
     e_sn              = 100, 103
     geog_data_res     = 'default', 'default'
     dx                = 30000
     dy                = 30000
     map_proj          = 'lambert',
     ref_lat           = 38
     ref_lon           = -90
     truelat1          = 30.0
     truelat2          = 60.0
     stand_lon         = -90
     geog_data_path    = 'path-to-static-files'

Note

Only some namelist variables require settings for both domains. If there is only a single setting for a variable in the default namelist (or in the list above), do not add another column - it will give an error when you try to run.
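As a quick sanity check on the nest dimensions listed in the namelist, the sketch below tests two commonly cited nesting constraints: the nest's e_we - 1 and e_sn - 1 should be integer multiples of parent_grid_ratio, and the nest should end inside the parent domain. The helper name check_nest_dim is hypothetical and not part of WPS; the numbers are copied from the settings above.

```shell
#!/bin/sh
# Hypothetical helper (not part of WPS): sanity-check one nest dimension.
# Arguments: parent_dx_m ratio parent_start nest_end parent_end
check_nest_dim() {
    dx=$1; ratio=$2; start=$3; nest_e=$4; parent_e=$5
    # (nest_end - 1) should be an integer multiple of the grid ratio
    if [ $(( (nest_e - 1) % ratio )) -ne 0 ]; then
        echo "bad: ($nest_e - 1) is not a multiple of $ratio"
        return 1
    fi
    span=$(( (nest_e - 1) / ratio ))   # parent cells covered by the nest
    last=$(( start + span ))           # last parent index the nest reaches
    if [ "$last" -gt "$parent_e" ]; then
        echo "bad: nest extends to parent index $last, beyond $parent_e"
        return 1
    fi
    echo "ok: nest dx=$(( dx / ratio )) m, parent indices $start-$last of $parent_e"
}

check_nest_dim 30000 3 36 118 100   # west-east:   i_parent_start, e_we
check_nest_dim 30000 3 32 103 100   # south-north: j_parent_start, e_sn
```

With these settings the nest has a 10 km grid spacing and spans parent indices 36-75 (west-east) and 32-66 (south-north), well inside the 100 x 100 parent.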

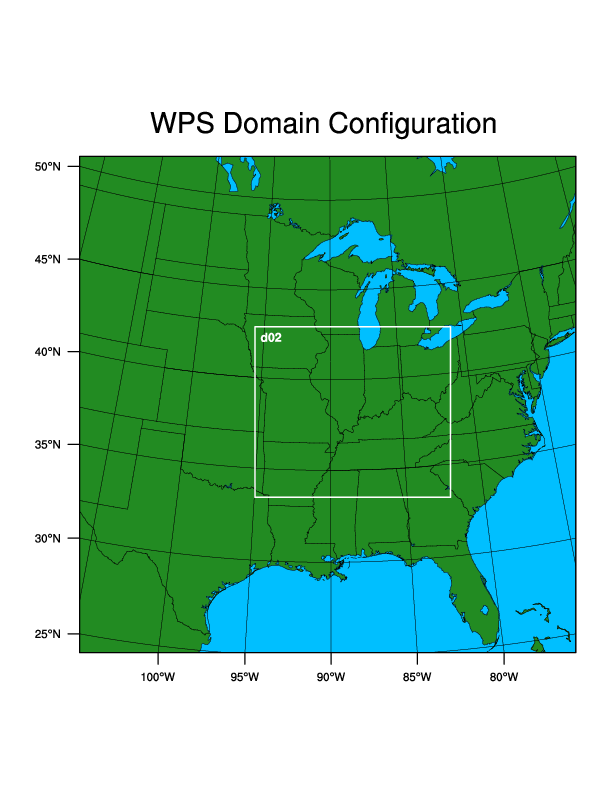

Check that the domain is in the correct location using the NCL plotgrids script, available in the WPS/util directory.

ncl util/plotgrids_new.ncl

If x-forwarding is installed, a window should pop up to the desktop with an image of the domain. It should look like the following:

Run geogrid to create static data files for these specific domains.

./geogrid.exe

Text will print to the screen as geogrid processes. Look for the following at the end of the prints:

    !!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!
    ! Successful completion of geogrid. !
    !!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!

There should now be geo_em.d01.nc and geo_em.d02.nc files in the WPS directory, as output from the geogrid program. If it is not successful, see the geogrid.log file created during the geogrid execution and try to troubleshoot the errors. If unable to do so, search the WRF & MPAS-A User Support Forum for previous solutions to the issue, and if nothing turns up, post a new thread to the forum.
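The presence of one geo_em file per domain can also be checked from the shell. This is only a sketch: check_geo_em is a hypothetical helper, and it assumes the files sit in the directory given as the second argument (the current directory by default).

```shell
#!/bin/sh
# Hypothetical helper: report whether a non-empty geo_em file exists
# for each domain 1..max_dom. Arguments: max_dom [directory]
check_geo_em() {
    max_dom=$1; dir=${2:-.}; missing=0
    d=1
    while [ "$d" -le "$max_dom" ]; do
        f=$(printf '%s/geo_em.d%02d.nc' "$dir" "$d")
        if [ -s "$f" ]; then
            echo "found   $f"
        else
            echo "missing $f"
            missing=1
        fi
        d=$(( d + 1 ))
    done
    return "$missing"   # 0 when every file was found
}

# Two domains in this exercise; point to the log if anything is missing.
check_geo_em 2 . || echo "at least one file is missing, see geogrid.log"
```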

Feel free to look at the contents of the geo_em.d01.nc and/or geo_em.d02.nc file.

Use the netCDF ncdump utility:

ncdump -h geo_em.d02.nc

Use the netCDF data browser, ncview, to view field images from the files:

ncview geo_em.d02.nc
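The ncdump header is long, so it can help to filter it down to the nest-related global attributes. The sketch below assumes the geo_em header stores attributes named DX, DY, parent_id, parent_grid_ratio, i_parent_start, and j_parent_start; these names are an assumption here, so adjust the pattern to match what ncdump -h actually prints. The function name nest_attrs is hypothetical.

```shell
#!/bin/sh
# Hypothetical filter: keep only nest-related global attributes from
# "ncdump -h" output read on standard input.
# The attribute names in the pattern are assumptions about the header.
nest_attrs() {
    grep -E ':(DX|DY|parent_id|parent_grid_ratio|i_parent_start|j_parent_start) ='
}

# Only run when ncdump and the nest file are both available.
if command -v ncdump >/dev/null 2>&1 && [ -f geo_em.d02.nc ]; then
    ncdump -h geo_em.d02.nc | nest_attrs
fi
```

If the attributes are present, the values shown should agree with the second column of the corresponding entries in namelist.wps.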

Ungrib

If you have already run the Single Domain exercise, and you still have the intermediate files created by ungrib.exe in that exercise, there is no need to run ungrib again. The ungrib program only processes the meteorological input data, which is not domain-dependent. If you have not gone through the Single Domain exercise, please go back and run the ungrib section before advancing to the next section.

Run the Metgrid Program for Two Domains

Recall that the metgrid program horizontally interpolates meteorological data (extracted by the ungrib program) to the simulation domains (defined by geogrid). This program creates input data files necessary to run the WRF model.

Follow the steps below to prepare for, and to run the metgrid program.

Ensure that the start and end dates are set correctly for the case, for both domains, and that fg_name in the &metgrid namelist record is set to FILE, which is the prefix of the intermediate files.

    &share
     start_date = '2023-03-31_00:00:00', '2023-03-31_00:00:00',
     end_date   = '2023-04-01_00:00:00', '2023-03-31_00:00:00',

    &metgrid
     fg_name = "FILE"

Note

end_date for domain 2 is equal to its start_date. Only the initial time is required for this domain because it receives all of its boundary conditions from d01. This works as long as sst_update is turned off and no nudging options are used when running wrf.exe.

Run metgrid.exe to horizontally interpolate the meteorological input data to the domain.

./metgrid.exe

Text will print to the screen as metgrid processes. Look for the following at the bottom of the prints when metgrid is complete.

    !!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!
    ! Successful completion of metgrid. !
    !!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!

If successful, the following files should now be available in the top-level WPS directory.

    met_em.d01.2023-03-31_00:00:00.nc
    met_em.d01.2023-03-31_06:00:00.nc
    met_em.d01.2023-03-31_12:00:00.nc
    met_em.d01.2023-03-31_18:00:00.nc
    met_em.d01.2023-04-01_00:00:00.nc
    met_em.d02.2023-03-31_00:00:00.nc
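The file counts follow from the dates and the 6-hourly input interval: one file at the start time plus one per interval up to the end time. The sketch below works through that arithmetic and counts the files actually present. The helper names expected_times and count_met_em are hypothetical, and the 21600-second interval is taken from the interval_seconds value used for this case.

```shell
#!/bin/sh
# Hypothetical helpers for checking metgrid output counts.

# Expected number of time levels: run_seconds / interval_seconds + 1
expected_times() {
    echo $(( $1 / $2 + 1 ))
}

# Count met_em files for one domain. Arguments: domain_number [directory]
count_met_em() {
    dir=${2:-.}
    n=0
    for f in "$dir"/met_em.d0"$1".*.nc; do
        # The unmatched pattern stays literal, so test that the file exists
        if [ -e "$f" ]; then n=$(( n + 1 )); fi
    done
    echo "$n"
}

# d01 spans 24 h (86400 s); d02 only needs its initial time (0 s span).
echo "d01: expected $(expected_times 86400 21600), found $(count_met_em 1)"
echo "d02: expected $(expected_times 0 21600), found $(count_met_em 2)"
```

For this case the expected counts are 5 for d01 and 1 for d02, matching the list above.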

Feel free to look at the contents of the met_em.d01*.nc and/or met_em.d02*.nc file(s), for example:

Use the netCDF ncdump utility:

ncdump -h met_em.d02.2023-03-31_00:00:00.nc

Use the netCDF data browser, ncview, to view field images from the files, for example:

ncview met_em.d02.2023-03-31_00:00:00.nc

Run the Real Program

Recall that the real program defines the WRF model vertical coordinate. It uses horizontally-interpolated meteorological data (met_em* files from WPS) and vertically interpolates them for use with the WRF model. It creates initial condition files and a lateral boundary file that will be used by WRF.

Follow the steps below to vertically interpolate the input data to the model coordinates.

Move to the WRF/test/em_real directory.

Link the metgrid output files for both d01 and d02 to the em_real directory.

ln -sf path-to-WPS/met_em* .

Edit namelist.input (available in the em_real directory). Make changes to incorporate the following settings:

Note

For the settings that are domain-dependent, there is no need to remove the settings for columns 3+, as the max_dom parameter indicates to the model that it only needs to process two domains.

See WRF Namelist Variables from the WRF Users’ Guide for details about each parameter.

See namelist.input Best Practices for additional information and suggestions.

    &time_control
     run_hours          = 24,
     start_year         = 2023, 2023,
     start_month        = 03, 03,
     start_day          = 31, 31,
     start_hour         = 00, 00,
     end_year           = 2023, 2023,
     end_month          = 04, 04,
     end_day            = 01, 01,
     end_hour           = 00, 00
     interval_seconds   = 21600
     input_from_file    = .true.,.true.,
     history_interval   = 180, 180,
     frames_per_outfile = 1, 1,
     restart            = .false.,
     restart_interval   = 72000,

    &domains
     time_step               = 180
     max_dom                 = 2,
     e_we                    = 100, 118
     e_sn                    = 100, 103
     e_vert                  = 45, 45,
     num_metgrid_levels      = 34
     num_metgrid_soil_levels = 4
     dx                      = 30000
     dy                      = 30000
     grid_id                 = 1, 2,
     parent_id               = 0, 1,
     i_parent_start          = 1, 36
     j_parent_start          = 1, 32
     parent_grid_ratio       = 1, 3,
     parent_time_step_ratio  = 1, 3,

Note

Only some namelist variables require settings for both domains. If there is only a single setting for a variable in the default namelist (or in the list above), do not add another column - it will give an error when you try to run.
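The relationship between the grid and time-step settings can be worked out with a little arithmetic. The sketch below derives the nest grid spacing and time step from the parent values and the two ratios. It also compares each time step with a widely used WRF rule of thumb, which is not stated in this exercise and is included here as an assumption: time_step in seconds should be at most about 6 times dx in kilometers.

```shell
#!/bin/sh
# Values copied from the &domains settings for this exercise.
dx_m=30000                 # parent grid spacing (m)
time_step=180              # parent time step (s)
grid_ratio=3               # parent_grid_ratio for d02
time_ratio=3               # parent_time_step_ratio for d02

nest_dx=$(( dx_m / grid_ratio ))        # nest grid spacing (m)
nest_dt=$(( time_step / time_ratio ))   # nest time step (s)

# Assumed rule of thumb: time_step (s) <= 6 * dx (km)
max_dt=$(( 6 * dx_m / 1000 ))
nest_max_dt=$(( 6 * nest_dx / 1000 ))

echo "d01: dx=${dx_m} m, dt=${time_step} s (guideline max ${max_dt} s)"
echo "d02: dx=${nest_dx} m, dt=${nest_dt} s (guideline max ${nest_max_dt} s)"
```

Here the nest runs at 10 km with a 60 s time step, and both domains sit exactly at the guideline limit.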

Run real.exe.

Depending on how WRF was installed (e.g., for serial or dmpar computation), the command to run real.exe will differ. Additionally, if using dmpar (parallel processing) on a cluster, it is possible a batch script is required to submit the command. Within that batch script, the same type of MPI command will be used. Use something similar to the following (it may be necessary to ask a systems administrator at your institution for guidance on the proper command for your machine/environment).

For parallel-processing (dmpar)

mpiexec -np X ./real.exe

The X in the above command indicates the number of processors to use. real.exe is a quick process and probably does not need a lot of processors - especially for this fairly small simulation. An appropriate number would be somewhere between ~1-50.

For serial processing (using a single processor)

./real.exe >& real.log

The error and output will go to the real.log file for serial computation, and for parallel computation, there will be an rsl.out and rsl.error file available for each processor. Check the end of those files for the “SUCCESS” message to ensure real.exe ran correctly. If successful, the output files wrfbdy_d01, wrfinput_d01, and wrfinput_d02 will be available in the em_real directory.
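Checking the end of the log for the "SUCCESS" message can be scripted. The following is a minimal sketch, assuming the message appears within the last five lines of the file; log_ok is a hypothetical helper, and rsl.error.0000 is used as the representative file for a parallel run.

```shell
#!/bin/sh
# Hypothetical helper: succeed if the file exists and one of its
# last five lines contains the word SUCCESS.
log_ok() {
    [ -f "$1" ] && tail -n 5 "$1" | grep -q "SUCCESS"
}

# rsl.error.0000 exists for parallel runs, real.log for serial runs.
for log in rsl.error.0000 real.log; do
    if log_ok "$log"; then
        echo "$log: SUCCESS found"
    elif [ -f "$log" ]; then
        echo "$log: no SUCCESS message, inspect the file"
    fi
done
```

The same helper can be pointed at wrf.log or the rsl files after running wrf.exe.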

Run the WRF Model for Two Domains

Recall that the WRF model uses the initial and boundary condition files generated by the real program to perform model integration, using user-specified options provided in the namelist.input file (e.g., physics options).

Run wrf.exe

Just as with real.exe, depending on how WRF was installed (e.g., for serial or dmpar computation), the command to run wrf.exe will differ.

For parallel-processing (dmpar)

mpiexec -np X ./wrf.exe

The X in the above command indicates the number of processors to use. An appropriate number of processors for this particular simulation would be somewhere between ~1-50. wrf.exe is a more computationally-intensive process than real, so the number of processors matters more. See Choosing an Appropriate Number of Processors.

For serial processing (using a single processor)

./wrf.exe >& wrf.log

Note

Running a 24-hour simulation for this nested case could take 10+ minutes to complete. To watch the progress, issue

tail -f rsl.out.0000

The error and output will go to the wrf.log file for serial computation, and for parallel computation, there will be an rsl.out and rsl.error file available for each processor. Check the end of those files for the “SUCCESS” message to ensure wrf.exe ran correctly. If successful, the output files wrfout_d01_YYYY-MM-DD_hh:mm:ss and wrfout_d02_YYYY-MM-DD_hh:mm:ss will be available in the em_real directory.

Because history_interval = 180 in the namelist, history is written every three hours, and because frames_per_outfile = 1, each history time goes into its own file. Over a 24-hour run this gives 9 files per domain (hours 0, 3, ..., 24), so there should be 18 total wrfout files, starting with the initial time of the simulation.
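The expected file count can be derived from the namelist values. The sketch below assumes one history frame is written at the initial time and one at every history_interval through the end of the run, with frames_per_outfile = 1 so that each frame is its own file. The function name expected_wrfout is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: expected number of wrfout files.
# Arguments: run_hours history_interval_minutes max_dom
expected_wrfout() {
    per_domain=$(( $1 * 60 / $2 + 1 ))   # +1 for the initial time
    echo $(( per_domain * $3 ))
}

# Count the wrfout files present in the current directory.
found=0
for f in wrfout_d0*; do
    # The unmatched pattern stays literal, so test that the file exists
    if [ -e "$f" ]; then found=$(( found + 1 )); fi
done

echo "expected $(expected_wrfout 24 180 2) wrfout files, found $found"
```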

To look at the contents in the wrfout files, use either the netCDF ncdump utility, or the ncview tool.